Deploying k3s on CentOS 7

Host topology

| Hostname | IP | CPU (cores) | Memory |
| --- | --- | --- | --- |
| Server1 | 192.168.48.101 | ≥2 | 2 GB |
| Server2 | 192.168.48.102 | ≥2 | 2 GB |
| Server3 | 192.168.48.103 | ≥2 | 2 GB |
| Agent1 | 192.168.48.104 | ≥1 | 512 MB |

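For a single-server cluster, create only Server1 plus any number of agents, then follow "3. Basic k3s".

For high availability, keep at least three server nodes, then follow "6. High-availability k3s".

In both cases, complete "2. Basic configuration" first.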
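The three-server minimum follows from how a quorum-based datastore behaves: a group of n servers keeps working only while a majority of them is alive. The sketch below shows that arithmetic, assuming the embedded etcd datastore that `--cluster-init` enables later in this guide; `tolerated_failures` is a hypothetical helper name, not part of k3s.

```shell
# Hypothetical helper: number of server nodes that can fail while a
# majority (quorum) of the n servers given in $1 is still alive.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}
```

With one or two servers the result is 0, so a single failure stops the control plane; three servers are the smallest group that survives the loss of one node.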

Basic configuration

Required for both the basic and the high-availability setup.

System initialization

Nodes: [all nodes]

Save the following script as system_init.sh:

```shell
#!/bin/bash
# Usage: sh system_init.sh <hostname> <host-octet>
if [ $# -eq 2 ]; then
    echo "Hostname will be set to: $1"
    echo "ens33 IP address will be set to: 192.168.48.$2"
else
    echo "Usage: sh $0 <hostname> <host-octet>"
    exit 2
fi
echo "--------------------------------------"
echo "1. Setting hostname: $1"
hostnamectl set-hostname "$1"
echo "2. Disabling firewalld and SELinux"
systemctl disable firewalld &> /dev/null
systemctl stop firewalld
sed -i "s#SELINUX=enforcing#SELINUX=disabled#g" /etc/selinux/config
setenforce 0
echo "3. Configuring ens33: 192.168.48.$2"
cat > /etc/sysconfig/network-scripts/ifcfg-ens33 <<EOF
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=static
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
NAME=ens33
UUID=53b402ff-5865-47dd-a853-7afcd6521738
DEVICE=ens33
ONBOOT=yes
IPADDR=192.168.48.$2
GATEWAY=192.168.48.2
PREFIX=24
DNS1=192.168.48.2
DNS2=114.114.114.114
EOF
nmcli c reload
nmcli c up ens33
echo "4. Refreshing the yum package cache"
yum clean all && yum makecache
echo "5. Writing /etc/hosts entries"
cat > /etc/hosts <<EOF
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.48.101 Server1
192.168.48.102 Server2
192.168.48.103 Server3
192.168.48.104 Agent1
EOF
echo "6. Installing required tools"
yum install wget psmisc vim net-tools telnet socat device-mapper-persistent-data lvm2 git gcc -y
echo "7. Rebooting"
reboot
```

```shell
sudo chmod +x system_init.sh
# On Server1
sudo sh system_init.sh Server1 101
# On Server2
sudo sh system_init.sh Server2 102
# On Server3
sudo sh system_init.sh Server3 103
# On Agent1
sudo sh system_init.sh Agent1 104
```
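The initialization script only checks that two arguments were given, not that the second one is a usable host number. A minimal sketch of a guard that could run before anything is changed; `valid_host_octet` is a hypothetical helper name and is not part of the original script.

```shell
# Hypothetical helper: succeed only if $1 is an integer that can serve as
# the last octet of a host address in 192.168.48.0/24 (1 to 254).
valid_host_octet() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # empty, or contains a non-digit
    esac
    [ "$1" -ge 1 ] && [ "$1" -le 254 ]
}
```

Inside system_init.sh it could be called right after the argument-count check, for example `valid_host_octet "$2" || exit 2`.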

Installing a container runtime

Decide which container runtime the cluster will use. Docker and containerd are both covered below.

Choose exactly one of the two!

Installing Docker

Nodes: [all nodes]

```shell
# Download docker-compose (through a GitHub proxy)
sudo curl -L "https://qygit.qianyisky.cn/https://github.com/docker/compose/releases/download/v2.35.1/docker-compose-linux-x86_64" -o /usr/local/bin/docker-compose
# Remove older Docker packages, the old repo file, and old data
sudo yum -y remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine
sudo yum remove docker-ce docker-ce-cli containerd.io docker-compose docker-machine docker-swarm -y
sudo rm -f /etc/yum.repos.d/docker-ce.repo
sudo rm -rf /var/lib/docker
# Install Docker CE from the Aliyun mirror and start it
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sudo yum install docker-ce -y
sudo systemctl enable --now docker
sudo chmod +x /usr/local/bin/docker-compose
# Configure registry mirrors and logging
sudo tee /etc/docker/daemon.json > /dev/null <<'EOF'
{
  "registry-mirrors": [
    "https://docker.xuanyuan.me",
    "https://docker.m.daocloud.io",
    "https://docker.1ms.run",
    "https://docker.1panel.live",
    "https://registry.cn-hangzhou.aliyuncs.com",
    "https://docker.qianyios.top"
  ],
  "max-concurrent-downloads": 10,
  "log-driver": "json-file",
  "log-level": "warn",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  },
  "data-root": "/var/lib/docker"
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
# Verify the installation
sudo docker-compose --version
sudo docker version
```

Installing containerd

Nodes: [all nodes]

```shell
sudo yum install -y yum-utils
sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
sudo yum clean all && sudo yum makecache
sudo yum install -y containerd.io
# One certs.d directory per upstream registry
sudo mkdir -p /etc/containerd/certs.d/docker.io
sudo mkdir -p /etc/containerd/certs.d/registry.k8s.io
sudo mkdir -p /etc/containerd/certs.d/k8s.gcr.io
sudo mkdir -p /etc/containerd/certs.d/ghcr.io
sudo mkdir -p /etc/containerd/certs.d/gcr.io
sudo mkdir -p /etc/containerd/certs.d/quay.io
sudo mkdir -p /etc/containerd/certs.d/registry-1.docker.io
# Mirrors for docker.io
sudo tee /etc/containerd/certs.d/docker.io/hosts.toml > /dev/null <<'EOF'
server = "https://docker.io"

[host."https://registry.cn-hangzhou.aliyuncs.com/"]
  capabilities = ["pull", "resolve"]

[host."https://docker.xuanyuan.me"]
  capabilities = ["pull", "resolve"]

[host."https://docker.m.daocloud.io"]
  capabilities = ["pull", "resolve"]

[host."https://docker.1ms.run"]
  capabilities = ["pull", "resolve"]

[host."https://docker.1panel.live"]
  capabilities = ["pull", "resolve"]

[host."https://docker.qianyios.top/"]
  capabilities = ["pull", "resolve"]

[host."https://reg-mirror.giniu.com"]
  capabilities = ["pull", "resolve"]
EOF
# Mirrors for registry-1.docker.io
sudo tee /etc/containerd/certs.d/registry-1.docker.io/hosts.toml > /dev/null <<'EOF'
server = "https://registry-1.docker.io"

[host."https://registry.cn-hangzhou.aliyuncs.com/"]
  capabilities = ["pull", "resolve"]

[host."https://docker.xuanyuan.me"]
  capabilities = ["pull", "resolve"]

[host."https://docker.m.daocloud.io"]
  capabilities = ["pull", "resolve"]

[host."https://docker.1ms.run"]
  capabilities = ["pull", "resolve"]

[host."https://docker.1panel.live"]
  capabilities = ["pull", "resolve"]

[host."https://docker.qianyios.top/"]
  capabilities = ["pull", "resolve"]

[host."https://reg-mirror.giniu.com"]
  capabilities = ["pull", "resolve"]
EOF
# Mirrors for the remaining registries
sudo tee /etc/containerd/certs.d/k8s.gcr.io/hosts.toml > /dev/null <<'EOF'
server = "https://k8s.gcr.io"

[host."https://registry.aliyuncs.com/google_containers"]
  capabilities = ["pull", "resolve"]
EOF
sudo tee /etc/containerd/certs.d/ghcr.io/hosts.toml > /dev/null <<'EOF'
server = "https://ghcr.io"

[host."https://ghcr.m.daocloud.io/"]
  capabilities = ["pull", "resolve"]
EOF
sudo tee /etc/containerd/certs.d/gcr.io/hosts.toml > /dev/null <<'EOF'
server = "https://gcr.io"

[host."https://gcr.m.daocloud.io/"]
  capabilities = ["pull", "resolve"]
EOF
sudo tee /etc/containerd/certs.d/registry.k8s.io/hosts.toml > /dev/null <<'EOF'
server = "https://registry.k8s.io"

[host."https://k8s.m.daocloud.io"]
  capabilities = ["pull", "resolve", "push"]

[host."https://registry.aliyuncs.com/v2/google_containers"]
  capabilities = ["pull", "resolve"]
EOF
sudo tee /etc/containerd/certs.d/quay.io/hosts.toml > /dev/null <<'EOF'
server = "https://quay.io"

[host."https://quay.tencentcloudcr.com/"]
  capabilities = ["pull", "resolve"]
EOF
# Generate the default config, then set the pause image, the systemd cgroup
# driver, and the certs.d path
sudo sh -c 'containerd config default > /etc/containerd/config.toml'
sudo sed -i 's#sandbox_image = "registry.k8s.io/pause:.*"#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.10"#' /etc/containerd/config.toml
sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml
sudo sed -i '/\[plugins\."io\.containerd\.grpc\.v1\.cri"\.registry\]/!b;n;s/config_path = ""/config_path = "\/etc\/containerd\/certs.d"/' /etc/containerd/config.toml
sudo systemctl daemon-reload
sudo systemctl restart containerd.service
sudo ctr image ls
```
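The hosts.toml files above all share one shape: a `server` line followed by one `[host."..."]` table per mirror. As a sketch of how the repetition could be reduced, the function below prints such a file from its arguments; `hosts_toml` is a hypothetical helper name, and it always emits the `pull` and `resolve` capabilities used by most entries above.

```shell
# Hypothetical helper: print a hosts.toml for one upstream registry.
# $1 = upstream server URL; remaining arguments = mirror URLs, in the
# order they should be listed.
hosts_toml() {
    printf 'server = "%s"\n' "$1"
    shift
    for mirror in "$@"; do
        printf '\n[host."%s"]\n  capabilities = ["pull", "resolve"]\n' "$mirror"
    done
}
```

For example, `hosts_toml https://quay.io https://quay.tencentcloudcr.com/ | sudo tee /etc/containerd/certs.d/quay.io/hosts.toml > /dev/null` would write the quay.io file shown above.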

Adding registry mirrors

Nodes: [all nodes]

```shell
sudo mkdir -p /etc/rancher/k3s
sudo tee /etc/rancher/k3s/registries.yaml > /dev/null <<'EOF'
mirrors:
  docker.io:
    endpoint:
      - "https://registry.cn-hangzhou.aliyuncs.com/"
      - "https://docker.xuanyuan.me"
      - "https://docker.m.daocloud.io"
      - "https://docker.1ms.run"
      - "https://docker.1panel.live"
      - "https://hub.rat.dev"
      - "https://docker-mirror.aigc2d.com"
      - "https://docker.qianyios.top/"
  quay.io:
    endpoint:
      - "https://quay.tencentcloudcr.com/"
  registry.k8s.io:
    endpoint:
      - "https://registry.aliyuncs.com/v2/google_containers"
  gcr.io:
    endpoint:
      - "https://gcr.m.daocloud.io/"
  k8s.gcr.io:
    endpoint:
      - "https://registry.aliyuncs.com/google_containers"
  ghcr.io:
    endpoint:
      - "https://ghcr.m.daocloud.io/"
EOF
```

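Taking a VM snapshot at this point is recommended.

Basic k3s

Make sure every node has downloaded the k3s install script.

```shell
sudo wget https://rancher-mirror.rancher.cn/k3s/k3s-install.sh
sudo chmod +x k3s-install.sh
```

This section only needs Server1 and Agent1, that is, the single-server case.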

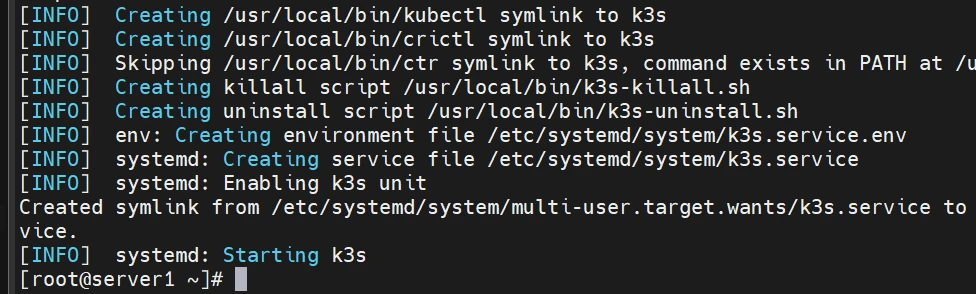

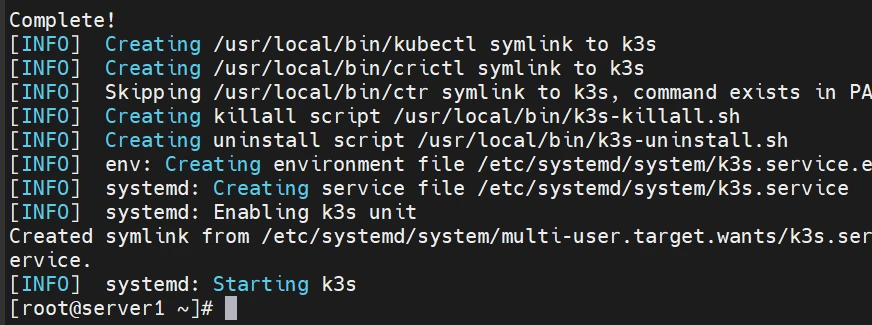

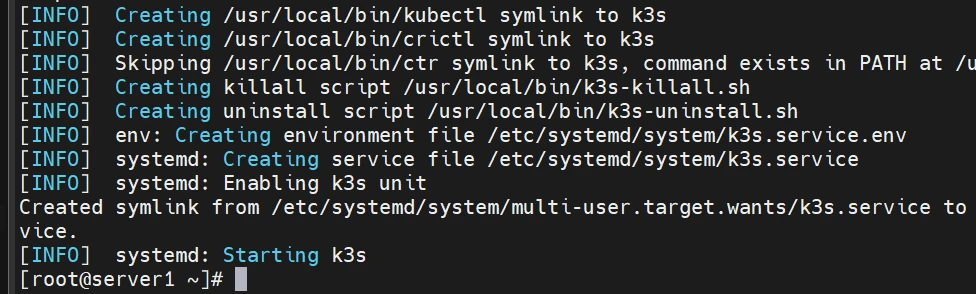

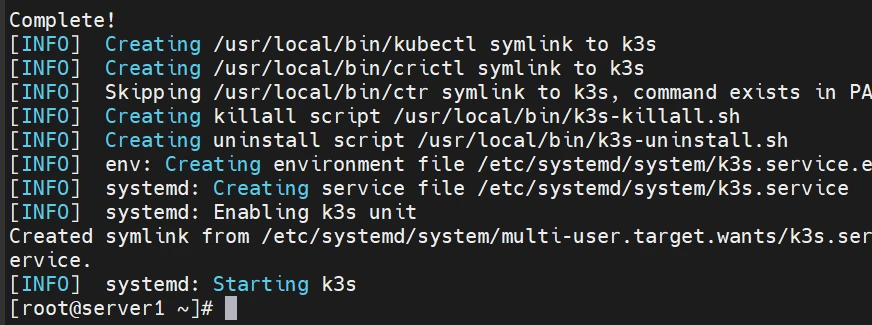

Deploying the server

Node: [Server1]

To install K3s on a single server, run the following on the server node.

Use the command that matches the container runtime you chose.

With Docker

If you mistype a parameter value, correct it and rerun the command.

`--docker` must come before all other arguments.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh --docker \
--system-default-registry "registry.cn-hangzhou.aliyuncs.com"
```

Then complete "9. Fixing 'command not found' for kubectl and similar commands as a non-root user".

With containerd

If you mistype a parameter value, correct it and rerun the command.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh \
--system-default-registry "registry.cn-hangzhou.aliyuncs.com"
```

Then complete "9. Fixing 'command not found' for kubectl and similar commands as a non-root user".

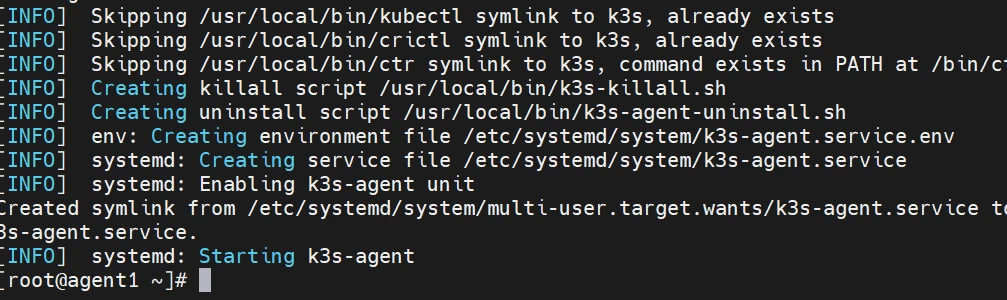

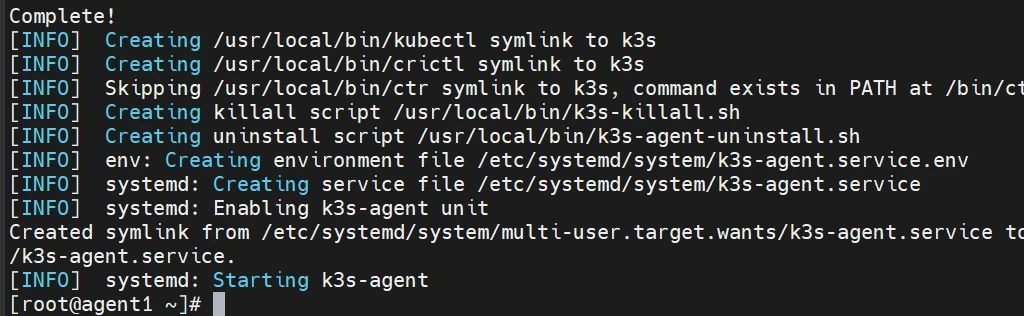

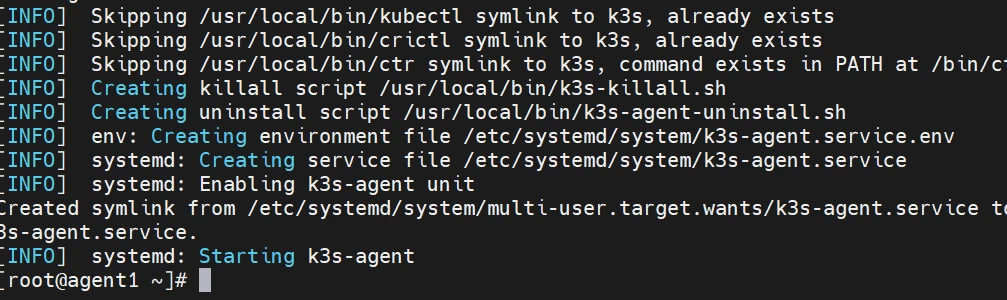

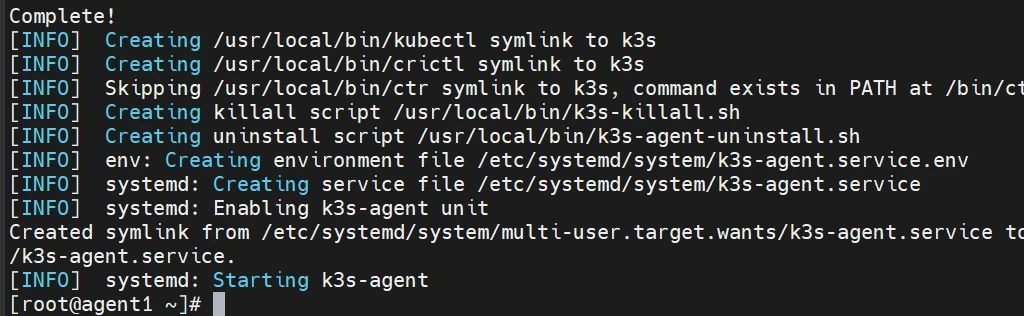

Deploying the agent node

Node: [Server1]

View the token:

```shell
sudo cat /var/lib/rancher/k3s/server/node-token
```

Example output:

K107eca1d1c601a2d308c7dd0b639ef08fa9414f729c720c0cc04337126aa966884::server:035b03761950e59b30e9f5310b92310c
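The agent commands in this guide pass only the last colon-separated field of that output as `K3S_TOKEN`. A small sketch of extracting it instead of copying by hand; `token_secret` is a hypothetical helper name.

```shell
# Hypothetical helper: print the part of a k3s node token that follows
# the last ':' (the value this guide passes as K3S_TOKEN).
token_secret() {
    printf '%s\n' "${1##*:}"
}
```

On Server1 it could be used as `token_secret "$(sudo cat /var/lib/rancher/k3s/server/node-token)"`.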

Use the command that matches the container runtime you chose.

With Docker

Node: [Agent1]

If you mistype a parameter value, correct it and rerun the command.

`--docker` must come before all other arguments.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_URL=https://192.168.48.101:6443 \
K3S_TOKEN=035b03761950e59b30e9f5310b92310c \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh --docker
```

· `035b03761950e59b30e9f5310b92310c` is the token obtained from Server1 above

· `192.168.48.101` is the IP of Server1

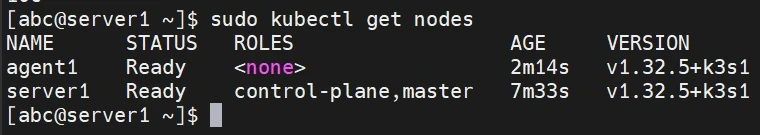

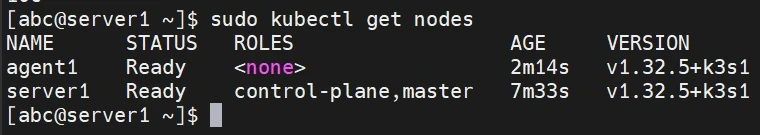

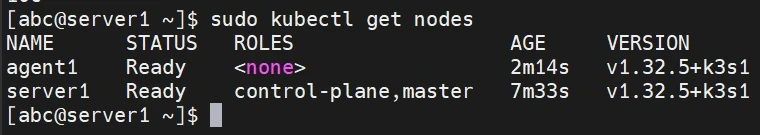

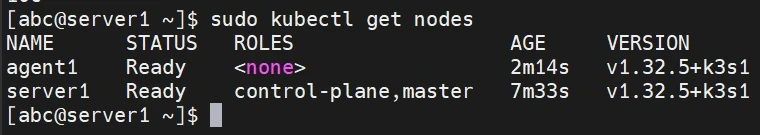

Now check on Server1 whether the agent has joined the cluster.

To deploy the dashboard, see "7. Installing the dashboard".
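One way to confirm on Server1 that the agent joined is to count the nodes reporting Ready. The sketch below parses the text output of `kubectl get nodes --no-headers`; `count_ready_nodes` is a hypothetical helper name.

```shell
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready".
# Reads the output of `kubectl get nodes --no-headers` on stdin.
count_ready_nodes() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

Run it as `sudo kubectl get nodes --no-headers | count_ready_nodes`; with Server1 and Agent1 both healthy it should print 2.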

With containerd

Node: [Agent1]

If you mistype a parameter value, correct it and rerun the command.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_URL=https://192.168.48.101:6443 \
K3S_TOKEN=035b03761950e59b30e9f5310b92310c \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh
```

· `035b03761950e59b30e9f5310b92310c` is the token obtained from Server1 above

· `192.168.48.101` is the IP of Server1

Now check on Server1 whether the agent has joined the cluster.

To deploy the dashboard, see "7. Installing the dashboard".

High-availability k3s

Make sure every node has downloaded the k3s install script.

```shell
sudo wget https://rancher-mirror.rancher.cn/k3s/k3s-install.sh
sudo chmod +x k3s-install.sh
```

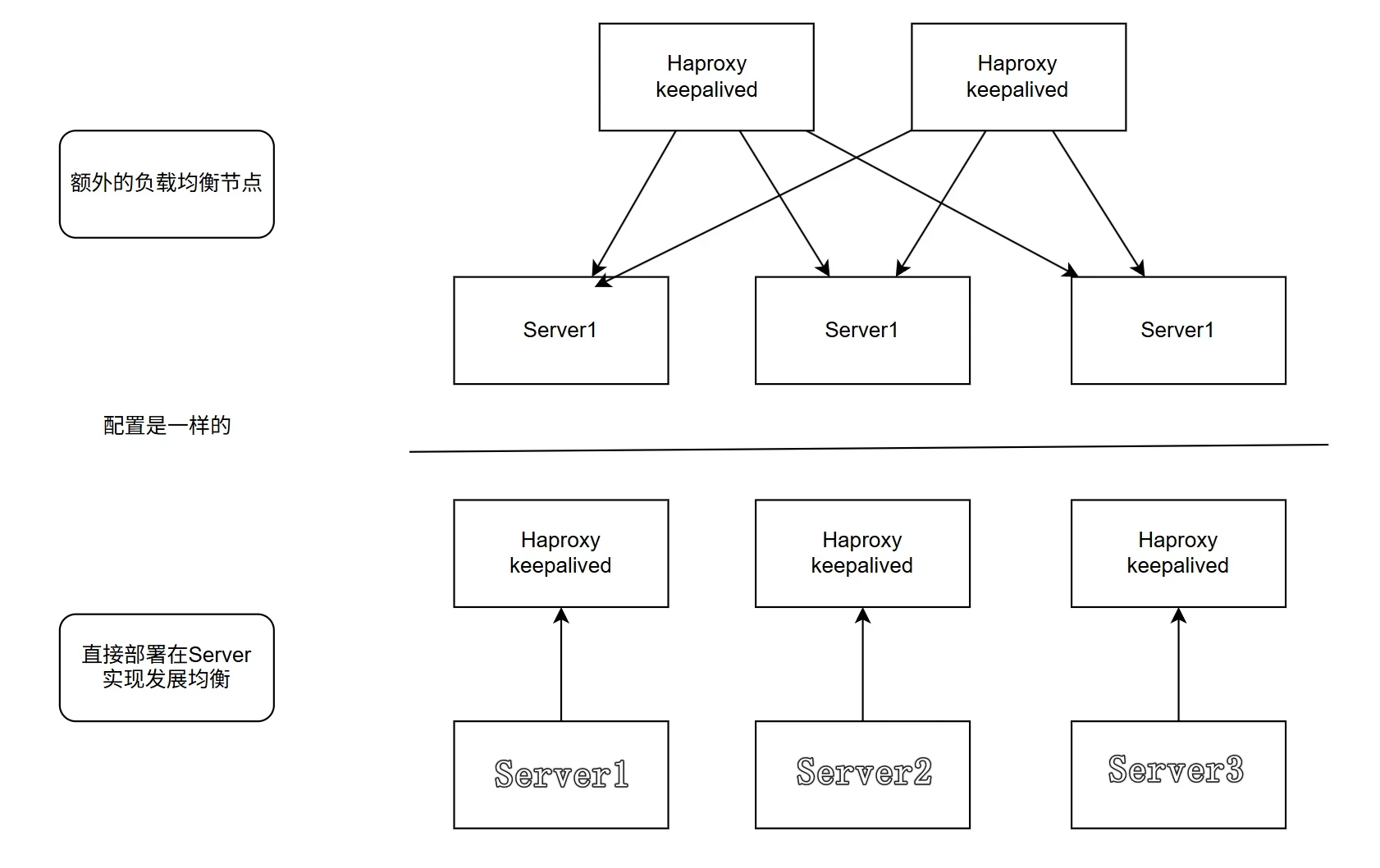

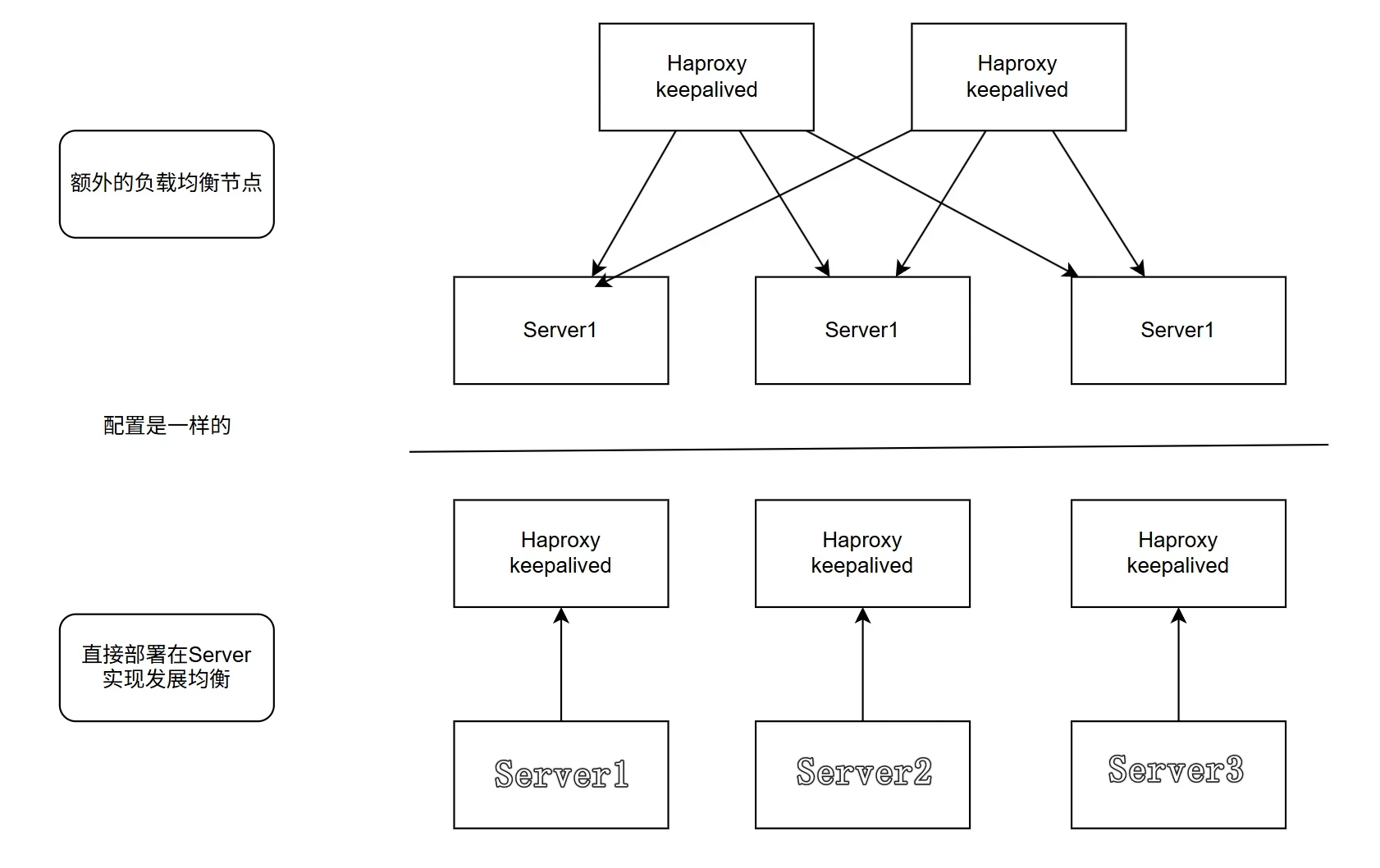

Configuring the cluster load balancer

Official guide: Cluster Load Balancer

Strictly speaking, two extra nodes should act as the load balancers and hold the high-availability VIP. To keep testing simple, this guide runs them directly on the server nodes, which is the second approach in the figure.

Nodes: [all servers]

```shell
sudo yum install -y haproxy keepalived
sudo tee /etc/haproxy/haproxy.cfg > /dev/null <<'EOF'
frontend k3s-frontend
    bind *:16443
    mode tcp
    option tcplog
    timeout client 30s
    default_backend k3s-backend

backend k3s-backend
    mode tcp
    option tcp-check
    balance roundrobin
    timeout connect 5s
    timeout server 30s
    default-server inter 10s downinter 5s
    server server-1 192.168.48.101:6443 check
    server server-2 192.168.48.102:6443 check
    server server-3 192.168.48.103:6443 check
EOF
```

Node: [Server1]

```shell
sudo tee /etc/keepalived/keepalived.conf > /dev/null <<'EOF'
global_defs {
    enable_script_security
    script_user root
}

vrrp_script chk_haproxy {
    script 'killall -0 haproxy'
    interval 2
}

vrrp_instance haproxy-vip {
    interface ens33
    state MASTER
    priority 200
    virtual_router_id 51

    virtual_ipaddress {
        192.168.48.200/24
    }

    track_script {
        chk_haproxy
    }
}
EOF
```

Node: [Server2]

```shell
sudo tee /etc/keepalived/keepalived.conf > /dev/null <<'EOF'
global_defs {
    enable_script_security
    script_user root
}

vrrp_script chk_haproxy {
    script 'killall -0 haproxy'
    interval 2
}

vrrp_instance haproxy-vip {
    interface ens33
    state BACKUP
    priority 150
    virtual_router_id 51

    virtual_ipaddress {
        192.168.48.200/24
    }

    track_script {
        chk_haproxy
    }
}
EOF
```

Node: [Server3]

```shell
sudo tee /etc/keepalived/keepalived.conf > /dev/null <<'EOF'
global_defs {
    enable_script_security
    script_user root
}

vrrp_script chk_haproxy {
    script 'killall -0 haproxy'
    interval 2
}

vrrp_instance haproxy-vip {
    interface ens33
    state BACKUP
    priority 100
    virtual_router_id 51

    virtual_ipaddress {
        192.168.48.200/24
    }

    track_script {
        chk_haproxy
    }
}
EOF
```

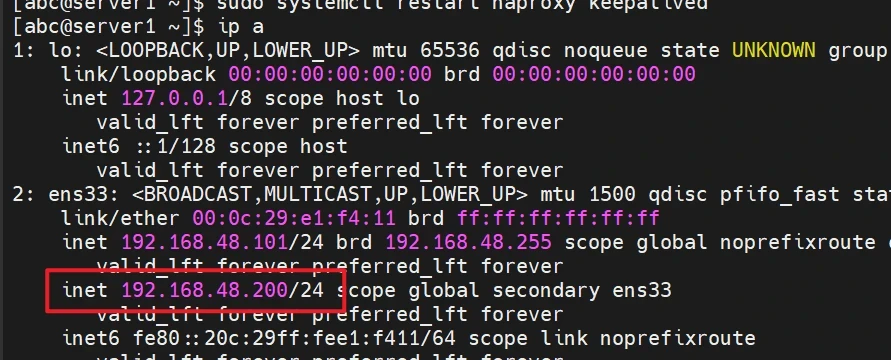

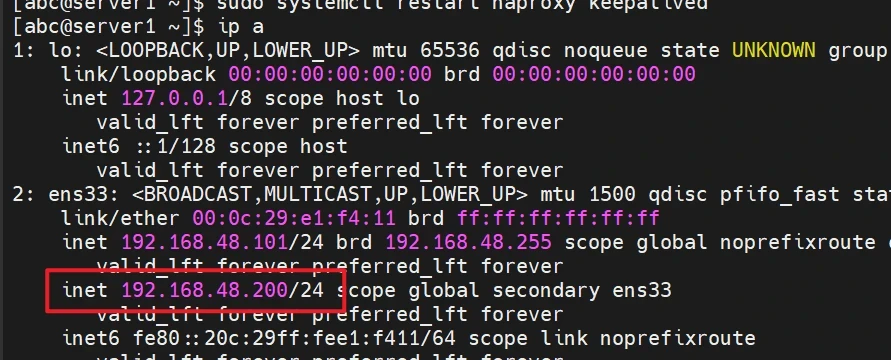

Nodes: [all servers]

```shell
sudo systemctl restart haproxy keepalived
sudo systemctl enable --now haproxy keepalived
```

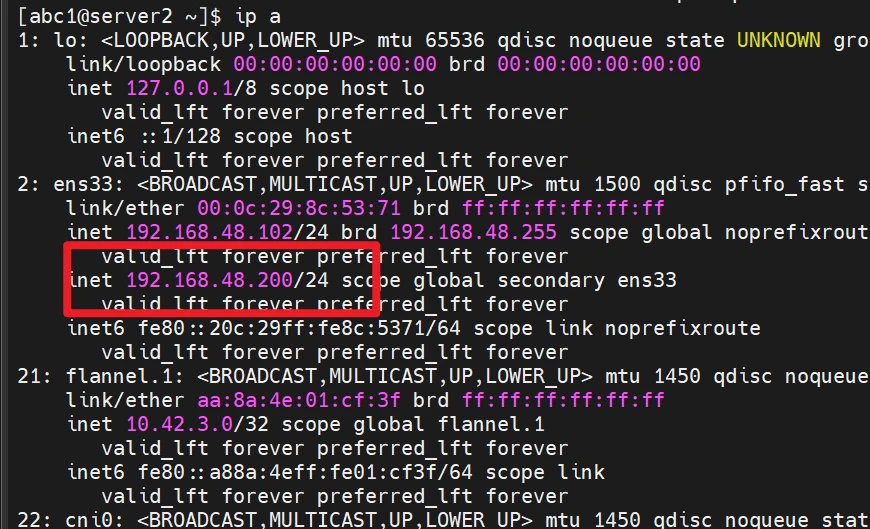

Now check whether the VIP has been created.

Node: [Server1]

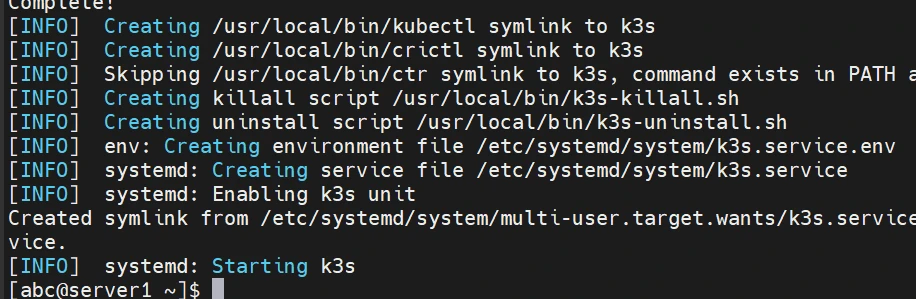
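The check can also be scripted. As a sketch, the function below looks for the VIP in the one-line-per-address output of `ip -4 -o addr show`; `has_vip` is a hypothetical helper name, and `ens33` and `192.168.48.200` are the interface and VIP from the keepalived configuration above.

```shell
# Hypothetical helper: succeed if the IPv4 address in $1 appears in the
# `ip -4 -o addr show` output read from stdin.
has_vip() {
    grep -qF "inet $1/"
}
```

For example: `ip -4 -o addr show dev ens33 | has_vip 192.168.48.200 && echo "VIP is on this node"`. Only the node currently holding the MASTER role should report it.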

Initializing the first server

Node: [Server1]

Use the command that matches the container runtime you chose.

With Docker

If you mistype a parameter value, correct it and rerun the command.

`--docker` must come before all other flags.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_TOKEN=qianyiosQianyios12345 \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh server --docker --cluster-init \
--system-default-registry "registry.cn-hangzhou.aliyuncs.com" \
--tls-san 192.168.48.200
```

`qianyiosQianyios12345` is the shared secret for the cluster; choose your own value.

Then complete "9. Fixing 'command not found' for kubectl and similar commands as a non-root user".

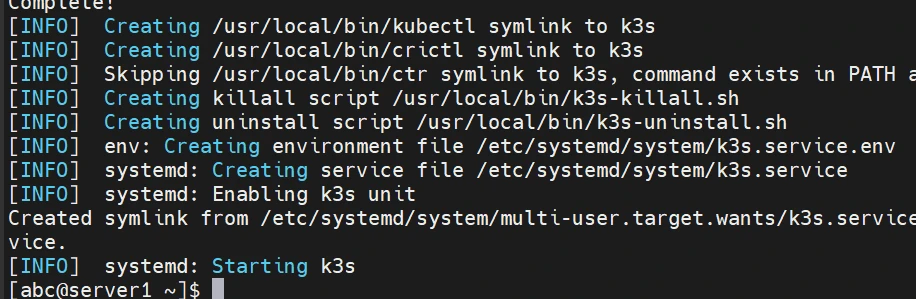

With containerd

If you mistype a parameter value, correct it and rerun the command.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_TOKEN=qianyiosQianyios12345 \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh server --cluster-init \
--system-default-registry "registry.cn-hangzhou.aliyuncs.com" \
--tls-san 192.168.48.200
```

`qianyiosQianyios12345` is the shared secret for the cluster; choose your own value.

Then complete "9. Fixing 'command not found' for kubectl and similar commands as a non-root user".

Joining the other servers to the cluster

With Docker

If you mistype a parameter value, correct it and rerun the command.

`--docker` must come before all other flags.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_TOKEN=qianyiosQianyios12345 \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh --docker \
--server https://192.168.48.101:6443 \
--system-default-registry "registry.cn-hangzhou.aliyuncs.com" \
--tls-san 192.168.48.200
```

`qianyiosQianyios12345` is the shared secret that was set on the first server (Server1).

In `--server https://192.168.48.101:6443`, replace the address with the IP of your Server1.

Then complete "9. Fixing 'command not found' for kubectl and similar commands as a non-root user".

With containerd

If you mistype a parameter value, correct it and rerun the command.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_TOKEN=qianyiosQianyios12345 \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh \
--system-default-registry "registry.cn-hangzhou.aliyuncs.com" \
--server https://192.168.48.101:6443 \
--tls-san 192.168.48.200
```

`qianyiosQianyios12345` is the shared secret that was set on the first server (Server1).

In `--server https://192.168.48.101:6443`, replace the address with the IP of your Server1.

Then complete "9. Fixing 'command not found' for kubectl and similar commands as a non-root user".

Joining agents to the cluster

With Docker

If you mistype a parameter value, correct it and rerun the command.

`--docker` must come before all other flags.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_TOKEN=qianyiosQianyios12345 \
K3S_URL=https://192.168.48.101:6443 \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh agent --docker
```

With containerd

If you mistype a parameter value, correct it and rerun the command.

```shell
sudo \
INSTALL_K3S_MIRROR=cn \
K3S_TOKEN=qianyiosQianyios12345 \
K3S_URL=https://192.168.48.101:6443 \
INSTALL_K3S_REGISTRIES="https://registry.cn-hangzhou.aliyuncs.com,https://registry.aliyuncs.com/google_containers" \
./k3s-install.sh agent
```

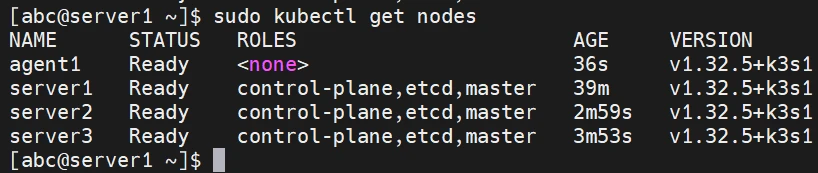

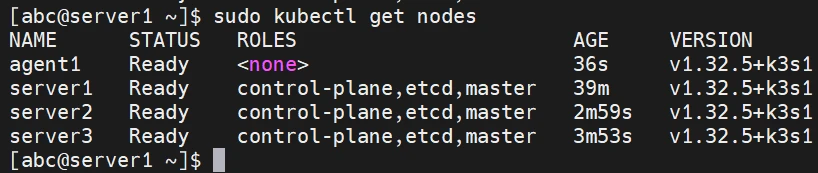

At this point you can check the node status from any server, for example with `sudo kubectl get nodes`.

Installing the dashboard

This works for both the basic and the high-availability k3s setup.

Node: [Server1]

```shell
sudo wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml
# Pull both images from the Aliyun mirror
sudo sed -i 's/kubernetesui\/dashboard:v2.7.0/registry.cn-hangzhou.aliyuncs.com\/qianyios\/dashboard:v2.7.0/g' recommended.yaml
sudo sed -i 's/kubernetesui\/metrics-scraper:v1.0.8/registry.cn-hangzhou.aliyuncs.com\/qianyios\/metrics-scraper:v1.0.8/g' recommended.yaml
# Expose the dashboard Service as NodePort 30001 (the leading spaces are the YAML indentation)
sudo sed -i '/targetPort: 8443/a\      nodePort: 30001' recommended.yaml
sudo sed -i '/nodePort: 30001/a\  type: NodePort' recommended.yaml
```

Start the pods:

```shell
sudo kubectl apply -f recommended.yaml
```
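The manifest edits above publish the dashboard Service on NodePort 30001, so it should be reachable over HTTPS on the address of any node (or on the VIP in the high-availability setup). A tiny sketch that builds the URL; `dashboard_url` is a hypothetical helper name.

```shell
# Hypothetical helper: build the dashboard URL for a node or VIP address.
# 30001 is the NodePort set by the sed edits to recommended.yaml.
dashboard_url() {
    printf 'https://%s:30001\n' "$1"
}
```

For example, `dashboard_url 192.168.48.101` prints the address to open in a browser for Server1.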

创建token

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

|

sudo kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

sudo kubectl create clusterrolebinding dashboard-admin \

--clusterrole=cluster-admin \

--serviceaccount=kubernetes-dashboard:dashboard-admin

sudo kubectl create token dashboard-admin -n kubernetes-dashboard

sudo cat <<EOF | sudo kubectl apply -f -

apiVersion: v1

kind: Secret

metadata:

name: dashboard-admin-token

namespace: kubernetes-dashboard

annotations:

kubernetes.io/service-account.name: dashboard-admin

type: kubernetes.io/service-account-token

EOF

KUBECONFIG_FILE="dashboard-kubeconfig.yaml"

APISERVER=$(sudo kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}')

CA_CERT=$(sudo kubectl config view --raw -o jsonpath='{.clusters[0].cluster.certificate-authority-data}')

TOKEN=$(sudo kubectl get secret dashboard-admin-token -n kubernetes-dashboard -o jsonpath='{.data.token}' | base64 --decode)

sudo cat <<EOF > ${KUBECONFIG_FILE}

apiVersion: v1

kind: Config

clusters:

- name: kubernetes

cluster:

server: ${APISERVER}

certificate-authority-data: ${CA_CERT}

users:

- name: dashboard-admin

user:

token: ${TOKEN}

contexts:

- name: dashboard-context

context:

cluster: kubernetes

user: dashboard-admin

current-context: dashboard-context

EOF

echo "✅ kubeconfig 文件已生成:${KUBECONFIG_FILE}"

|

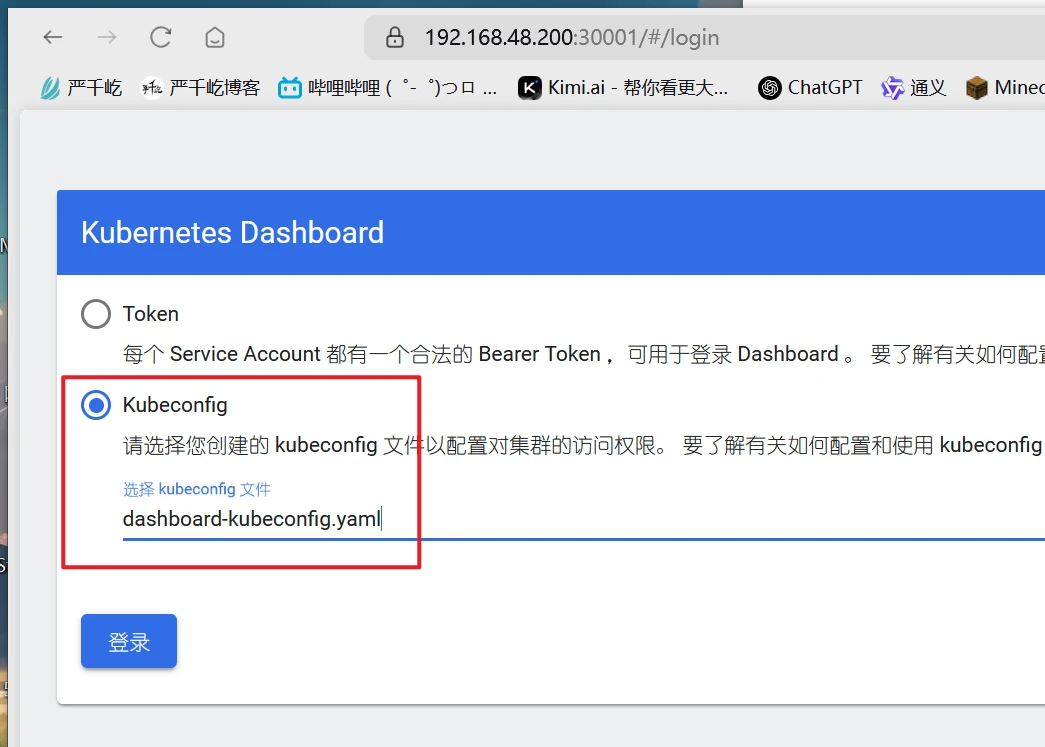

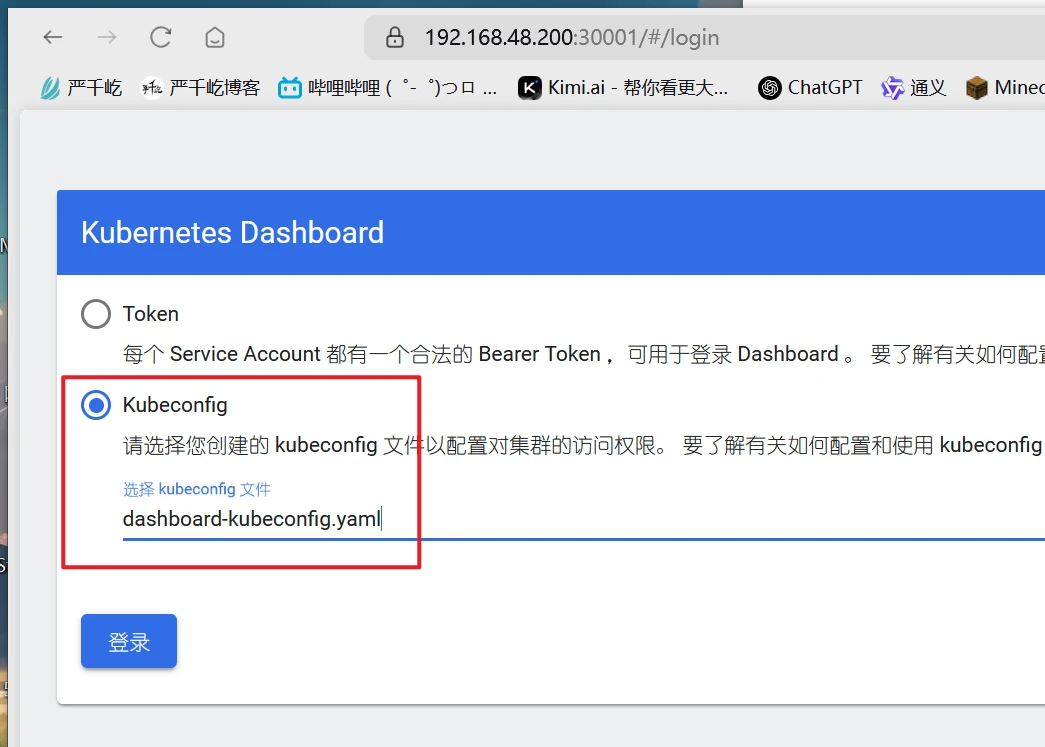

这时候就会提示你

✅ kubeconfig 文件已生成:dashboard-kubeconfig.yaml

你就把这个文件上传到dashboard的kubeconfig就可以免密登入了

这个192.168.48.200是高可用地址

High-availability failover test

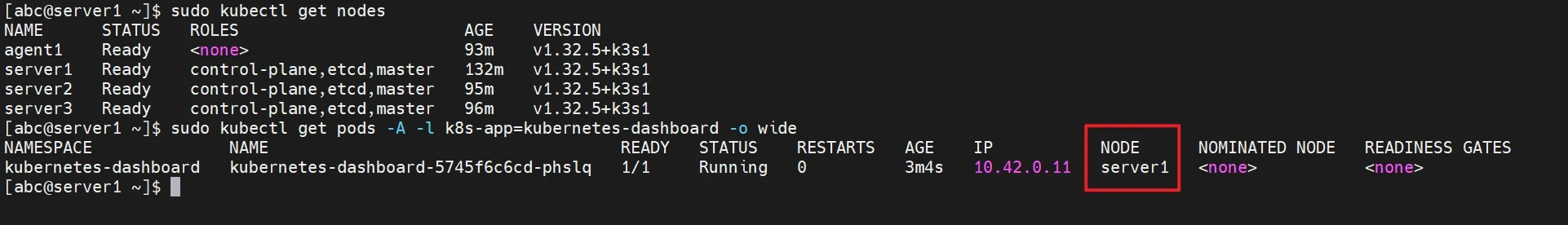

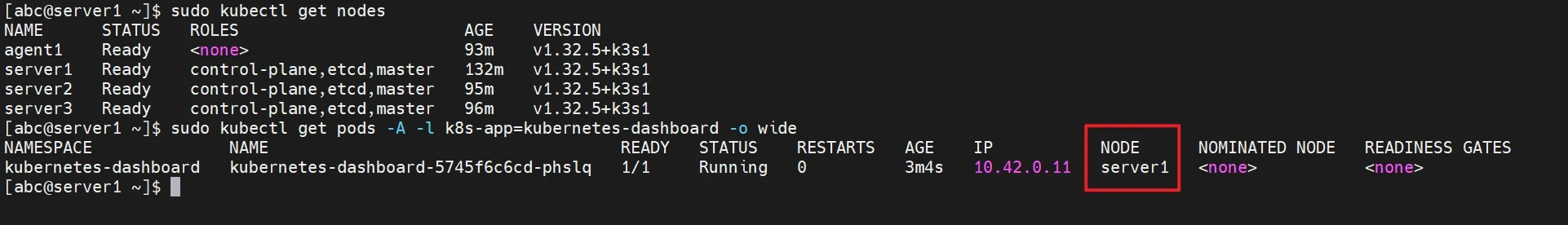

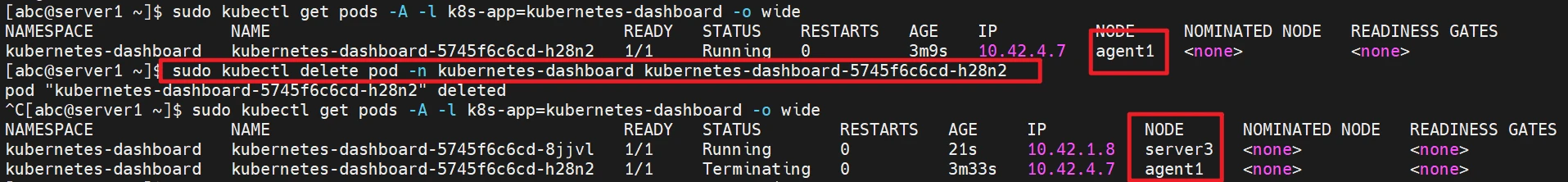

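Find out which node the dashboard is running on:

```shell
sudo kubectl get pods -A -l k8s-app=kubernetes-dashboard -o wide
sudo kubectl get nodes
```

In my case the dashboard was running on Server1.

So shut Server1 down with `poweroff` to simulate a failure and see whether k3s reschedules the dashboard onto another node.

At first, however, the pod still showed Running and Server1 still showed Ready.

A little later the powered-off node is marked NotReady.

This means Kubernetes has detected that the node is unavailable (powered off, unreachable over the network, kubelet crashed, and so on), but:

- With a single replica, no replacement Pod is created until the old Pod has been evicted from the failed node.

- The default toleration for a lost node is long (5 minutes), so a NotReady node does not trigger Pod eviction right away.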

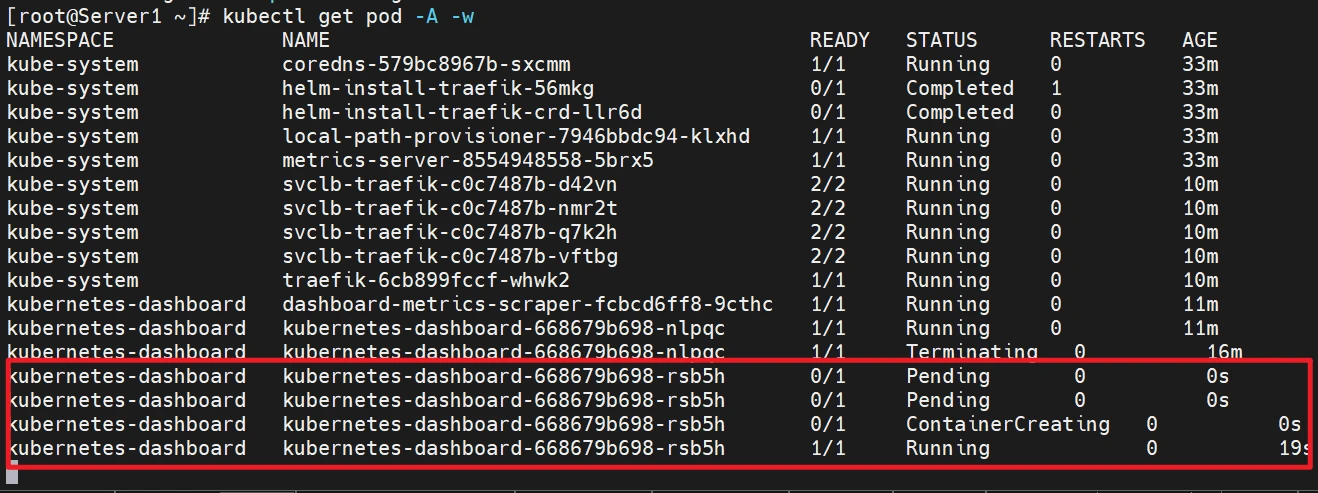

Option 1: wait five minutes

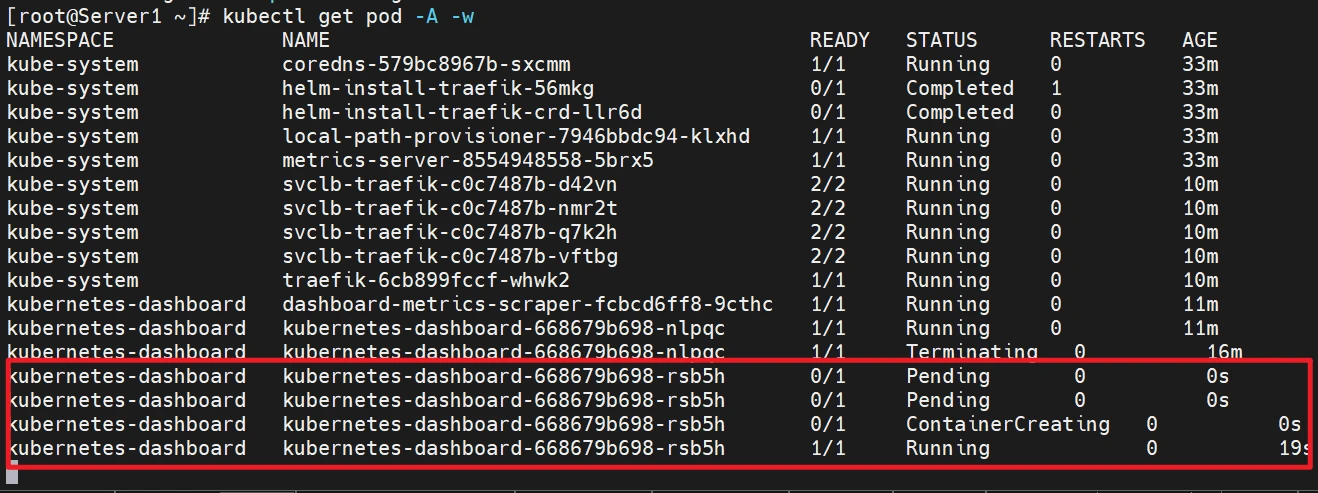

After a long wait, the dashboard pod was rescheduled:

```shell
kubectl get pods -A -l k8s-app=kubernetes-dashboard -o wide
```

The output shows that it is now running on Agent1.

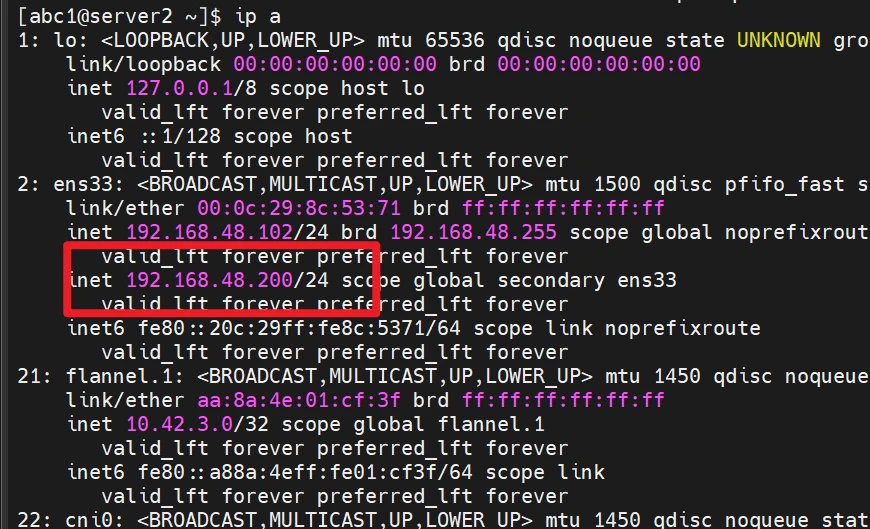

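In addition, the high-availability IP that was on Server1 has now moved to Server2, and it can still be used to reach every pod in k3s.

Conclusion: the high-availability test succeeded and the page is still reachable.

Option 2: delete the Pod manually to force a rebuild (recommended for testing)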

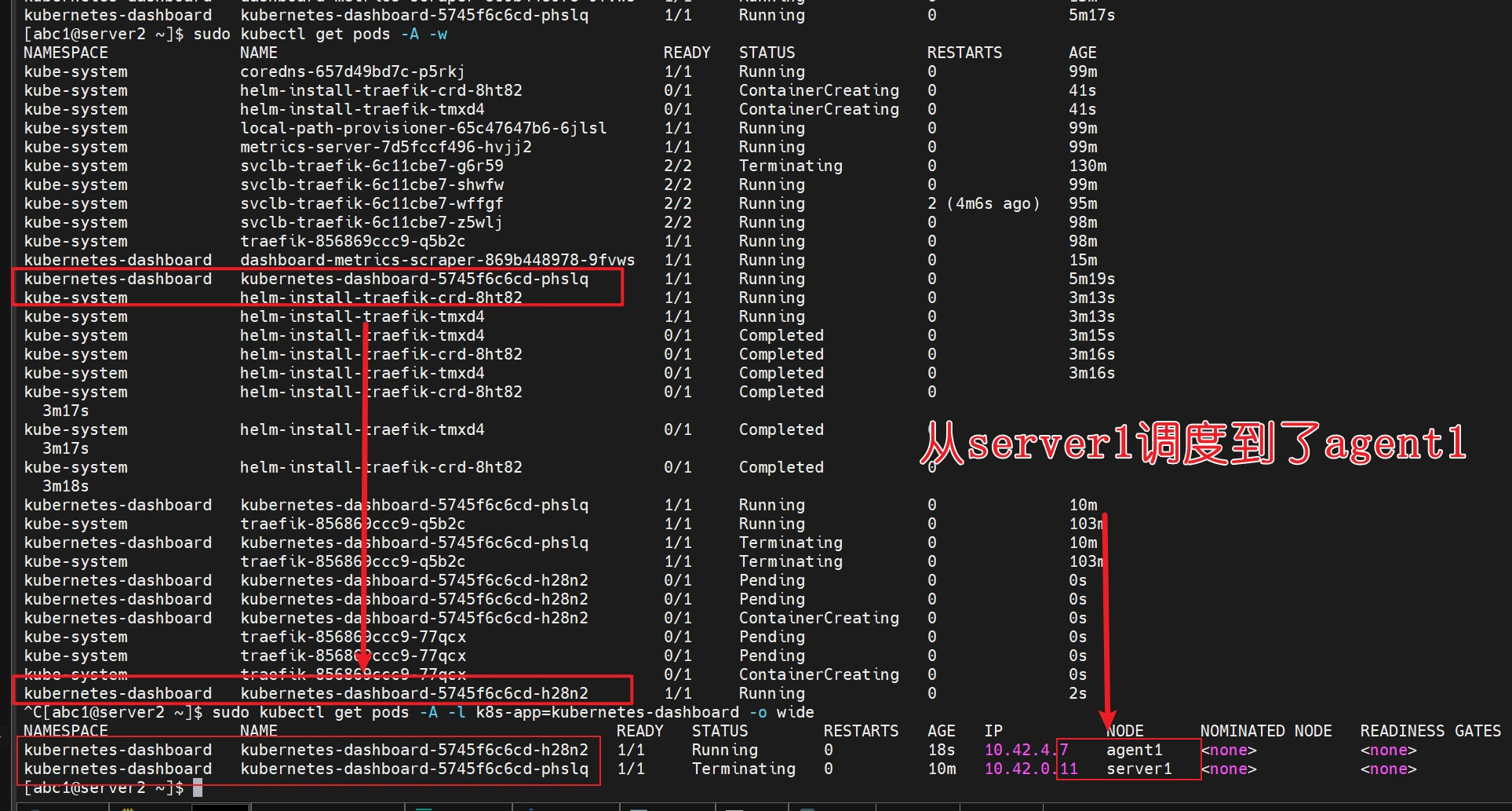

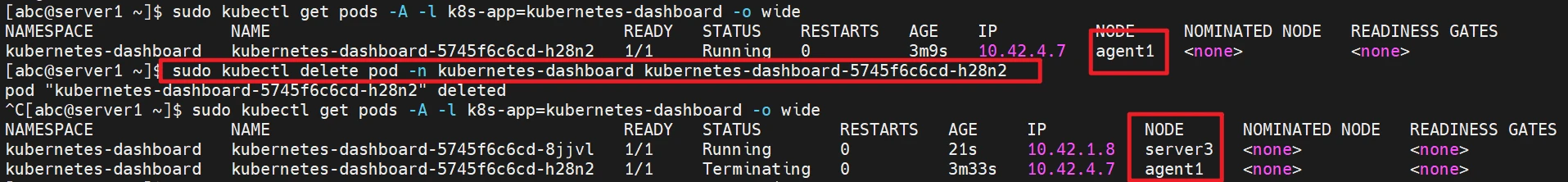

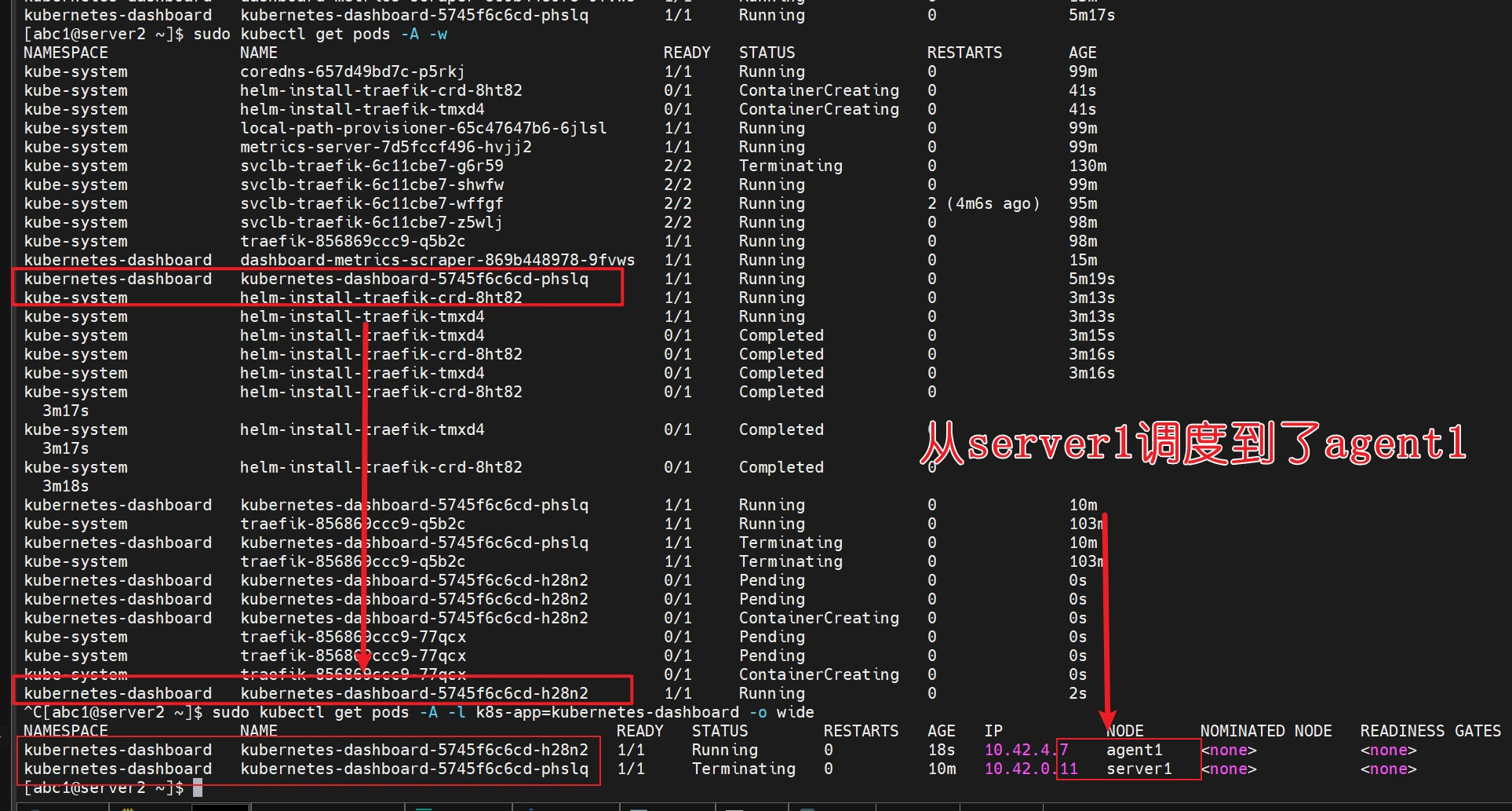

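After the Option 1 test the pod was running on Agent1, so this time simulate a failure of Agent1 and then delete the pod manually:

```shell
sudo kubectl delete pod -n kubernetes-dashboard <pod-name>
```

Deleting the pod triggers rescheduling immediately; it was moved to Server3.

Conclusion: the high-availability test succeeded and the page is still reachable.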

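Option 3: shorten the node failure toleration time (for production environments)

To make Kubernetes react to node failures faster, add the following to the K3s server startup arguments. These are settings of upstream Kubernetes components, so K3s takes them through its `--kube-controller-manager-arg` and `--kube-apiserver-arg` flags. The two apiserver toleration settings take the place of `--pod-eviction-timeout`, which recent Kubernetes releases no longer accept:

```shell
--kube-controller-manager-arg=node-monitor-grace-period=20s \
--kube-apiserver-arg=default-not-ready-toleration-seconds=30 \
--kube-apiserver-arg=default-unreachable-toleration-seconds=30
```

⚠️ Note: this changes the behavior of the whole cluster. It is meant for production environments or other cases that need fast failure recovery.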
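As a rough guide to what these numbers mean, the delay before pods leave a dead node is approximately the time to notice the failure plus the time pods are allowed to stay afterwards. The sketch below adds the two; `failover_delay` is a hypothetical helper name, and the result is an estimate that ignores scheduling and image-pull time.

```shell
# Hypothetical helper: approximate seconds between a node failing and its
# pods being evicted.
# $1 = node monitor grace period (s), $2 = eviction/toleration period (s)
failover_delay() {
    echo $(( $1 + $2 ))
}
```

With the 20-second grace period and 30-second eviction setting from this option, `failover_delay 20 30` gives 50 seconds, compared with well over five minutes when the default 300-second toleration applies.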

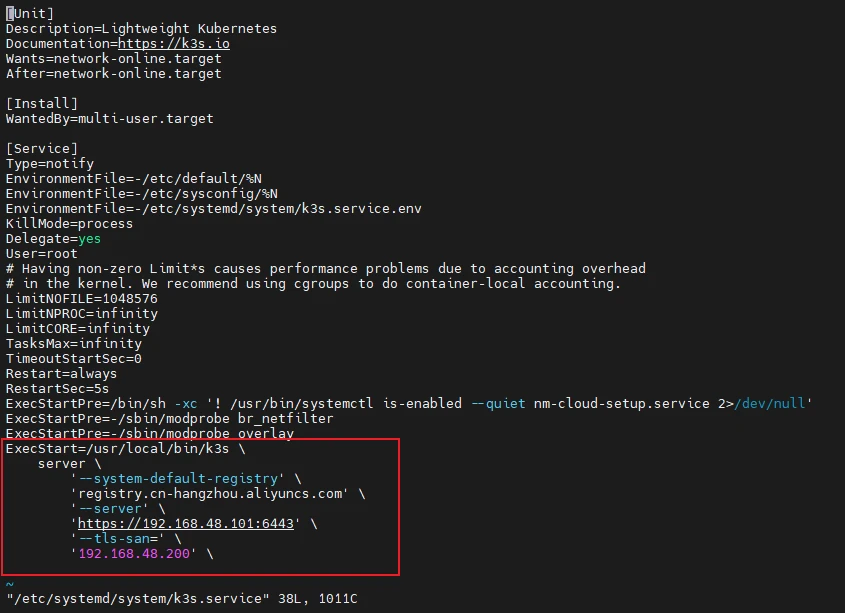

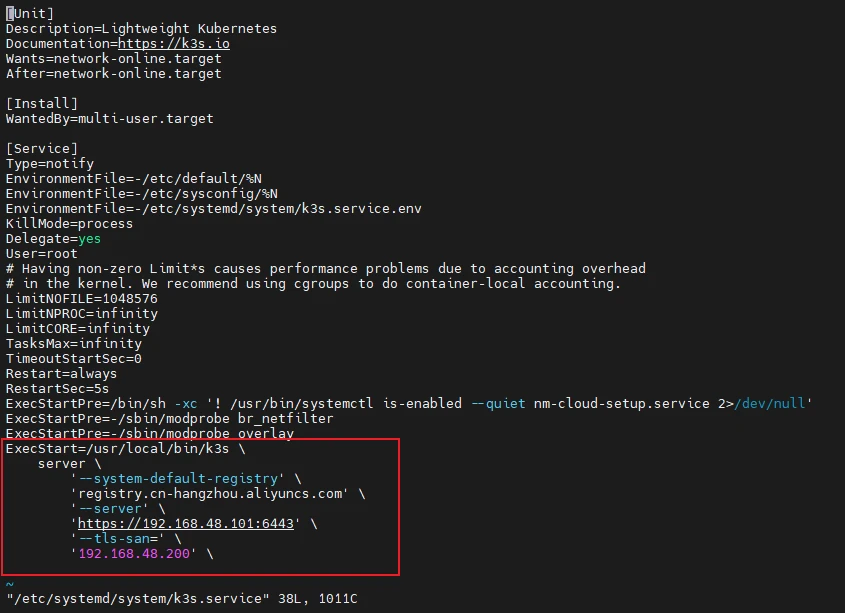

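Changing startup arguments

If you mistyped an argument during installation, or simply want to change one, you can do it here.

First, stop the K3s service so that nothing conflicts during the update: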
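The stop command itself is not shown in the original. A minimal sketch, assuming the systemd unit names created by the k3s install script (`k3s` on servers, `k3s-agent` on agents); `k3s_unit` is a hypothetical helper name.

```shell
# Hypothetical helper: map a node role to the systemd unit that the k3s
# install script creates for it.
k3s_unit() {
    case "$1" in
        server) echo k3s ;;
        agent)  echo k3s-agent ;;
        *)      echo "unknown role: $1" >&2; return 1 ;;
    esac
}
```

On a server node the service would then be stopped with `sudo systemctl stop "$(k3s_unit server)"`.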

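Edit the k3s startup arguments:

```shell
sudo vi /etc/systemd/system/k3s.service
```

Suppose the high-availability address given to `--tls-san` was wrong: change it to the correct one and save the file.

Then delete the old certificates:

```shell
sudo rm -f /var/lib/rancher/k3s/server/tls/serving-kube-apiserver*
sudo rm -f /var/lib/rancher/k3s/server/tls/server*
```

Restart the service:

```shell
sudo systemctl daemon-reload
sudo systemctl start k3s
```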

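Uninstalling K3s

Official guide: Uninstalling K3s | K3s

Uninstalling a server

To uninstall K3s from a server node, run:

```shell
/usr/local/bin/k3s-uninstall.sh
```

Uninstalling an agent

To uninstall K3s from an agent node, run:

```shell
/usr/local/bin/k3s-agent-uninstall.sh
```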

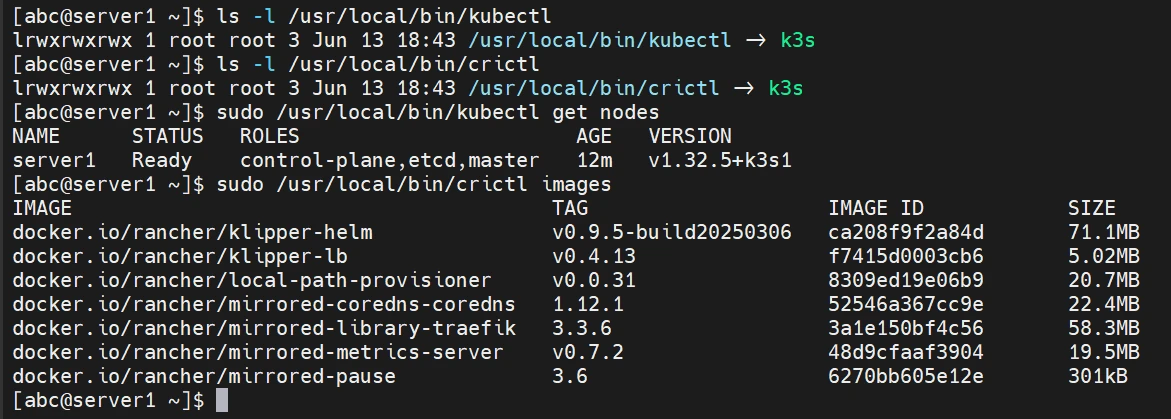

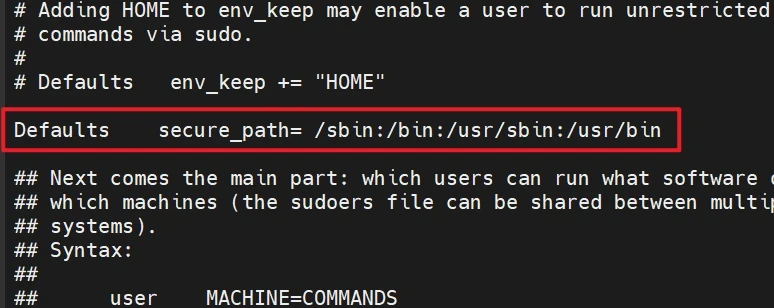

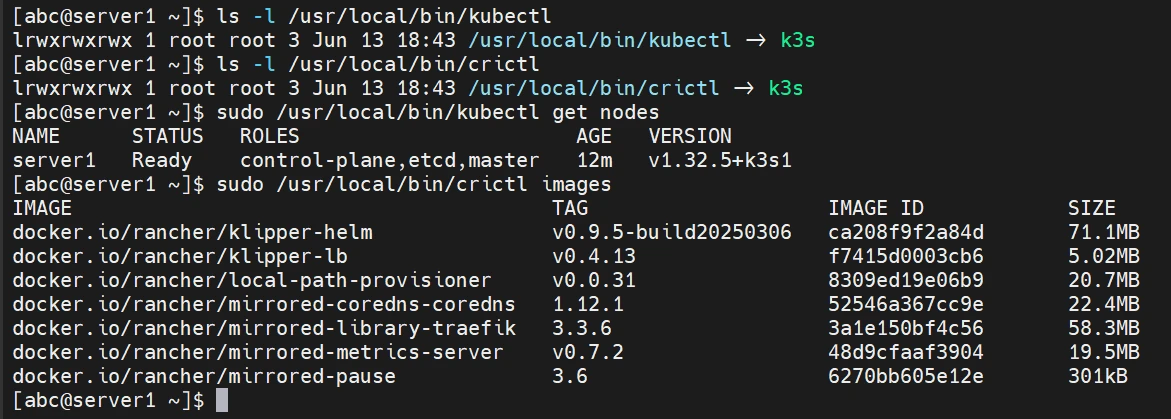

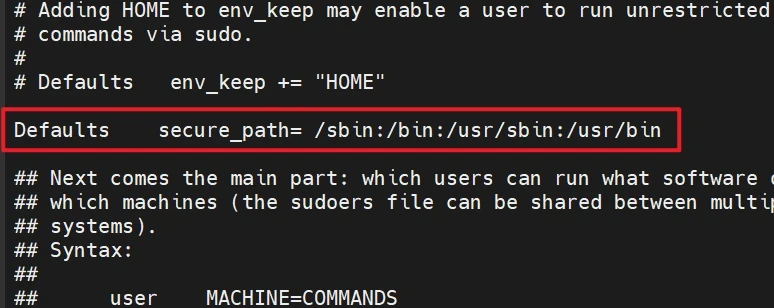

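Fixing "command not found" for kubectl and similar commands as a non-root user

Running the command that lists the nodes now reports that the command cannot be found.

It turns out the command only runs when called by its full path, and the binary's owner and group are both root.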

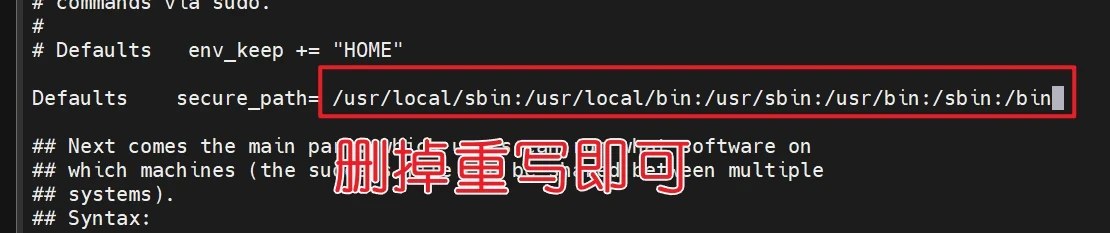

Now open visudo as the normal user.

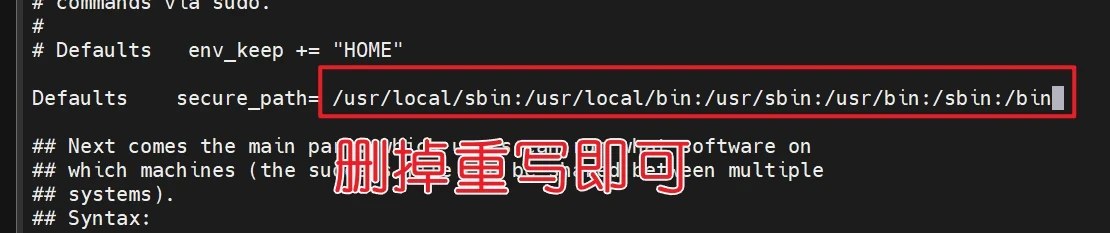

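The path listed there (the sudoers `secure_path`) does not include /usr/local/bin, so commands run through sudo by a normal user do not pick up root's path. Set the default path to the value below. In production, weigh carefully whether to add it; without it you have to use absolute paths.

```shell
/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
```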
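Whether a given directory is already covered can be checked mechanically. A small sketch that tests a colon-separated path list for one entry; `path_has` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if directory $1 is an entry of the
# colon-separated path list $2.
path_has() {
    case ":$2:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}
```

For example, `path_has /usr/local/bin "/sbin:/bin:/usr/sbin:/usr/bin"` fails, while the same check against the value shown above succeeds.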

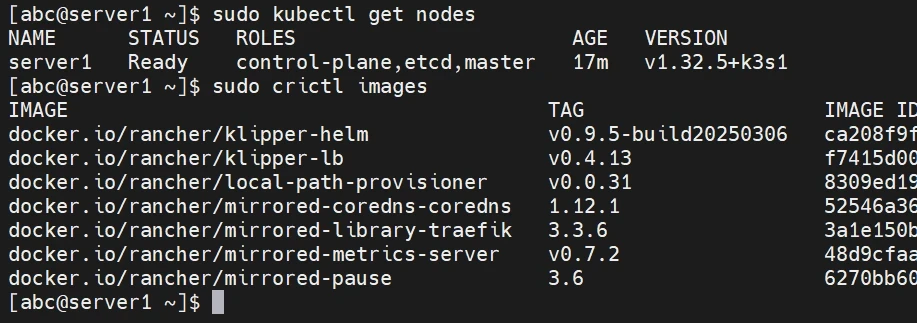

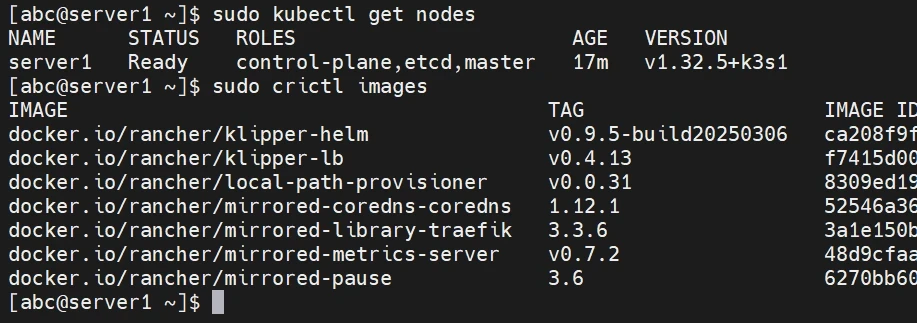

Now run the k3s commands again:

```shell
sudo kubectl get nodes
sudo crictl images
```

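Every article on the Qianyi blog (千屹博客) is carefully compiled from my own coursework and self-study.

Mistakes are inevitable.

If you spot one, tell me in the comments below or send me a private message.

Thank you for your support!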