OpenStack Antelope

This document is an OpenStack deployment guide based on openEuler 24.03 LTS.

Image download: openEuler 24.03 LTS

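Host topology

| Hostname | IP | Disk 1 | Disk 2 | CPU | Memory |
|---|---|---|---|---|---|
| controller | 192.168.48.101 | 100G | | 4 | 7G |
| compute | 192.168.48.102 | 100G | | 4 | 4G |
| storage | 192.168.48.103 | 100G | 100G | 4 | 2G |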

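The services installed on each node are:

| Node | OpenStack services |
|---|---|
| Controller node | MariaDB RabbitMQ Memcache Keystone Placement Glance Nova Neutron Cinder Horizon Heat |
| Compute node | Nova Neutron |
| Block storage node | Cinder Swift |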

Prerequisites

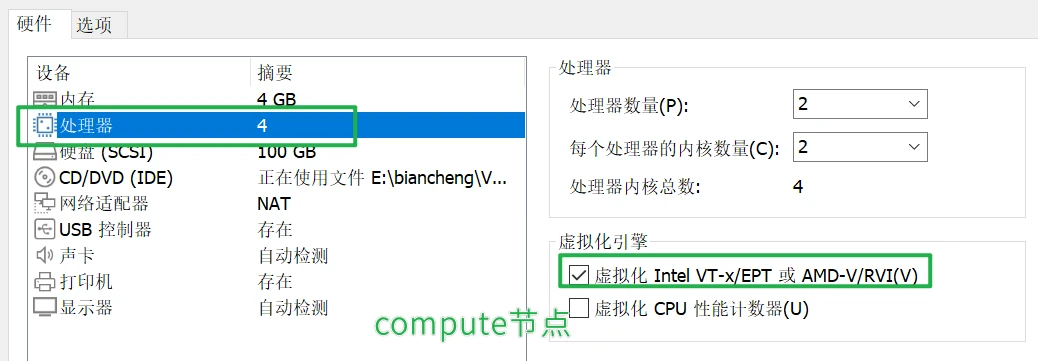

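Enable hardware virtualization acceleration on compute (x86_64)

Memory and disk settings on every node must match mine

Security

Physical node shutdown order

Add the following two scripts to every machine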

    cat > stop.sh << EOF
    #!/bin/bash
    # Shut down all OpenStack services on this node
    # Order: compute services, network services, control services

    # Stop every running instance first
    for server in \$(openstack server list -f value -c ID); do
        openstack server stop \$server
    done

    # Stop compute services
    echo "Stopping compute services..."
    systemctl stop openstack-nova-compute.service
    systemctl stop libvirtd.service

    # Stop network services
    echo "Stopping network services..."
    systemctl stop openvswitch.service
    systemctl stop neutron-server.service
    systemctl stop neutron-linuxbridge-agent.service
    systemctl stop neutron-dhcp-agent.service
    systemctl stop neutron-metadata-agent.service
    systemctl stop neutron-l3-agent.service

    # Stop control services
    echo "Stopping control services..."
    systemctl stop mariadb.service
    systemctl stop rabbitmq-server.service
    systemctl stop memcached.service
    systemctl stop httpd.service
    systemctl stop openstack-glance-api.service
    systemctl stop openstack-glance-registry.service
    systemctl stop openstack-cinder-api.service
    systemctl stop openstack-cinder-scheduler.service
    systemctl stop openstack-cinder-volume.service
    systemctl stop openstack-nova-api.service
    systemctl stop openstack-nova-scheduler.service
    systemctl stop openstack-nova-conductor.service
    systemctl stop openstack-nova-novncproxy.service
    systemctl stop openstack-nova-consoleauth.service
    systemctl stop openstack-keystone.service
    systemctl stop openstack-heat-api.service
    systemctl stop openstack-heat-api-cfn.service
    systemctl stop openstack-heat-engine.service
    systemctl stop openstack-swift-proxy.service
    systemctl stop openstack-swift-account.service
    systemctl stop openstack-swift-container.service
    systemctl stop openstack-swift-object.service

    # Power off; this also stops whatever is still running
    # (a bare "systemctl stop --all" is rejected because it names no unit)
    echo "Shutting down the system..."
    poweroff
    EOF

    cat > start.sh << EOF
    #!/bin/bash
    # Restart the OpenStack services on this node
    # Order: control services, network services, compute services

    # Start control services
    echo "Starting control services..."
    systemctl start mariadb.service
    systemctl start rabbitmq-server.service
    systemctl start memcached.service
    systemctl start httpd.service
    systemctl start openstack-glance-api.service
    systemctl start openstack-glance-registry.service
    systemctl start openstack-cinder-api.service
    systemctl start openstack-cinder-scheduler.service
    systemctl start openstack-cinder-volume.service
    systemctl start openstack-nova-api.service
    systemctl start openstack-nova-scheduler.service
    systemctl start openstack-nova-conductor.service
    systemctl start openstack-nova-novncproxy.service
    systemctl start openstack-nova-consoleauth.service
    systemctl start openstack-keystone.service
    systemctl start openstack-heat-api.service
    systemctl start openstack-heat-api-cfn.service
    systemctl start openstack-heat-engine.service
    systemctl start openstack-swift-proxy.service
    systemctl start openstack-swift-account.service
    systemctl start openstack-swift-container.service
    systemctl start openstack-swift-object.service

    # Start network services
    echo "Starting network services..."
    systemctl start openvswitch.service
    systemctl start neutron-server.service
    systemctl start neutron-linuxbridge-agent.service
    systemctl start neutron-dhcp-agent.service
    systemctl start neutron-metadata-agent.service
    systemctl start neutron-l3-agent.service

    # Start compute services
    echo "Starting compute services..."
    systemctl start libvirtd.service
    systemctl start openstack-nova-compute.service
    EOF

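(Run it on the compute and storage nodes first. Wait until those two nodes have shut down completely, then run it on the controller node.)

When shutting down a physical machine, run `sh stop.sh`. It may report that some services cannot be found; those errors are harmless. If it reports that a service cannot come up, you will have to track down the error yourself. Normally there is no problem.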
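The shutdown order described above can be sketched as a small helper. This is only an illustration: `shutdown_order` and `shutdown_cluster` are hypothetical names, and the driver assumes that passwordless SSH is already set up and that `stop.sh` lives in `/root` on every node.

```shell
#!/bin/bash
# Sketch: compute and storage go down first, controller goes down last.
# Assumption: host names are controller, compute and storage.

# Print every host except the controller, then the controller.
shutdown_order() {
    local host
    for host in "$@"; do
        if [ "$host" != "controller" ]; then echo "$host"; fi
    done
    for host in "$@"; do
        if [ "$host" = "controller" ]; then echo "$host"; fi
    done
}

# Hypothetical driver (defined only, never called here):
# run stop.sh on each node in order and wait until the node is really off.
shutdown_cluster() {
    local host
    for host in $(shutdown_order "$@"); do
        echo "Shutting down $host ..."
        # The SSH session drops when the node powers off, so ignore its status
        ssh "$host" "sh /root/stop.sh" || true
        # Wait until the node stops answering ping before moving on
        while ping -c 1 -W 2 "$host" > /dev/null 2>&1; do sleep 5; done
    done
}
```

Calling `shutdown_cluster controller compute storage` from the controller would then walk the nodes in the required order.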

Physical node startup order

Power on controller first, then power on the remaining nodes.
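One way to make sure the controller is really ready before the other nodes start their services is to wait for its ports. The sketch below is an assumption-laden illustration: `port_open` and `wait_for_controller` are hypothetical helpers, and the port list (MariaDB 3306, RabbitMQ 5672, memcached 11211, Keystone 5000) reflects the default ports used in this guide.

```shell
#!/bin/bash
# Return 0 if a TCP connection to host ($1) and port ($2) succeeds within 2 seconds.
# Uses bash's built-in /dev/tcp redirection, so no extra tools are needed.
port_open() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2> /dev/null
}

# Block until the core controller services accept connections.
wait_for_controller() {
    local host="$1" port
    for port in 3306 5672 11211 5000; do
        until port_open "$host" "$port"; do
            echo "Waiting for $host:$port ..."
            sleep 5
        done
    done
}
```

On compute or storage, `wait_for_controller controller` could be run before starting the local OpenStack services.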

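Basic account information

Every OpenStack component needs its own account registered in the database on the controller node to store its data, so each one needs a password. It is strongly recommended that the component passwords and the host passwords all differ from one another.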

| OpenStack component | Password |
|---|---|
| Controller node root | Lj201840. |
| Compute node root | Lj201840. |
| Metadata secret | METADATA_SECRET |
| MariaDB root account | MARIADB_PASS |
| RabbitMQ service | RABBIT_PASS |
| OpenStack admin | ADMIN_PASS |
| Placement service | PLACEMENT_PASS |
| Keystone database | KEYSTONE_DBPASS |
| Glance service | GLANCE_PASS |
| Glance database | GLANCE_DBPASS |
| Nova service | NOVA_PASS |
| Nova database | NOVA_DBPASS |
| Neutron service | NEUTRON_PASS |
| Neutron database | NEUTRON_DBPASS |
| Cinder service | CINDER_PASS |
| Cinder database | CINDER_DBPASS |
| Horizon database | DASH_DBPASS |
| Swift service | SWIFT_PASS |
| Heat service | HEAT_PASS |
| Heat database | HEAT_DBPASS |
| heat_domain_admin user | HEAT_DOMAIN_USER_PASS |
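Since every component should get a different password, it can help to generate them rather than invent them. The following is a minimal sketch, assuming random alphanumeric strings are acceptable; `gen_pass` is a hypothetical helper and only a few of the placeholder names are shown.

```shell
#!/bin/bash
# Generate a random alphanumeric password of the given length (default 16).
gen_pass() {
    local len="${1:-16}"
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$len"
}

# Print NAME=password lines for a few of the placeholders used in this guide.
for name in MARIADB_PASS RABBIT_PASS KEYSTONE_DBPASS GLANCE_PASS GLANCE_DBPASS; do
    echo "$name=$(gen_pass 16)"
done
```

Redirecting the output to a root-only file gives a record to consult while filling in the scripts.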

Test users

| User | Password |
|---|---|
| admin | 123456 |
| user_dog | 123456 |

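Basic configuration

Nodes: [all nodes]

Do not copy this blindly. Change the NIC name (mine is ens33) to your own, and change the IP prefix as well: mine is 192.168.48., so if your network is 172.8.3. use that instead. Leave the final host octet alone; everything else stays the same.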
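To find the values to substitute, the NIC name and IP prefix can be read from the node itself. This is a hedged sketch: `default_iface` and `ip_prefix` are hypothetical helpers, and it assumes the node already has a default route on the interface you intend to use.

```shell
#!/bin/bash
# Extract the interface name from one line of `ip -4 route show default` output,
# e.g. "default via 192.168.48.2 dev ens33 proto static metric 100".
default_iface() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Strip the host octet from an IPv4 address: 192.168.48.101 -> 192.168.48
ip_prefix() {
    echo "${1%.*}"
}

# Usage on a real node (not executed here):
#   ip -4 route show default | default_iface
#   ip_prefix 192.168.48.101
```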

    #!/bin/bash
    if [ $# -eq 2 ];then
      echo "Setting hostname to: $1"
      echo "Setting ens33 IP address to: 192.168.48.$2"
    else
      echo "Usage: sh $0 hostname host_octet"
      exit 2
    fi

    echo "--------------------------------------"
    echo "1. Setting hostname: $1"
    hostnamectl set-hostname $1

    echo "2. Disabling firewalld and SELinux"
    systemctl disable firewalld &> /dev/null
    systemctl stop firewalld
    sed -i "s#SELINUX=enforcing#SELINUX=disabled#g" /etc/selinux/config
    setenforce 0

    echo "3. Configuring ens33: 192.168.48.$2"
    cat > /etc/sysconfig/network-scripts/ifcfg-ens33 <<EOF
    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=static
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_FAILURE_FATAL=no
    NAME=ens33
    UUID=53b402ff-5865-47dd-a853-7afcd6521738
    DEVICE=ens33
    ONBOOT=yes
    IPADDR=192.168.48.$2
    GATEWAY=192.168.48.2
    PREFIX=24
    DNS1=192.168.48.2
    DNS2=114.114.114.114
    EOF
    nmcli c reload
    nmcli c up ens33

    echo "4. Switching to the Huawei Cloud mirror"
    mkdir -p /etc/yum.repos.d/bak/
    cp /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/
    sed -i 's/\$basearch/x86_64/g' /etc/yum.repos.d/openEuler.repo
    sed -i 's/http\:\/\/repo.openeuler.org/https\:\/\/mirrors.huaweicloud.com\/openeuler/g' /etc/yum.repos.d/openEuler.repo

    echo "5. Refreshing the yum package cache"
    yum clean all && yum makecache

    echo "6. Adding hosts entries"
    cat > /etc/hosts <<EOF
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.48.101   controller
    192.168.48.102   compute
    192.168.48.103   storage
    EOF

    echo "7. Installing chrony and synchronizing the time"
    yum install chrony -y
    systemctl enable chronyd --now
    timedatectl set-timezone Asia/Shanghai
    timedatectl set-local-rtc 1
    timedatectl set-ntp yes
    chronyc -a makestep
    chronyc tracking
    chronyc sources

    echo "8. Installing required tools"
    yum install wget psmisc vim net-tools telnet socat device-mapper-persistent-data lvm2 git -y

    echo "9. Installing the OpenStack release package"
    yum update -y
    yum install openstack-release-antelope -y

    echo "10. Rebooting"
    reboot

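Run it (the commands below assume the script above was saved as system_init.sh)

    # Usage: sh system_init.sh <hostname> <host octet>
    # [controller]
    sh system_init.sh controller 101
    # [compute]
    sh system_init.sh compute 102
    # [storage]
    sh system_init.sh storage 103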

Passwordless SSH

    yum install -y sshpass
    cat > sshmianmi.sh << "EOF"
    #!/bin/bash
    # Target hosts
    hosts=("controller" "compute" "storage")
    # Root password of the hosts
    password="Lj201840."
    # SSH client configuration file
    ssh_config="$HOME/.ssh/config"

    # Generate an SSH key pair without a passphrase
    ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa

    # Make sure the SSH configuration file exists with safe permissions
    mkdir -p "$HOME/.ssh"
    touch "$ssh_config"
    chmod 600 "$ssh_config"

    # Skip host key prompts for every host
    {
        echo "Host *"
        echo "    StrictHostKeyChecking no"
        echo "    UserKnownHostsFile /dev/null"
    } >> "$ssh_config"

    # Copy the public key to each host and test the login
    for host in "${hosts[@]}"
    do
        sshpass -p "$password" ssh-copy-id -o StrictHostKeyChecking=no "$host"
        sshpass -p "$password" ssh -o StrictHostKeyChecking=no "$host" "echo 'Passwordless login succeeded'"
    done
    EOF

    bash sshmianmi.sh

Dependency components

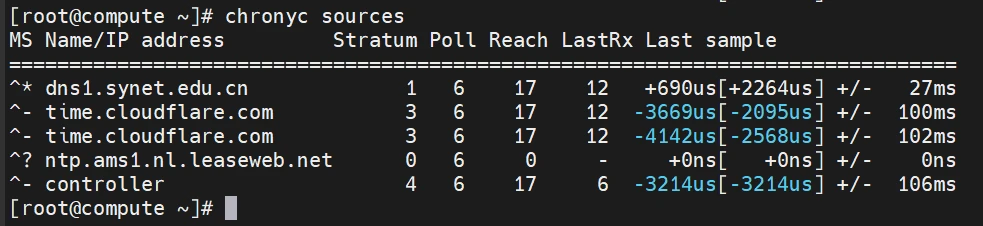

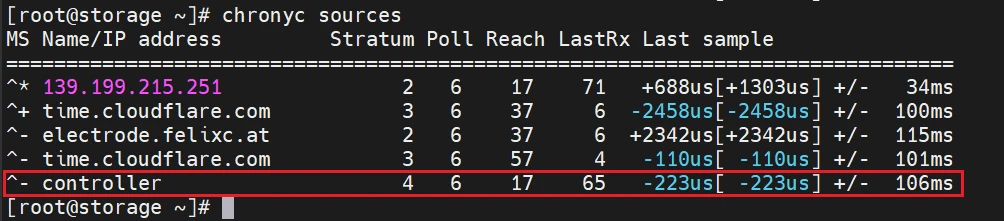

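Time synchronization

A cluster requires every node to keep the same time at all times, which is normally guaranteed by clock synchronization software. This guide uses chrony.

chrony was already installed during basic configuration, so it is not installed again here.

Nodes: [controller]

    echo "allow 192.168.48.0/24" | sudo tee -a /etc/chrony.conf > /dev/null
    systemctl restart chronyd

Nodes: [compute, storage]

    echo "server 192.168.48.101 iburst" | sudo tee -a /etc/chrony.conf > /dev/null
    systemctl restart chronyd

After configuration, check the result: run `chronyc sources` on each non-controller node. Output that lists controller as a source means the node is synchronizing its clock from controller.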
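The check can also be scripted. In `chronyc sources` output, the currently selected source is the line that starts with `^*`. The sketch below assumes that format; `synced_to` is a hypothetical helper, and the name to pass depends on whether chrony prints the host name or the IP address.

```shell
#!/bin/bash
# Read `chronyc sources` output on stdin and succeed only if the
# currently selected source (the line marked "^*") is the given host.
synced_to() {
    grep -Eq "^\^\*[[:space:]]+$1([[:space:]]|\$)"
}

# Usage on compute or storage (not executed here):
#   chronyc sources | synced_to controller && echo "synchronized from controller"
```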

Install the database

Nodes: [controller]

    dnf install mysql-config mariadb mariadb-server python3-PyMySQL -y
    cat > /etc/my.cnf.d/openstack.cnf << "EOF"
    [mysqld]
    bind-address = 192.168.48.101
    default-storage-engine = innodb
    innodb_file_per_table = on
    max_connections = 4096
    collation-server = utf8_general_ci
    character-set-server = utf8
    EOF
    systemctl enable --now mariadb

    # Initialize the database; answer the prompts as follows
    mysql_secure_installation
    #   Enter current password for root (enter for none): press Enter
    #   Switch to unix_socket authentication [Y/n] n
    #   Change the root password? [Y/n] y   (set it to MARIADB_PASS)
    #   Remove anonymous users? [Y/n] y
    #   Disallow root login remotely? [Y/n] Y
    #   Remove test database and access to it? [Y/n] y
    #   Reload privilege tables now? [Y/n] y

    # Verify the login
    mysql -u root -pMARIADB_PASS

Install the message queue

Nodes: [controller]

    dnf install rabbitmq-server -y
    systemctl enable --now rabbitmq-server
    rabbitmqctl add_user openstack RABBIT_PASS
    rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Install the cache service

Nodes: [controller]

controller is my hostname; replace it with yours.

    dnf install memcached python3-memcached -y
    sed -i "s/OPTIONS=\"-l 127.0.0.1,::1\"/OPTIONS=\"-l 127.0.0.1,::1,controller\"/g" /etc/sysconfig/memcached
    systemctl enable memcached --now

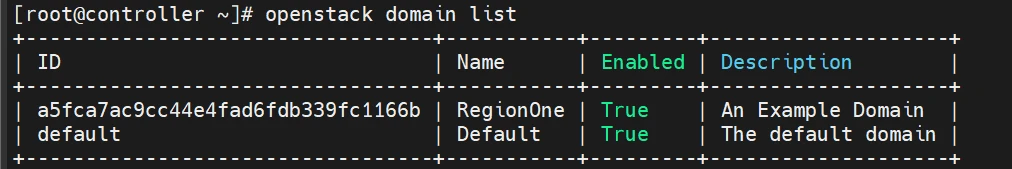

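Keystone

Keystone is OpenStack's identity service and the entry point to the whole of OpenStack. It provides tenant isolation, user authentication, service discovery, and related functions, and it must be installed.

Create a script. (From here on I won't explain this step again: create each script yourself, then run it. Watch the passwords and similar values in the scripts and change them to what you need.)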
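Changing the passwords in each script by hand is error-prone, so the substitution can be scripted. This is a minimal sketch: `set_secret` is a hypothetical helper, it relies on GNU `sed -i`, and it assumes the new value contains none of `|`, `&`, or `\`. Plain substring replacement is used on purpose, because a placeholder can appear glued to an option, as in `-pMARIADB_PASS`.

```shell
#!/bin/bash
# Replace every occurrence of a placeholder token in a file with a real value.
# Arguments: file, placeholder (for example KEYSTONE_DBPASS), value.
set_secret() {
    local file="$1" placeholder="$2" value="$3"
    sed -i "s|${placeholder}|${value}|g" "$file"
}

# Usage (not executed here):
#   set_secret keystone.sh MARIADB_PASS    'my-root-db-password'
#   set_secret keystone.sh KEYSTONE_DBPASS 'my-keystone-db-password'
```

This works because no placeholder name in the password table is a substring of another one.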

    mkdir openstack-install
    cd openstack-install
    vim keystone.sh

Add the following content:

    #!/bin/bash
    # Create the database and grant privileges
    echo "CREATE DATABASE keystone;" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';" | mysql -u root -pMARIADB_PASS
    mysql -u root -pMARIADB_PASS -e "FLUSH PRIVILEGES;"
    sleep 3

    # Install the packages
    dnf install openstack-keystone httpd mod_wsgi -y

    # Write the Keystone configuration
    mv /etc/keystone/keystone.conf{,.bak}
    cat > /etc/keystone/keystone.conf <<EOF
    [database]
    connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
    [token]
    provider = fernet
    EOF

    # Populate the database and list its tables
    su -s /bin/sh -c "keystone-manage db_sync" keystone
    echo "use keystone; show tables;" | mysql -u root -pMARIADB_PASS

    # Initialize the Fernet and credential key repositories
    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
    keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

    # Bootstrap the identity service
    keystone-manage bootstrap --bootstrap-password 123456 \
      --bootstrap-admin-url http://controller:5000/v3/ \
      --bootstrap-internal-url http://controller:5000/v3/ \
      --bootstrap-public-url http://controller:5000/v3/ \
      --bootstrap-region-id RegionOne

    # Configure and start the Apache HTTP server
    cp /etc/httpd/conf/httpd.conf{,.bak}
    sed -i "s/#ServerName www.example.com:80/ServerName controller/g" /etc/httpd/conf/httpd.conf
    ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/
    systemctl enable httpd --now

    # Environment file for the admin user
    cat << EOF > /root/admin-openrc
    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_NAME=admin
    export OS_USERNAME=admin
    export OS_PASSWORD=123456
    export OS_AUTH_URL=http://controller:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    EOF

    # Environment file for the test user
    cat << EOF > /root/user_dog-openrc
    export OS_USERNAME=user_dog
    export OS_PASSWORD=123456
    export OS_PROJECT_NAME=Demo
    export OS_USER_DOMAIN_NAME=RegionOne
    export OS_PROJECT_DOMAIN_NAME=RegionOne
    export OS_AUTH_URL=http://controller:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    EOF

    # Install the client, then create the domain, projects, user, and role
    dnf install python3-openstackclient -y
    source /root/admin-openrc
    openstack domain create --description "An RegionOne Domain" RegionOne
    openstack project create --domain default --description "Service Project" service
    openstack project create --domain RegionOne --description "Demo Project" Demo
    openstack user create --domain RegionOne --password 123456 user_dog
    openstack role create user_dog_role
    openstack role add --project Demo --user user_dog user_dog_role
    openstack domain list

Run the keystone script with `sh keystone.sh`.

Glance

    cd openstack-install
    vim glance.sh

    #!/bin/bash
    # Replace GLANCE_DBPASS with the password for the glance database
    echo "CREATE DATABASE glance;" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';" | mysql -u root -pMARIADB_PASS
    mysql -u root -pMARIADB_PASS -e "FLUSH PRIVILEGES;"

    source /root/admin-openrc
    # Create the glance user and set its password
    openstack user create --domain default --password GLANCE_PASS glance
    # Add the glance user to the service project with the admin role
    openstack role add --project service --user glance admin
    # Create the glance service entity
    openstack service create --name glance --description "OpenStack Image" image
    # Create the glance API endpoints
    openstack endpoint create --region RegionOne image public http://controller:9292
    openstack endpoint create --region RegionOne image internal http://controller:9292
    openstack endpoint create --region RegionOne image admin http://controller:9292

    # Install the package
    dnf install openstack-glance -y

    # Write the configuration file
    mv /etc/glance/glance-api.conf{,.bak}
    cat >/etc/glance/glance-api.conf << "EOF"
    [database]
    connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = glance
    password = GLANCE_PASS

    [paste_deploy]
    flavor = keystone

    [glance_store]
    stores = file,http
    default_store = file
    filesystem_store_datadir = /var/lib/glance/images/
    EOF

    # Synchronize the database
    su -s /bin/sh -c "glance-manage db_sync" glance
    # Start the service
    systemctl enable openstack-glance-api.service --now

    source /root/admin-openrc
    mkdir /root/openstack-install/iso/
    # Download the image
    wget -P /root/openstack-install/iso/ https://resource.qianyios.top/cloud/iso/cirros-0.4.0-x86_64-disk.img
    # Upload the image to the Image service
    source /root/admin-openrc
    openstack image create --disk-format qcow2 --container-format bare \
      --file /root/openstack-install/iso/cirros-0.4.0-x86_64-disk.img --public cirros
    # Verify the upload
    openstack image list

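Placement

Placement is OpenStack's resource scheduling component. It is generally not user-facing; Nova and other components call it. It is installed on the controller node.

Before installing and configuring the Placement service, create its database, service credentials, and API endpoints.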
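Every service in this guide repeats the same database preparation, so the pattern can be captured once. The helper below is a sketch, not part of the original scripts: `service_db_sql` is a hypothetical name, and `IF NOT EXISTS` is added so that rerunning it does not fail on an existing database.

```shell
#!/bin/bash
# Print the SQL that creates a service database and grants the service
# account full access from localhost and from any other host.
# Arguments: database name, account name, account password.
service_db_sql() {
    local db="$1" user="$2" pass="$3"
    cat << SQL
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
FLUSH PRIVILEGES;
SQL
}

# Usage on the controller (not executed here):
#   service_db_sql placement placement PLACEMENT_DBPASS | mysql -u root -pMARIADB_PASS
```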

Nodes: [controller]

    cd openstack-install
    vim placement.sh

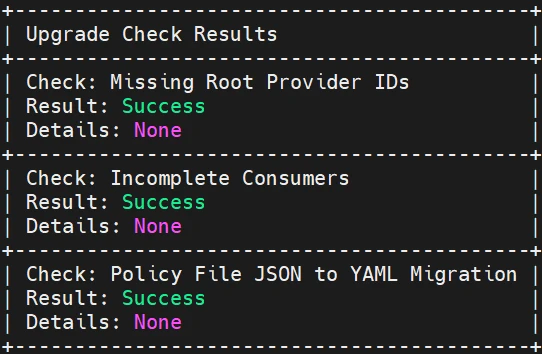

    #!/bin/bash
    # Create the database and grant privileges
    # Replace PLACEMENT_DBPASS with the access password for the placement database
    echo "CREATE DATABASE placement;" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';" | mysql -u root -pMARIADB_PASS
    mysql -u root -pMARIADB_PASS -e "FLUSH PRIVILEGES;"

    source /root/admin-openrc
    # Create the placement user and set its password
    openstack user create --domain default --password PLACEMENT_PASS placement
    # Add the placement user to the service project with the admin role
    openstack role add --project service --user placement admin
    # Create the placement service entity
    openstack service create --name placement \
      --description "Placement API" placement
    # Create the Placement API endpoints
    openstack endpoint create --region RegionOne \
      placement public http://controller:8778
    openstack endpoint create --region RegionOne \
      placement internal http://controller:8778
    openstack endpoint create --region RegionOne \
      placement admin http://controller:8778

    # Install placement
    dnf install openstack-placement-api -y

    # Configure placement
    mv /etc/placement/placement.conf{,.bak}
    # PLACEMENT_DBPASS is the database account password of the placement service
    # PLACEMENT_PASS is the password of the placement service
    # Do not put any comments in the placement.conf below, or later steps will fail
    cat > /etc/placement/placement.conf << "EOF"
    [placement_database]
    connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement
    [api]
    auth_strategy = keystone
    [keystone_authtoken]
    auth_url = http://controller:5000/v3
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = placement
    password = PLACEMENT_PASS
    EOF

    # Synchronize the database
    su -s /bin/sh -c "placement-manage db sync" placement

    # Convert the JSON policy file to YAML
    oslopolicy-convert-json-to-yaml --namespace placement \
      --policy-file /etc/placement/policy.json \
      --output-file /etc/placement/policy.yaml
    mv /etc/placement/policy.json{,.bak}

    systemctl restart httpd
    dnf install python3-osc-placement -y
    placement-status upgrade check

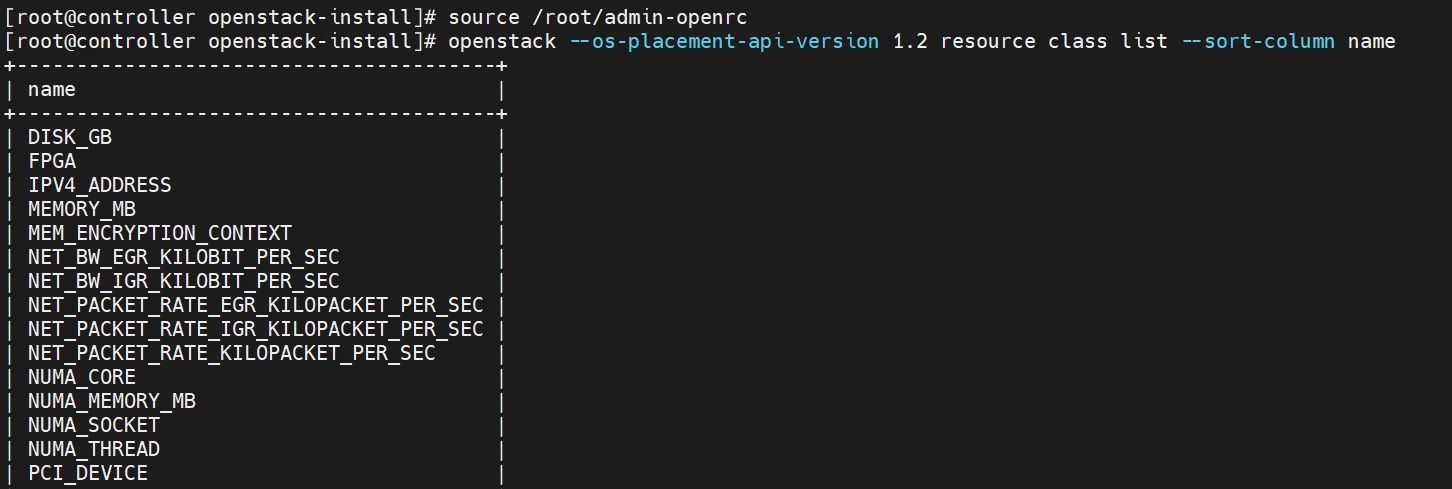

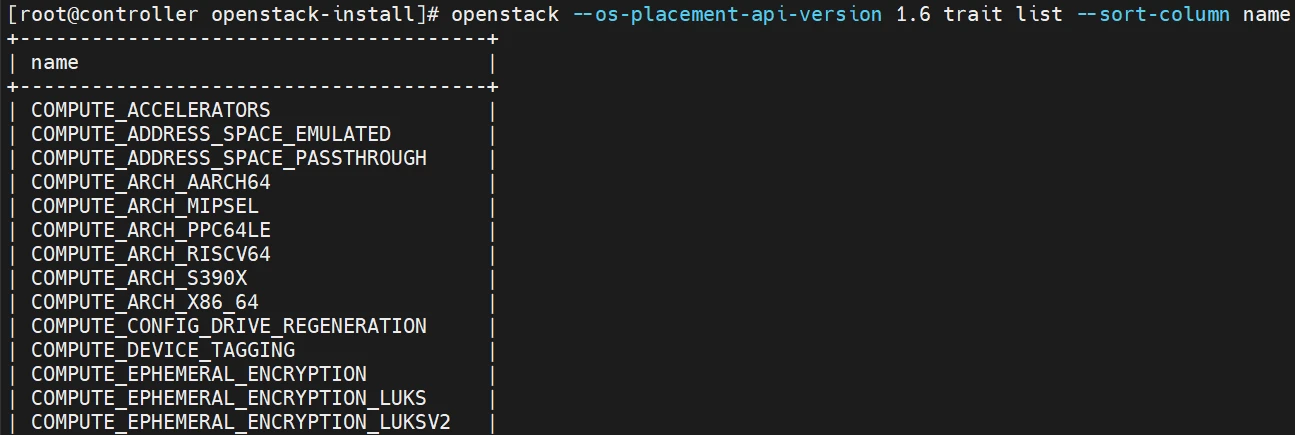

Verify the service by listing the available resource classes and traits

    source /root/admin-openrc
    openstack --os-placement-api-version 1.2 resource class list --sort-column name
    openstack --os-placement-api-version 1.6 trait list --sort-column name

Nova

Nova is OpenStack's compute service, responsible for creating and provisioning virtual machines.

controller

Nodes: [controller]

    cd openstack-install
    vim nova-controller.sh

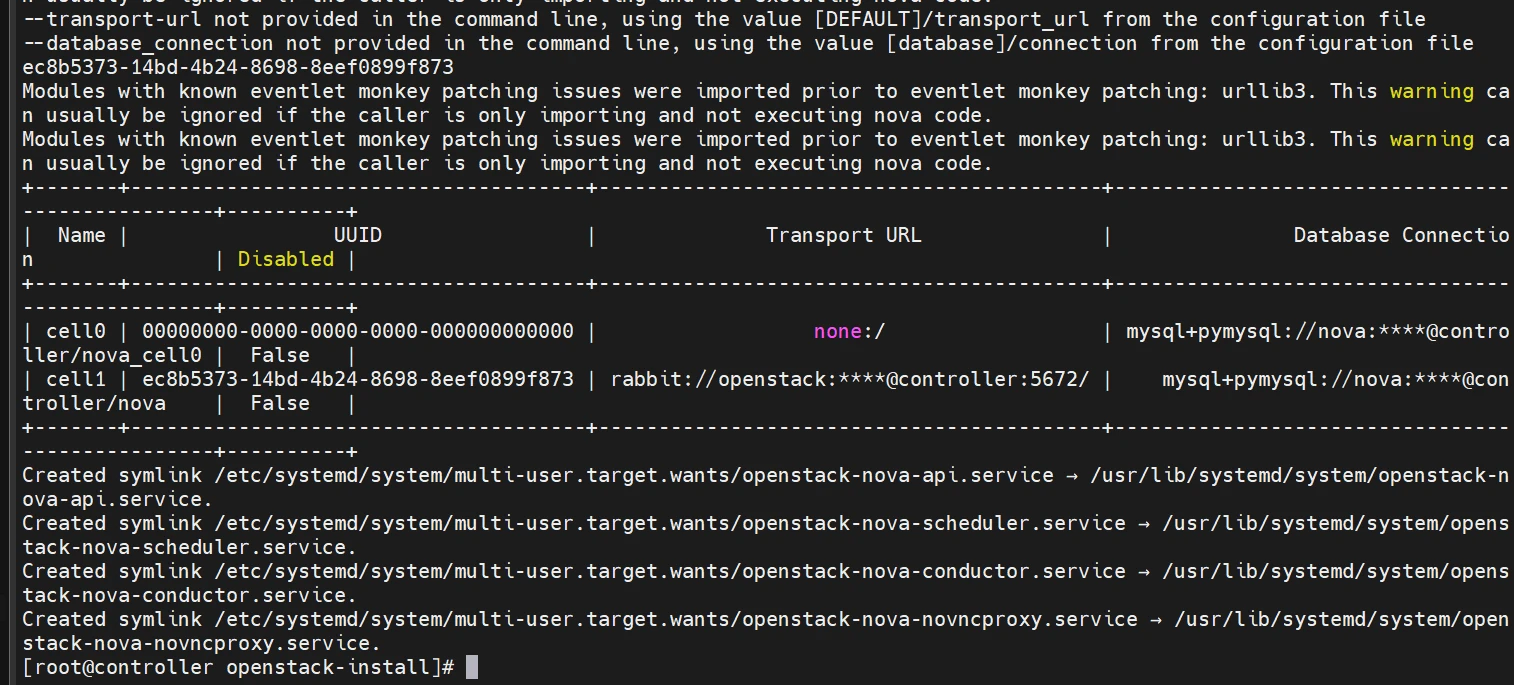

    #!/bin/bash
    # Create the databases and grant privileges
    # Create the nova_api, nova, and nova_cell0 databases
    echo "CREATE DATABASE nova_api;" | mysql -u root -pMARIADB_PASS
    echo "CREATE DATABASE nova;" | mysql -u root -pMARIADB_PASS
    echo "CREATE DATABASE nova_cell0;" | mysql -u root -pMARIADB_PASS
    # Grant privileges
    echo "GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';" | mysql -u root -pMARIADB_PASS
    mysql -u root -pMARIADB_PASS -e "FLUSH PRIVILEGES;"

    source /root/admin-openrc
    # Create the nova user and set its password
    openstack user create --domain default --password NOVA_PASS nova
    # Add the nova user to the service project with the admin role
    openstack role add --project service --user nova admin
    # Create the nova service entity
    openstack service create --name nova \
      --description "OpenStack Compute" compute
    # Create the Nova API endpoints
    openstack endpoint create --region RegionOne \
      compute public http://controller:8774/v2.1
    openstack endpoint create --region RegionOne \
      compute internal http://controller:8774/v2.1
    openstack endpoint create --region RegionOne \
      compute admin http://controller:8774/v2.1

    dnf install openstack-nova-api openstack-nova-conductor \
      openstack-nova-novncproxy openstack-nova-scheduler -y

    mv /etc/nova/nova.conf{,.bak}
    cat > /etc/nova/nova.conf <<EOF
    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    # RABBIT_PASS is the RabbitMQ password
    transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/
    # IP address of the controller node
    my_ip = 192.168.48.101
    log_dir = /var/log/nova
    state_path = /var/lib/nova

    [api_database]
    # NOVA_DBPASS is the password of the nova database account
    connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

    [database]
    # NOVA_DBPASS is the password of the nova database account
    connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    auth_url = http://controller:5000/v3
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    # NOVA_PASS is the password of the nova service
    password = NOVA_PASS

    [vnc]
    enabled = true
    server_listen = \$my_ip
    server_proxyclient_address = \$my_ip

    [glance]
    api_servers = http://controller:9292

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

    [placement]
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://controller:5000/v3
    username = placement
    # PLACEMENT_PASS is the password of the placement service
    password = PLACEMENT_PASS
    EOF

    # Synchronize the databases
    su -s /bin/sh -c "nova-manage api_db sync" nova
    su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
    su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
    su -s /bin/sh -c "nova-manage db sync" nova
    # Verify that cell0 and cell1 are registered correctly
    su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

    # Start the services
    systemctl enable \
      openstack-nova-api.service \
      openstack-nova-scheduler.service \
      openstack-nova-conductor.service \
      openstack-nova-novncproxy.service --now

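compute

Nodes: [compute]

Check whether the compute node supports hardware acceleration for virtual machines (x86_64)

    grep -Ec '(vmx|svm)' /proc/cpuinfo

A result of 0 means hardware acceleration is not supported.

In that case, go back to the Prerequisites section at the start of this article and read carefully how to enable hardware acceleration.

If you skipped that part, back to the beginning you go. Tiring, isn't it?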
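The same check can be wrapped in a guard that stops an install script early. This is a small sketch; `hw_accel_supported` is a hypothetical helper, and the optional file argument exists only so the check can be tried against a saved copy of cpuinfo.

```shell
#!/bin/bash
# Succeed if the given cpuinfo file (default /proc/cpuinfo) lists the
# Intel VT-x (vmx) or AMD-V (svm) CPU flag.
hw_accel_supported() {
    grep -Eq '(vmx|svm)' "${1:-/proc/cpuinfo}"
}

# Usage at the top of an install script (not executed here):
#   hw_accel_supported || { echo "Enable virtualization for this node first"; exit 1; }
```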

    mkdir openstack-install && cd openstack-install
    vim nova-compute.sh

    #!/bin/bash
    dnf install openstack-nova-compute -y
    mv /etc/nova/nova.conf{,.bak}
    cat > /etc/nova/nova.conf <<EOF
    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    # Replace RABBIT_PASS with the password of the openstack account in RabbitMQ
    transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/
    my_ip = 192.168.48.102
    compute_driver = libvirt.LibvirtDriver
    instances_path = /var/lib/nova/instances
    log_dir = /var/log/nova
    lock_path = /var/lock/nova
    state_path = /var/lib/nova

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    auth_url = http://controller:5000/v3
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    # Replace NOVA_PASS with the password of the nova user
    password = NOVA_PASS

    [vnc]
    enabled = true
    server_listen = \$my_ip
    server_proxyclient_address = \$my_ip
    # Using 192.168.48.101 (the controller) is recommended. This sets the address
    # the dashboard uses to open an instance console. If you browse from your own
    # computer without a hosts entry for controller, the host name will not
    # resolve, while the IP address will work.
    novncproxy_base_url = http://192.168.48.101:6080/vnc_auto.html

    [glance]
    api_servers = http://controller:9292

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

    [placement]
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://controller:5000/v3
    username = placement
    # PLACEMENT_PASS is the password of the Placement service
    password = PLACEMENT_PASS
    EOF

    systemctl enable libvirtd.service openstack-nova-compute.service --now

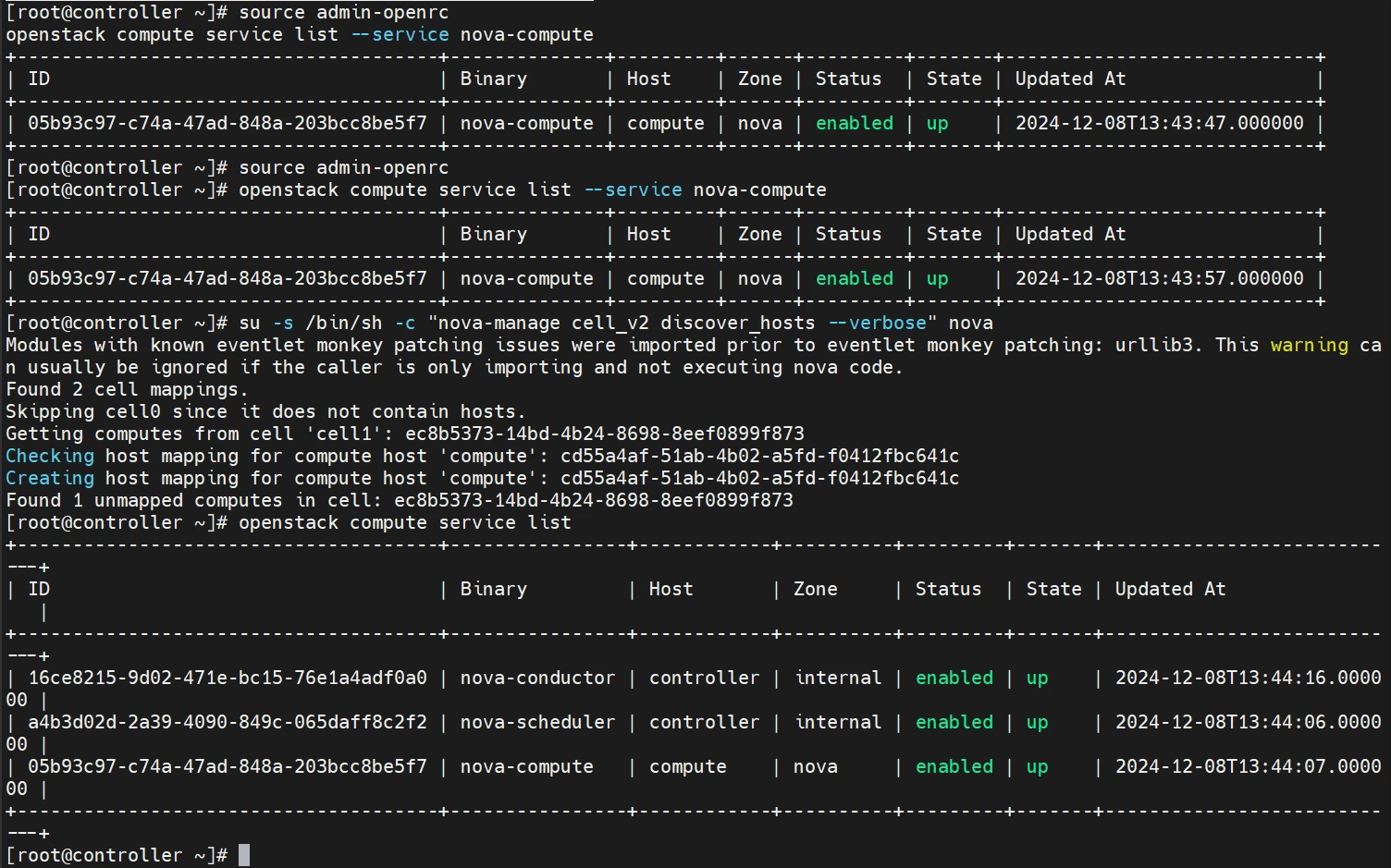

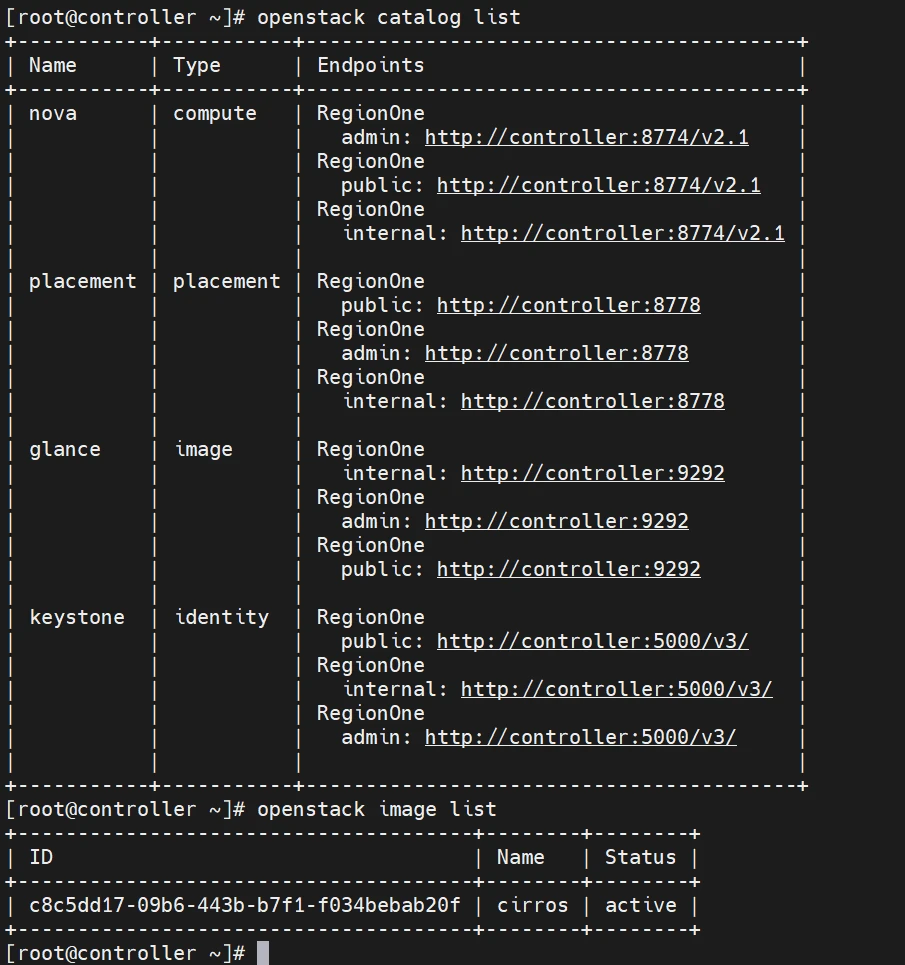

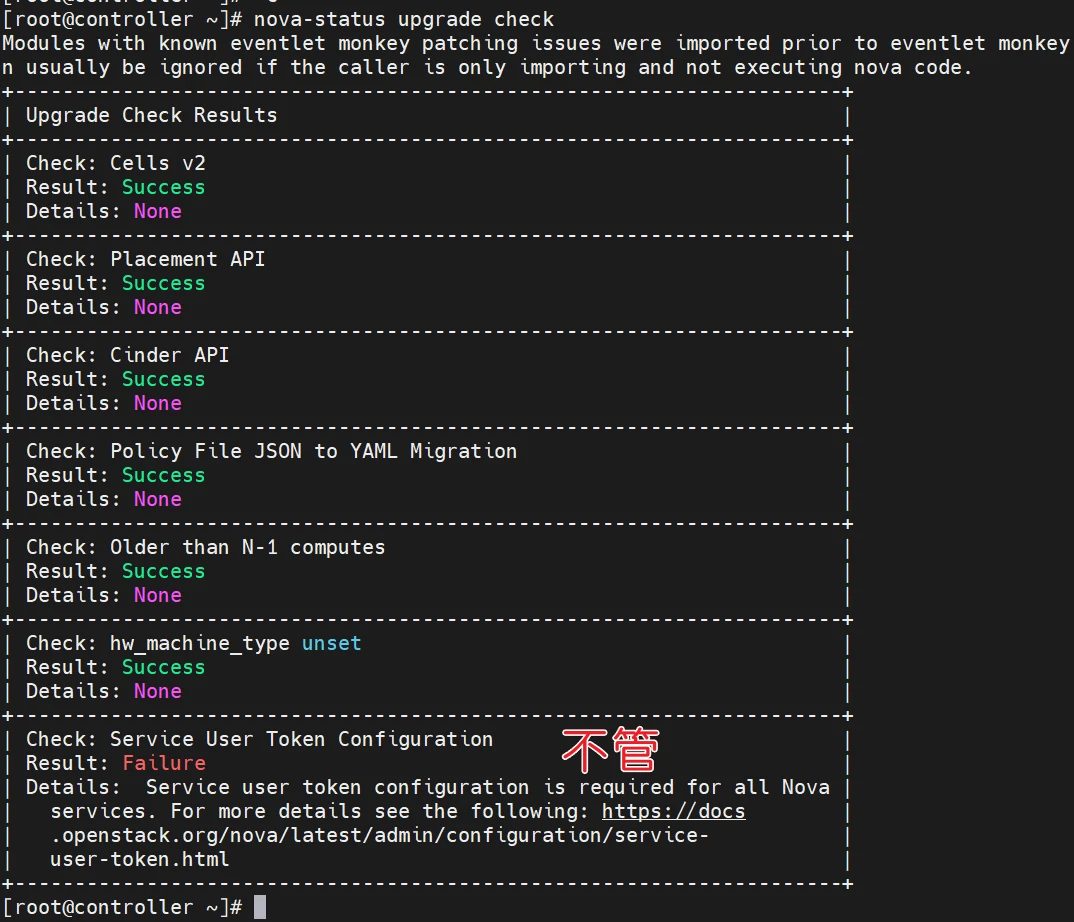

Verify the service

Nodes: [controller]

    source /root/admin-openrc
    openstack compute service list --service nova-compute
    su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
    openstack compute service list
    openstack catalog list
    openstack image list
    nova-status upgrade check

Neutron

Neutron is OpenStack's networking service, providing virtual switches, IP routing, DHCP, and related functions.

controller

Nodes: [controller]

    cd openstack-install
    vim neutron-controller.sh

    #!/bin/bash
    # Create the database and grant privileges
    echo "CREATE DATABASE neutron;" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';" | mysql -u root -pMARIADB_PASS
    mysql -u root -pMARIADB_PASS -e "FLUSH PRIVILEGES;"

    # Create the service credentials and API endpoints
    source /root/admin-openrc
    openstack user create --domain default --password NEUTRON_PASS neutron
    openstack role add --project service --user neutron admin
    openstack service create --name neutron \
      --description "OpenStack Networking" network
    openstack endpoint create --region RegionOne \
      network public http://controller:9696
    openstack endpoint create --region RegionOne \
      network internal http://controller:9696
    openstack endpoint create --region RegionOne \
      network admin http://controller:9696

    dnf install -y openstack-neutron openstack-neutron-linuxbridge ebtables ipset openstack-neutron-ml2

    mv /etc/neutron/neutron.conf{,.bak}
    cat >/etc/neutron/neutron.conf<<"EOF"
    [database]
    connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron
    [DEFAULT]
    core_plugin = ml2
    service_plugins = router
    allow_overlapping_ips = true
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = neutron
    password = NEUTRON_PASS
    [nova]
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    region_name = RegionOne
    project_name = service
    username = nova
    password = NOVA_PASS
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp
    [experimental]
    linuxbridge = true
    EOF

    mv /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}
    cat > /etc/neutron/plugins/ml2/ml2_conf.ini << EOF
    [ml2]
    type_drivers = flat,vlan,vxlan
    tenant_network_types = vxlan
    mechanism_drivers = linuxbridge,l2population
    extension_drivers = port_security
    [ml2_type_flat]
    flat_networks = provider
    [ml2_type_vxlan]
    vni_ranges = 1:1000
    [securitygroup]
    enable_ipset = true
    EOF

    cat > /etc/neutron/plugins/ml2/linuxbridge_agent.ini <<EOF
    [linux_bridge]
    # ens33 is the name of the first NIC
    physical_interface_mappings = provider:ens33
    [vxlan]
    enable_vxlan = true
    # 192.168.48.101 is the IP address of the controller node
    local_ip = 192.168.48.101
    l2_population = true
    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    EOF

    mv /etc/neutron/l3_agent.ini{,.bak}
    cat > /etc/neutron/l3_agent.ini << EOF
    [DEFAULT]
    interface_driver = linuxbridge
    EOF

    mv /etc/neutron/dhcp_agent.ini{,.bak}
    cat > /etc/neutron/dhcp_agent.ini << EOF
    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true
    EOF

    mv /etc/neutron/metadata_agent.ini{,.bak}
    cat > /etc/neutron/metadata_agent.ini << EOF
    [DEFAULT]
    nova_metadata_host = controller
    # METADATA_SECRET is the metadata secret
    metadata_proxy_shared_secret = METADATA_SECRET
    EOF

    # Append the [neutron] section to the end of nova.conf
    cat >> /etc/nova/nova.conf << EOF
    [neutron]
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    # NEUTRON_PASS is the password of the neutron service
    password = NEUTRON_PASS
    service_metadata_proxy = true
    # METADATA_SECRET is the metadata secret set above
    metadata_proxy_shared_secret = METADATA_SECRET
    EOF

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

    systemctl restart openstack-nova-api
    systemctl enable neutron-server.service neutron-linuxbridge-agent.service \
      neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service --now

    sh neutron-controller.sh

compute

Nodes: [compute]

    cd openstack-install
    vim neutron-compute.sh

    #!/bin/bash
    dnf install openstack-neutron-linuxbridge ebtables ipset -y

    # Configure neutron
    cat >/etc/neutron/neutron.conf << "EOF"
    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = neutron
    password = NEUTRON_PASS
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp
    EOF

    # Write /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    cat > /etc/neutron/plugins/ml2/linuxbridge_agent.ini <<EOF
    [linux_bridge]
    # ens33 is the name of the first NIC
    physical_interface_mappings = provider:ens33
    [vxlan]
    enable_vxlan = true
    # 192.168.48.102 is the IP address of the compute node
    local_ip = 192.168.48.102
    l2_population = true
    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    EOF

    # Configure the nova compute service to use neutron
    # (append the [neutron] section to the end of nova.conf)
    cat >> /etc/nova/nova.conf << EOF
    [neutron]
    auth_url = http://controller:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    # NEUTRON_PASS is the password of the neutron service
    password = NEUTRON_PASS
    service_metadata_proxy = true
    # METADATA_SECRET is the metadata secret set above
    metadata_proxy_shared_secret = METADATA_SECRET
    EOF

    systemctl restart openstack-nova-compute.service
    systemctl enable neutron-linuxbridge-agent --now

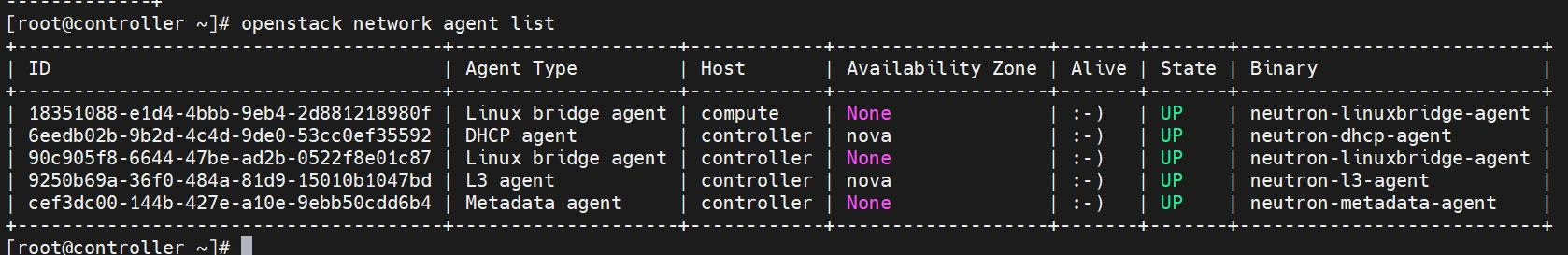

Verify the service

Nodes: [controller]

    source /root/admin-openrc
    openstack network agent list

Make sure all five agents are up before moving on to the next step.
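The "all five are up" check can be automated by parsing the agent list. The sketch below rests on an assumption worth verifying on your system: that `openstack network agent list -f value -c Alive -c Binary` prints one "alive binary" pair per line, with `True` (or `:-)`) for a live agent. `all_agents_up` is a hypothetical helper.

```shell
#!/bin/bash
# Read "alive binary" pairs on stdin; print each agent that is not alive
# and return non-zero if any agent is down.
all_agents_up() {
    local alive binary down=0
    while read -r alive binary; do
        if [ -z "$alive" ]; then continue; fi
        case "$alive" in
            True|":-)") ;;                           # agent is alive
            *) echo "DOWN: $binary"; down=1 ;;       # anything else counts as down
        esac
    done
    return "$down"
}

# Usage on the controller (not executed here):
#   openstack network agent list -f value -c Alive -c Binary | all_agents_up
```

With the scripts in this guide, the five agents are the Linux bridge, DHCP, metadata, and L3 agents on controller plus the Linux bridge agent on compute.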

Cinder

Cinder is OpenStack's storage service, providing creation, provisioning, and backup of block devices.

controller

Nodes: [controller]

    cd openstack-install
    vim cinder-controller.sh

my_ip = 192.168.48.101 is the IP address of the controller node

    #!/bin/bash
    # Create the database and grant privileges
    echo "CREATE DATABASE cinder;" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';" | mysql -u root -pMARIADB_PASS
    mysql -u root -pMARIADB_PASS -e "FLUSH PRIVILEGES;"

    # Create the service credentials and API endpoints
    source /root/admin-openrc
    openstack user create --domain default --password CINDER_PASS cinder
    openstack role add --project service --user cinder admin
    openstack service create --name cinderv3 \
      --description "OpenStack Block Storage" volumev3
    openstack endpoint create --region RegionOne \
      volumev3 public http://controller:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev3 internal http://controller:8776/v3/%\(project_id\)s
    openstack endpoint create --region RegionOne \
      volumev3 admin http://controller:8776/v3/%\(project_id\)s

    dnf install openstack-cinder-api openstack-cinder-scheduler -y

    cat >/etc/cinder/cinder.conf<<"EOF"
    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    my_ip = 192.168.48.101
    [database]
    connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder
    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = cinder
    password = CINDER_PASS
    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp
    EOF

    # Synchronize the database
    su -s /bin/sh -c "cinder-manage db sync" cinder

    # Tell nova which region cinder is in
    cat >> /etc/nova/nova.conf << EOF
    [cinder]
    os_region_name = RegionOne
    EOF

    systemctl restart openstack-nova-api
    systemctl enable openstack-cinder-api openstack-cinder-scheduler --now

Storage

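Shut down all nodes. If the shutdown script will not run, go back to the beginning of this guide and copy it again. Mind the shutdown order; it is not repeated here, so refer back to the section on physical node shutdown order.

Run on: [all nodes]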

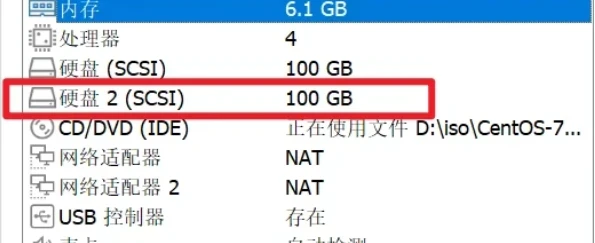

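Add a 100 GB disk to the storage node.

Run on: [Storage]

Add the new disk to the Storage node as listed in the host topology at the start of this guide. On my machine it appears as /dev/sdb.

Cinder supports many kinds of storage backends. This guide uses the simplest one, LVM, as a reference; if you want another backend such as Ceph, configure it yourself.

    mkdir openstack-install && cd openstack-install
    vim cinder-storage.sh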

    # Install LVM, the iSCSI target tooling and the cinder volume/backup services
    dnf install lvm2 device-mapper-persistent-data scsi-target-utils rpcbind nfs-utils openstack-cinder-volume openstack-cinder-backup -y

    # Create the LVM physical volume and volume group on the new disk
    pvcreate /dev/sdb
    vgcreate cinder-volumes /dev/sdb

    mv /etc/cinder/cinder.conf{,.bak}
    cat >/etc/cinder/cinder.conf<<"EOF"
    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    auth_strategy = keystone
    # my_ip is the storage node's own IP (192.168.48.103 in this guide's topology)
    my_ip = 192.168.48.103
    enabled_backends = lvm
    glance_api_servers = http://controller:9292

    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = cinder
    password = CINDER_PASS

    [database]
    connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

    [lvm]
    volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
    volume_group = cinder-volumes
    target_protocol = iscsi
    target_helper = lioadm

    [oslo_concurrency]
    lock_path = /var/lib/cinder/tmp
    EOF

    systemctl enable openstack-cinder-volume target --now

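At this point the Cinder deployment is complete. It can be verified from the controller with the commands in the verification step further below.

Configure cinder backup (optional)

cinder-backup is an optional backup service. Cinder likewise supports many backup backends; this guide uses Swift. If you want another backend such as NFS, configure it yourself, for example by following the NFS configuration notes in the official OpenStack documentation.

Edit /etc/cinder/cinder.conf and add the following under [DEFAULT]: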

    [DEFAULT]
    backup_driver = cinder.backup.drivers.swift.SwiftBackupDriver
    backup_swift_url = SWIFT_URL

SWIFT_URL here is the URL of the Swift service in your environment. After Swift has been deployed, obtain it by running openstack catalog show object-store.

    systemctl start openstack-cinder-backup
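The [DEFAULT] edit described above can also be scripted. The following is a minimal sketch rather than part of the original procedure: set_swift_backup is a hypothetical helper name, and the URL in the usage comment is only a placeholder for whatever openstack catalog show object-store reports in your environment.

```shell
#!/bin/bash
# Hypothetical helper: add the Swift backup settings directly below the
# [DEFAULT] header of a cinder.conf-style file.
set_swift_backup() {
    local conf="$1" swift_url="$2"
    # Do nothing if a backup_driver line already exists, so reruns are harmless.
    if grep -q '^backup_driver' "$conf"; then
        return 0
    fi
    awk -v url="$swift_url" '
        { print }
        /^\[DEFAULT\]/ {
            print "backup_driver = cinder.backup.drivers.swift.SwiftBackupDriver"
            print "backup_swift_url = " url
        }' "$conf" > "$conf.tmp" || return 1
    # Write back through redirection so the original owner and mode are kept.
    cat "$conf.tmp" > "$conf" && rm -f "$conf.tmp"
}

# Usage on the storage node (placeholder URL, replace with the real one):
#   set_swift_backup /etc/cinder/cinder.conf "http://controller:8080/v1/AUTH_<project_id>"
```

The helper only touches the file when no backup_driver line is present, so it is safe to run before each restart of openstack-cinder-backup.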

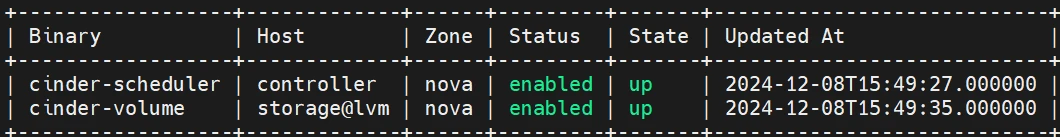

Verify the service

Run on: [controller]

    source /root/admin-openrc
    systemctl restart httpd memcached
    openstack volume service list
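Reading the service table by eye works, but the check can also be automated. The sketch below is an illustration under stated assumptions: all_services_up is a hypothetical helper, and it expects two whitespace-separated columns (binary and state) on standard input, which is what openstack volume service list prints when restricted with -f value -c Binary -c State.

```shell
#!/bin/bash
# Hypothetical helper: read "binary state" pairs on stdin and return non-zero
# if any service is not "up" or if nothing was listed at all.
all_services_up() {
    local binary state seen=0 rc=0
    while read -r binary state; do
        [ -z "$binary" ] && continue
        seen=1
        if [ "$state" != "up" ]; then
            echo "service not up: $binary ($state)" >&2
            rc=1
        fi
    done
    if [ "$seen" -ne 1 ]; then
        echo "no services listed" >&2
        return 1
    fi
    return "$rc"
}

# Usage on the controller, with admin credentials loaded:
#   openstack volume service list -f value -c Binary -c State | all_services_up \
#       && echo "all cinder services are up"
```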

Horizon

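Horizon is the web front end provided by OpenStack. It lets users control an OpenStack cluster with point-and-click operations in a browser instead of working through CLI commands. Horizon is generally deployed on the controller node.

Run on: [controller]

    cd openstack-install
    vim horizon-controller.sh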

    dnf install openstack-dashboard -y
    cp /etc/openstack-dashboard/local_settings{,.bak}

    # NOTE: the four sed commands were truncated in the source after the search
    # pattern. The form below is an assumed reconstruction that comments out the
    # existing settings before the new values are appended.
    sed -i 's/^ALLOWED_HOSTS/#&/' /etc/openstack-dashboard/local_settings
    sed -i 's/^OPENSTACK_HOST/#&/' /etc/openstack-dashboard/local_settings
    sed -i 's/^OPENSTACK_KEYSTONE_URL/#&/' /etc/openstack-dashboard/local_settings
    sed -i 's/^TIME_ZONE/#&/' /etc/openstack-dashboard/local_settings

    cat >>/etc/openstack-dashboard/local_settings << "EOF"
    OPENSTACK_HOST = "controller"
    ALLOWED_HOSTS = ['*']
    OPENSTACK_KEYSTONE_URL = "http://controller:5000/v3"
    SESSION_ENGINE = 'django.contrib.sessions.backends.cache'
    CACHES = {
        'default': {
            'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
            'LOCATION': 'controller:11211',
        }
    }
    OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True
    OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"
    OPENSTACK_KEYSTONE_DEFAULT_ROLE = "member"
    WEBROOT = '/dashboard'
    TIME_ZONE = "Asia/Shanghai"
    POLICY_FILES_PATH = "/etc/openstack-dashboard"
    OPENSTACK_API_VERSIONS = {
        "identity": 3,
        "image": 2,
        "volume": 3,
    }
    EOF

    systemctl restart httpd memcached

    sh horizon-controller.sh

At this point the Horizon deployment is complete. Open a browser and go to http://192.168.48.101/dashboard to reach the Horizon login page.

Swift

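Swift provides an elastically scalable, highly available distributed object storage service, suited to storing large volumes of unstructured data.

controller

Run on: [controller]

    cd openstack-install
    vim swift-controller-1.sh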

    source /root/admin-openrc

    # Register the swift user, service and endpoints in Keystone
    openstack user create --domain default --password SWIFT_PASS swift
    openstack role add --project service --user swift admin
    openstack service create --name swift --description "OpenStack Object Storage" object-store
    openstack endpoint create --region RegionOne object-store public http://controller:8080/v1/AUTH_%\(project_id\)s
    openstack endpoint create --region RegionOne object-store internal http://controller:8080/v1/AUTH_%\(project_id\)s
    openstack endpoint create --region RegionOne object-store admin http://controller:8080/v1

    # Install the proxy service and set the service password
    dnf install openstack-swift-proxy python3-swiftclient \
      python3-keystoneclient python3-keystonemiddleware memcached -y
    sed -i "s/password = swift/password = SWIFT_PASS/g" /etc/swift/proxy-server.conf

Run the script:

    sh swift-controller-1.sh

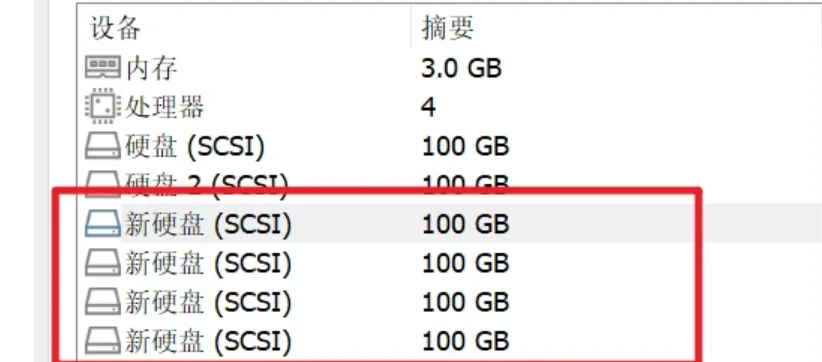

Storage

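Four disks are added to this node for Swift to use.

Shut down all nodes first; refer back to the physical node shutdown order.

Add four disks to the storage node. Once they are attached, the nodes can be powered on again.

Run on: [Storage]

    cd openstack-install
    vim swift-storage-1.sh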

    dnf install openstack-swift-account openstack-swift-container openstack-swift-object -y
    dnf install xfsprogs rsync -y

    # Format the four Swift disks as XFS and create their mount points
    mkfs.xfs -f /dev/sdc
    mkfs.xfs -f /dev/sdd
    mkfs.xfs -f /dev/sde
    mkfs.xfs -f /dev/sdf
    mkdir -p /srv/node/sdc
    mkdir -p /srv/node/sdd
    mkdir -p /srv/node/sde
    mkdir -p /srv/node/sdf

    cat >> /etc/fstab << EOF
    /dev/sdc /srv/node/sdc xfs noatime 0 2
    /dev/sdd /srv/node/sdd xfs noatime 0 2
    /dev/sde /srv/node/sde xfs noatime 0 2
    /dev/sdf /srv/node/sdf xfs noatime 0 2
    EOF

    systemctl daemon-reload
    mount -t xfs /dev/sdc /srv/node/sdc
    mount -t xfs /dev/sdd /srv/node/sdd
    mount -t xfs /dev/sde /srv/node/sde
    mount -t xfs /dev/sdf /srv/node/sdf

    # Configure rsync, which Swift uses for replication
    mv /etc/rsyncd.conf{,.bak}
    cat>/etc/rsyncd.conf<<EOF
    [DEFAULT]
    uid = swift
    gid = swift
    log file = /var/log/rsyncd.log
    pid file = /var/run/rsyncd.pid
    address = 192.168.48.103

    [account]
    max connections = 2
    path = /srv/node/
    read only = False
    lock file = /var/lock/account.lock

    [container]
    max connections = 2
    path = /srv/node/
    read only = False
    lock file = /var/lock/container.lock

    [object]
    max connections = 2
    path = /srv/node/
    read only = False
    lock file = /var/lock/object.lock
    EOF
    systemctl enable rsyncd.service --now

    # Bind the account, container and object servers to the storage node's IP
    sudo sed -i 's/^bind_ip = 127\.0\.0\.1$/bind_ip = 192.168.48.103/' /etc/swift/account-server.conf /etc/swift/container-server.conf /etc/swift/object-server.conf

    # Ensure correct ownership of the mount point directory structure
    chown -R swift:swift /srv/node

    # Create the recon directory and ensure it has the correct ownership
    mkdir -p /var/cache/swift
    chown -R root:swift /var/cache/swift
    chmod -R 775 /var/cache/swift
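The per-disk commands in the script above repeat the same pattern for sdc through sdf, so they can be driven from a device list instead. This is only a sketch under assumptions: swift_fstab_lines is a hypothetical helper, and the device names are the ones used in this guide and may differ on your storage node.

```shell
#!/bin/bash
# Hypothetical helper: print one fstab entry per device name, in the same
# format the script above writes to /etc/fstab.
swift_fstab_lines() {
    local dev
    for dev in "$@"; do
        printf '/dev/%s /srv/node/%s xfs noatime 0 2\n' "$dev" "$dev"
    done
}

# Dry run: show what would be appended to /etc/fstab.
swift_fstab_lines sdc sdd sde sdf

# To apply on the storage node (destructive, formats the disks):
#   for dev in sdc sdd sde sdf; do
#       mkfs.xfs -f "/dev/$dev" && mkdir -p "/srv/node/$dev"
#   done
#   swift_fstab_lines sdc sdd sde sdf >> /etc/fstab
#   for dev in sdc sdd sde sdf; do mount -t xfs "/dev/$dev" "/srv/node/$dev"; done
```

Keeping the device list in one place makes it harder to format one disk and forget its fstab entry.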

controller

Run on: [controller]

    cd openstack-install
    vim swift-controller-2.sh

    cd /etc/swift

    # Account ring
    swift-ring-builder account.builder create 10 3 1
    swift-ring-builder account.builder add \
      --region 1 --zone 1 --ip 192.168.48.103 \
      --port 6202 --device sdc --weight 100
    swift-ring-builder account.builder add \
      --region 1 --zone 1 --ip 192.168.48.103 \
      --port 6202 --device sdd --weight 100
    swift-ring-builder account.builder add \
      --region 1 --zone 2 --ip 192.168.48.103 \
      --port 6202 --device sde --weight 100
    swift-ring-builder account.builder add \
      --region 1 --zone 2 --ip 192.168.48.103 \
      --port 6202 --device sdf --weight 100
    swift-ring-builder account.builder rebalance
    swift-ring-builder account.builder

    # Container ring
    swift-ring-builder container.builder create 10 3 1
    swift-ring-builder container.builder add \
      --region 1 --zone 1 --ip 192.168.48.103 \
      --port 6201 --device sdc --weight 100
    swift-ring-builder container.builder add \
      --region 1 --zone 1 --ip 192.168.48.103 \
      --port 6201 --device sdd --weight 100
    swift-ring-builder container.builder add \
      --region 1 --zone 2 --ip 192.168.48.103 \
      --port 6201 --device sde --weight 100
    swift-ring-builder container.builder add \
      --region 1 --zone 2 --ip 192.168.48.103 \
      --port 6201 --device sdf --weight 100
    swift-ring-builder container.builder
    swift-ring-builder container.builder rebalance

    # Object ring
    swift-ring-builder object.builder create 10 3 1
    swift-ring-builder object.builder add \
      --region 1 --zone 1 --ip 192.168.48.103 \
      --port 6200 --device sdc --weight 100
    swift-ring-builder object.builder add \
      --region 1 --zone 1 --ip 192.168.48.103 \
      --port 6200 --device sdd --weight 100
    swift-ring-builder object.builder add \
      --region 1 --zone 2 --ip 192.168.48.103 \
      --port 6200 --device sde --weight 100
    swift-ring-builder object.builder add \
      --region 1 --zone 2 --ip 192.168.48.103 \
      --port 6200 --device sdf --weight 100
    swift-ring-builder object.builder
    swift-ring-builder object.builder rebalance

    # Copy the ring files to the storage node
    scp /etc/swift/account.ring.gz \
      /etc/swift/container.ring.gz \
      /etc/swift/object.ring.gz \
      192.168.48.103:/etc/swift

    mv /etc/swift/swift.conf{,.bak}
    cat> /etc/swift/swift.conf<<EOF
    [swift-hash]
    swift_hash_path_suffix = swift
    swift_hash_path_prefix = swift

    [storage-policy:0]
    name = Policy-0
    default = yes
    EOF

    # -----------------------------------------------------------------------
    # Replace the password below with the root password of your storage node
    sshpass -p 'Lj201840.' scp /etc/swift/swift.conf 192.168.48.103:/etc/swift
    # -----------------------------------------------------------------------

    chown -R root:swift /etc/swift

    # Use ansible to fix ownership of /etc/swift on the storage node as well
    yum install ansible -y
    cat << EOF >> /etc/ansible/hosts
    [storage]
    192.168.48.103 ansible_user=root
    EOF
    ansible storage -m command -a "chown -R root:swift /etc/swift" -b --become-user root

    systemctl enable openstack-swift-proxy.service memcached.service --now
    systemctl restart openstack-swift-proxy.service memcached.service

    sh swift-controller-2.sh
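For orientation, the three numbers passed to swift-ring-builder ... create 10 3 1 in the script are the partition power, the replica count, and min_part_hours. A rough sketch of the resulting arithmetic follows, assuming equally weighted devices as in this guide; ring_parts_per_device is a hypothetical helper, not a Swift command.

```shell
#!/bin/bash
# Hypothetical helper: average number of partition replicas each device holds
# in a balanced ring whose devices all have the same weight.
#   $1 = partition power (the ring has 2^power partitions)
#   $2 = replica count
#   $3 = number of devices
ring_parts_per_device() {
    local part_power="$1" replicas="$2" devices="$3"
    echo $(( (1 << part_power) * replicas / devices ))
}

# Values from the script: part power 10, 3 replicas, 4 devices (sdc..sdf).
ring_parts_per_device 10 3 4   # prints 768
```

With a partition power of 10 the ring has 1024 partitions, and three replicas spread over four disks give each disk about 768 partition replicas. The output of swift-ring-builder account.builder should show a comparable figure per device after rebalance.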

Storage

    cd openstack-install
    vim swift-storage-2.sh

The script contents:

    # Account services
    systemctl enable openstack-swift-account.service openstack-swift-account-auditor.service \
      openstack-swift-account-reaper.service openstack-swift-account-replicator.service
    systemctl start openstack-swift-account.service openstack-swift-account-auditor.service \
      openstack-swift-account-reaper.service openstack-swift-account-replicator.service

    # Container services
    systemctl enable openstack-swift-container.service \
      openstack-swift-container-auditor.service openstack-swift-container-replicator.service \
      openstack-swift-container-updater.service
    systemctl start openstack-swift-container.service \
      openstack-swift-container-auditor.service openstack-swift-container-replicator.service \
      openstack-swift-container-updater.service

    # Object services
    systemctl enable openstack-swift-object.service openstack-swift-object-auditor.service \
      openstack-swift-object-replicator.service openstack-swift-object-updater.service
    systemctl start openstack-swift-object.service openstack-swift-object-auditor.service \
      openstack-swift-object-replicator.service openstack-swift-object-updater.service

    # Restart the web and Nova services on the controller through ansible
    yum install ansible -y
    cat << EOF >> /etc/ansible/hosts
    [controller]
    192.168.48.101 ansible_user=root
    EOF
    ansible controller -m command -a "systemctl restart httpd memcached" -b --become-user root
    ansible controller -m command -a "systemctl restart openstack-nova*" -b --become-user root

Verification

Run on: [controller]

    source /root/admin-openrc
    cd /etc/swift
    swift stat

    [root@controller swift]# swift stat
                   Account: AUTH_07a1ce96dca54f1bb0d3b968f1343617
                Containers: 0
                   Objects: 0
                     Bytes: 0
           X-Put-Timestamp: 1684919814.32783
               X-Timestamp: 1684919814.32783
                X-Trans-Id: txd6f3affa0140455b935ff-00646dd605
              Content-Type: text/plain; charset=utf-8
    X-Openstack-Request-Id: txd6f3affa0140455b935ff-00646dd605

    # Test uploading an image
    [root@controller swift]# cd
    [root@controller ~]# swift upload demo /root/openstack-install/iso/cirros-0.4.0-x86_64-disk.img --object-name image
    image

Heat

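Heat is OpenStack's orchestration service. It orchestrates composite cloud applications from descriptive templates and is also known as the Orchestration Service. The Heat services are generally installed on the controller node.

Run on: [controller]

    cd openstack-install
    vim heat-controller.sh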

    # Create the heat database and grant access to the heat user.
    # The echo lines were truncated in the source; they are completed here
    # following the same pattern as the cinder script earlier in this guide.
    echo "CREATE DATABASE heat;" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON heat.* TO 'heat'@'localhost' IDENTIFIED BY 'HEAT_DBPASS';" | mysql -u root -pMARIADB_PASS
    echo "GRANT ALL PRIVILEGES ON heat.* TO 'heat'@'%' IDENTIFIED BY 'HEAT_DBPASS';" | mysql -u root -pMARIADB_PASS
    mysql -u root -pMARIADB_PASS -e "FLUSH PRIVILEGES;"

    # Register the heat user, services and endpoints in Keystone
    source /root/admin-openrc
    openstack user create --domain default --password HEAT_PASS heat
    openstack role add --project service --user heat admin
    openstack service create --name heat \
      --description "Orchestration" orchestration
    openstack service create --name heat-cfn \
      --description "Orchestration" cloudformation
    openstack endpoint create --region RegionOne \
      orchestration public http://controller:8004/v1/%\(tenant_id\)s
    openstack endpoint create --region RegionOne \
      orchestration internal http://controller:8004/v1/%\(tenant_id\)s
    openstack endpoint create --region RegionOne \
      orchestration admin http://controller:8004/v1/%\(tenant_id\)s
    openstack endpoint create --region RegionOne \
      cloudformation public http://controller:8000/v1
    openstack endpoint create --region RegionOne \
      cloudformation internal http://controller:8000/v1
    openstack endpoint create --region RegionOne \
      cloudformation admin http://controller:8000/v1

    # Create the stack domain, its admin user and the stack roles.
    # The password must match stack_domain_admin_password in heat.conf below.
    openstack domain create --description "Stack projects and users" heat
    openstack user create --domain heat --password HEAT_DOMAIN_PASS heat_domain_admin
    openstack role add --domain heat --user-domain heat --user heat_domain_admin admin
    openstack role create heat_stack_owner
    openstack role add --project demo --user demo heat_stack_owner
    openstack role create heat_stack_user

    dnf install openstack-heat-api openstack-heat-api-cfn openstack-heat-engine -y

    mv /etc/heat/heat.conf{,.bak}
    cat > /etc/heat/heat.conf << EOF
    [database]
    connection = mysql+pymysql://heat:HEAT_DBPASS@controller/heat

    [DEFAULT]
    transport_url = rabbit://openstack:RABBIT_PASS@controller
    heat_metadata_server_url = http://controller:8000
    heat_waitcondition_server_url = http://controller:8000/v1/waitcondition
    stack_domain_admin = heat_domain_admin
    stack_domain_admin_password = HEAT_DOMAIN_PASS
    stack_user_domain_name = heat

    [keystone_authtoken]
    www_authenticate_uri = http://controller:5000
    auth_url = http://controller:5000
    memcached_servers = controller:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = heat
    password = HEAT_PASS

    [trustee]
    auth_type = password
    auth_url = http://controller:5000
    username = heat
    password = HEAT_PASS
    user_domain_name = default

    [clients_keystone]
    auth_uri = http://controller:5000
    EOF

    su -s /bin/sh -c "heat-manage db_sync" heat

    systemctl enable openstack-heat-api.service \
      openstack-heat-api-cfn.service openstack-heat-engine.service --now
    systemctl restart openstack-heat-api.service \
      openstack-heat-api-cfn.service openstack-heat-engine.service
    systemctl restart httpd memcached

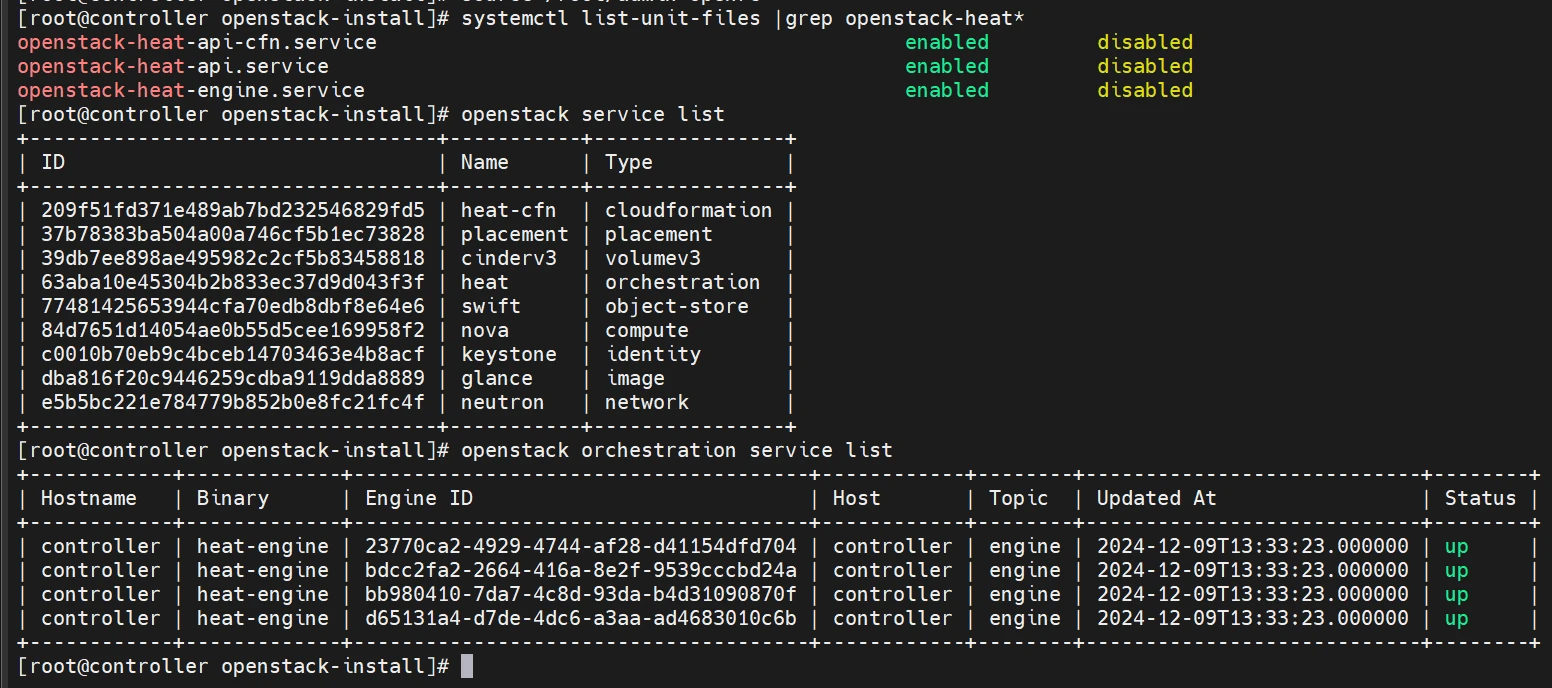

Verification

    source /root/admin-openrc
    systemctl list-unit-files | grep 'openstack-heat'
    openstack service list
    openstack orchestration service list
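Once the engine reports as up, the "descriptive templates" that Heat works from can be tried with a very small stack. The sketch below is illustrative only and rests on several assumptions: write_demo_template and the file name are hypothetical, the flavor name m1.tiny is a placeholder for a flavor you have created, the image name cirros assumes the test image from the image step later in this guide already exists, and the network is passed in as a parameter.

```shell
#!/bin/bash
# Hypothetical helper: write a minimal HOT template describing one server.
write_demo_template() {
    local out="$1"
    cat > "$out" << 'EOF'
heat_template_version: 2018-08-31
description: Minimal single-server stack (illustrative only)
parameters:
  network:
    type: string
    description: Name or ID of an existing network
resources:
  server:
    type: OS::Nova::Server
    properties:
      image: cirros      # assumed image name
      flavor: m1.tiny    # assumed flavor name
      networks:
        - network: { get_param: network }
EOF
}

# Usage on the controller, with admin credentials loaded:
#   write_demo_template /root/demo-stack.yaml
#   openstack stack create -t /root/demo-stack.yaml \
#       --parameter network=<your-network> demo-stack
#   openstack stack list
```

Deleting the stack afterwards with openstack stack delete demo-stack removes the server it created, which is the main convenience of describing resources in a template.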

Creating an instance

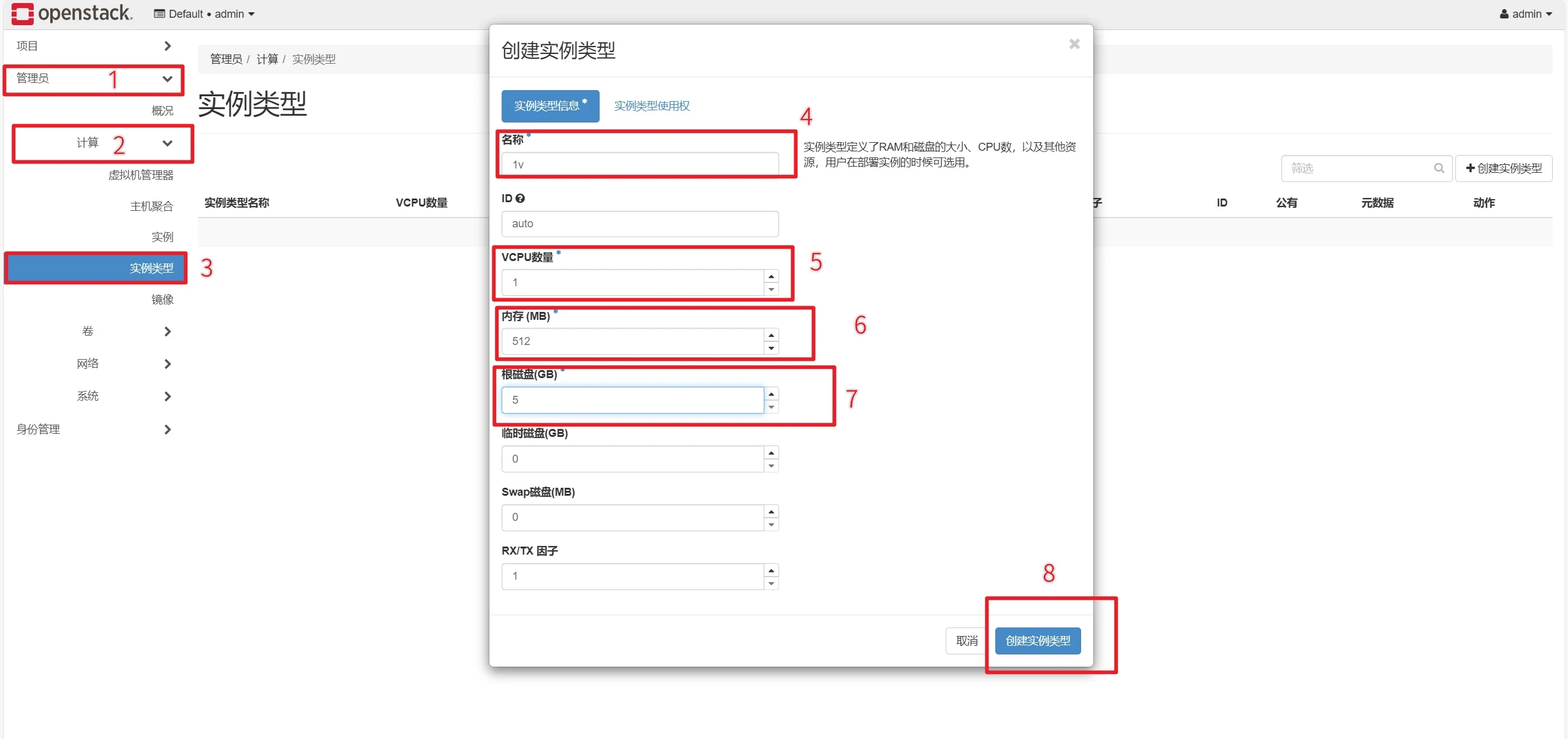

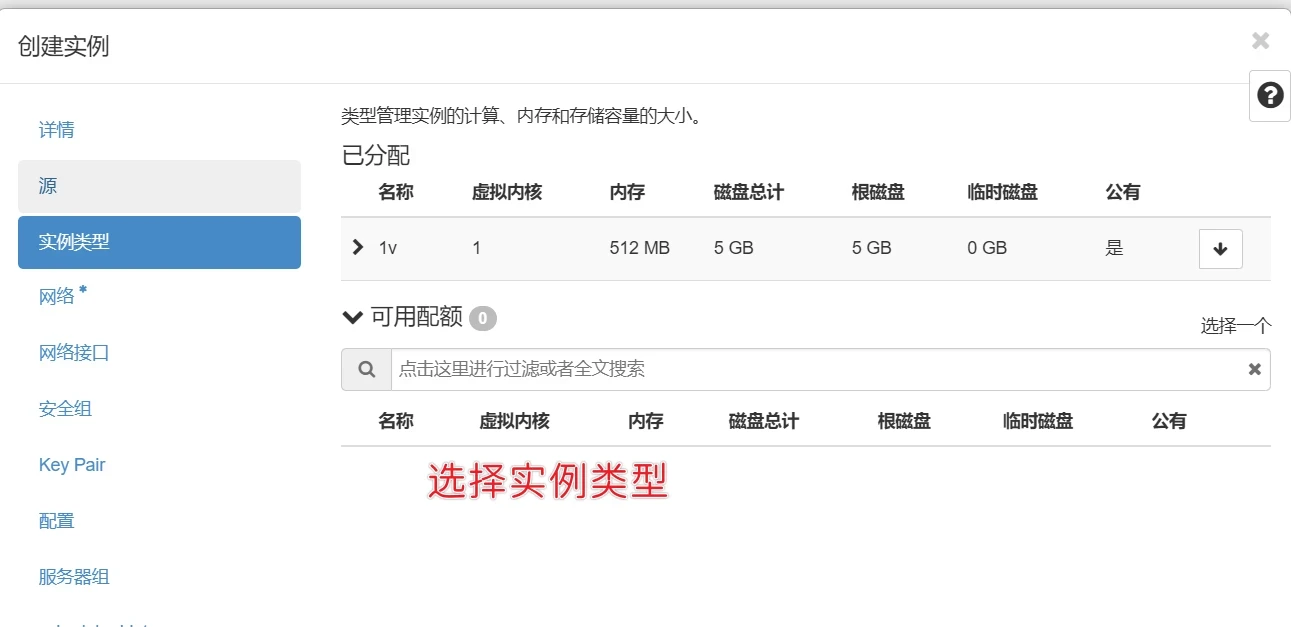

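Create a flavor

In the left-hand menu select Admin, click Compute, click Flavors, then click Create Flavor on the right.

Following the steps in the screenshots above, fill in the name, the number of VCPUs, the RAM size, and the root disk size in turn. Once everything is correct, click Create Flavor.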

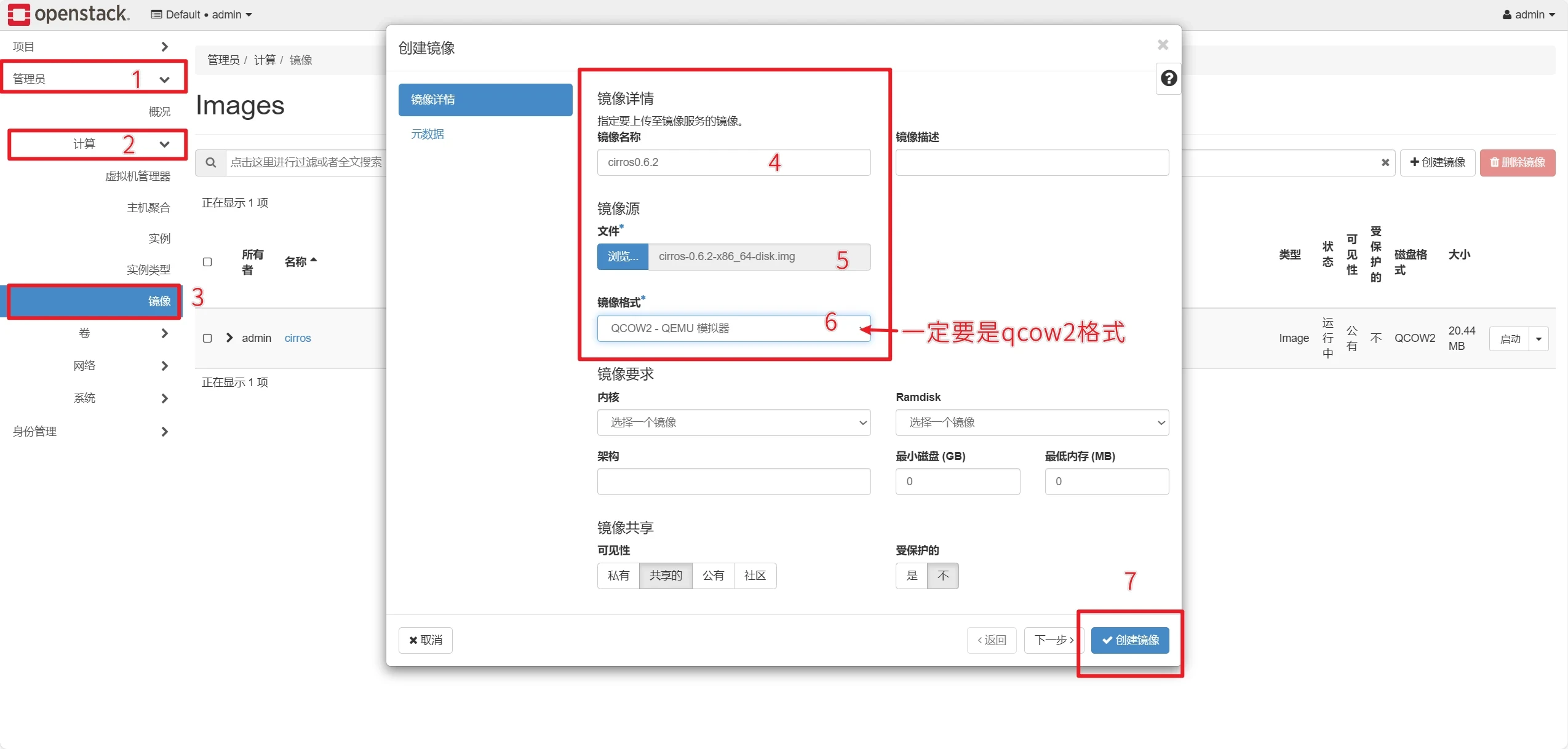

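Create an image

Test image: https://download.cirros-cloud.net/0.6.2/cirros-0.6.2-x86_64-disk.img

There are two ways to upload the image; choose one.

1. Upload from Windows

In the left-hand menu select Admin, click Compute, click Images, then click Create Image on the right.

On Windows, simply download the image to the local machine first.

Following the steps in the screenshots above, fill in the image name, select the file, and choose the image format. Once everything is correct, click Create Image. Note: the img file uploaded in this demonstration must use the format QCOW2 - QEMU Emulator in order to load properly.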

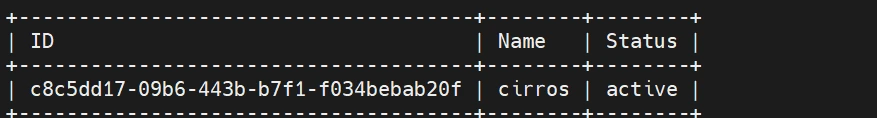

2. Upload from Linux

    source admin-openrc

    wget https://download.cirros-cloud.net/0.6.2/cirros-0.6.2-x86_64-disk.img
    # If the download fails, copy the link into a browser, download the file
    # there, then move it to /root/

    glance image-create --name "cirros" \
      --file cirros-0.6.2-x86_64-disk.img \
      --disk-format qcow2 --container-format bare \
      --visibility=public

    openstack image list

    [root@controller-1 ~]# openstack image list
    +--------------------------------------+--------+--------+
    | ID                                   | Name   | Status |
    +--------------------------------------+--------+--------+
    | 627761da-7f8c-4780-842a-e50e62f5c464 | cirros | active |
    +--------------------------------------+--------+--------+

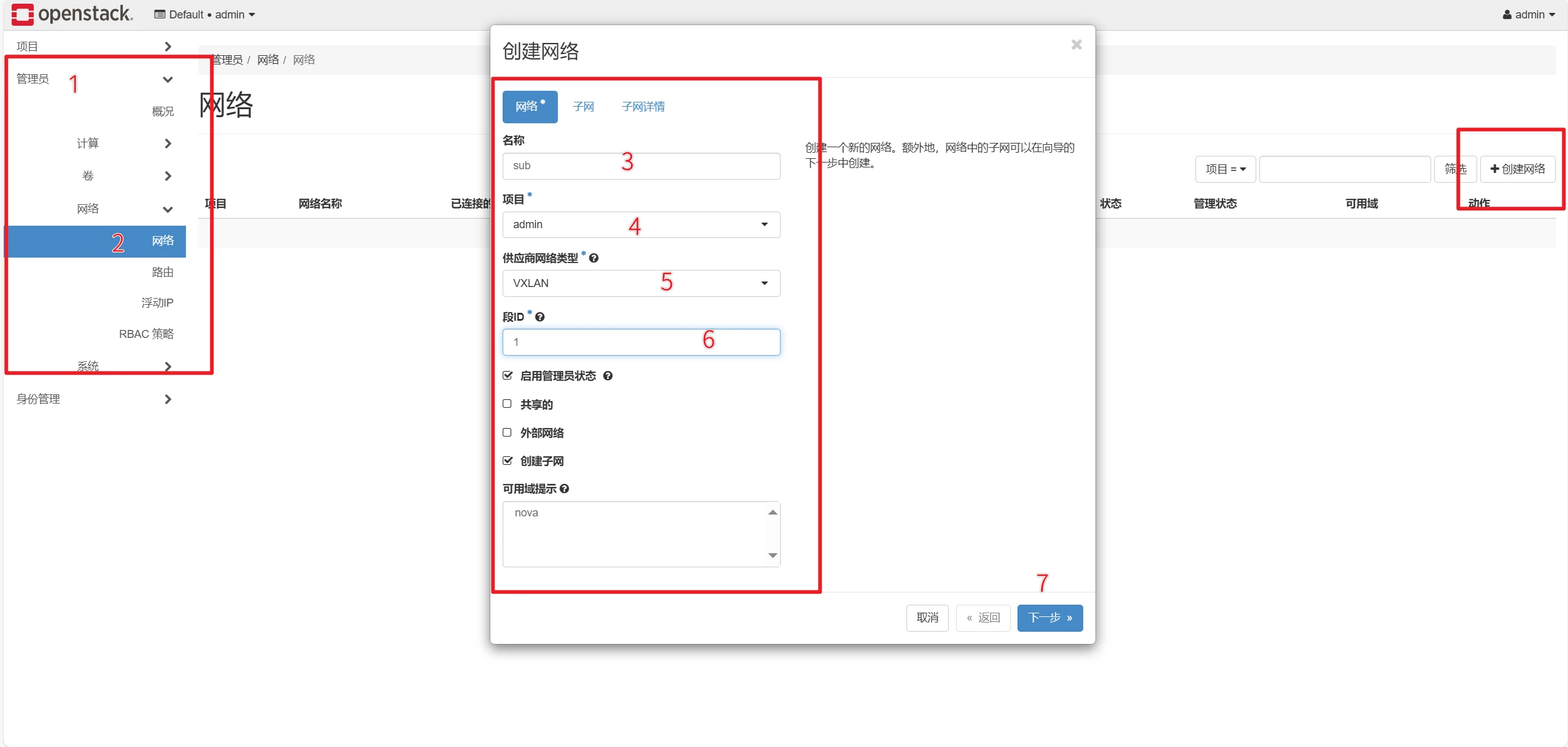

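Create the internal network

In the left-hand menu select Admin, click Network, click Networks, then click Create Network on the right.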

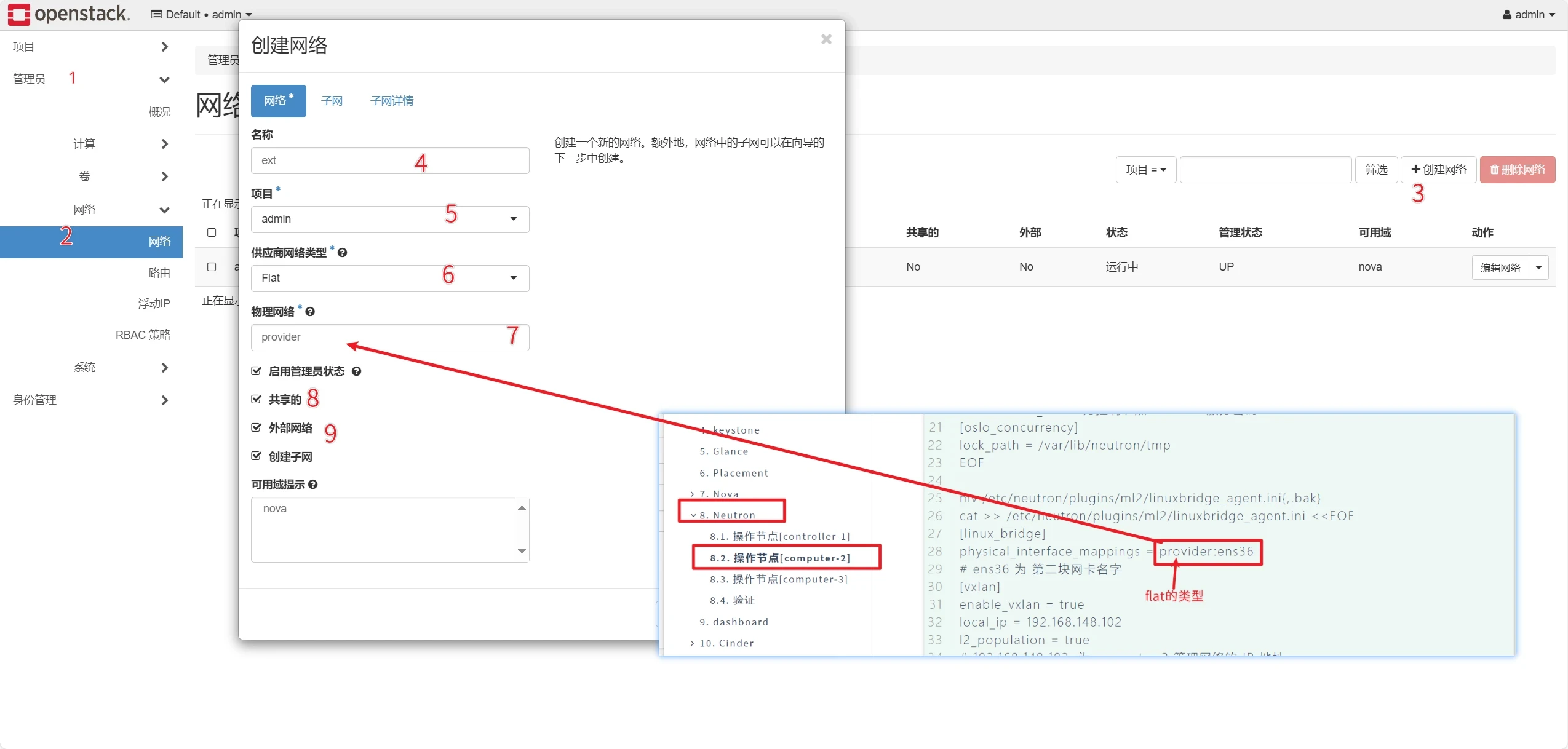

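Create the external network

In the left-hand menu select Admin, click Network, click Networks, then click Create Network on the right.

If you built the environment by following this guide, enter provider as the physical network.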

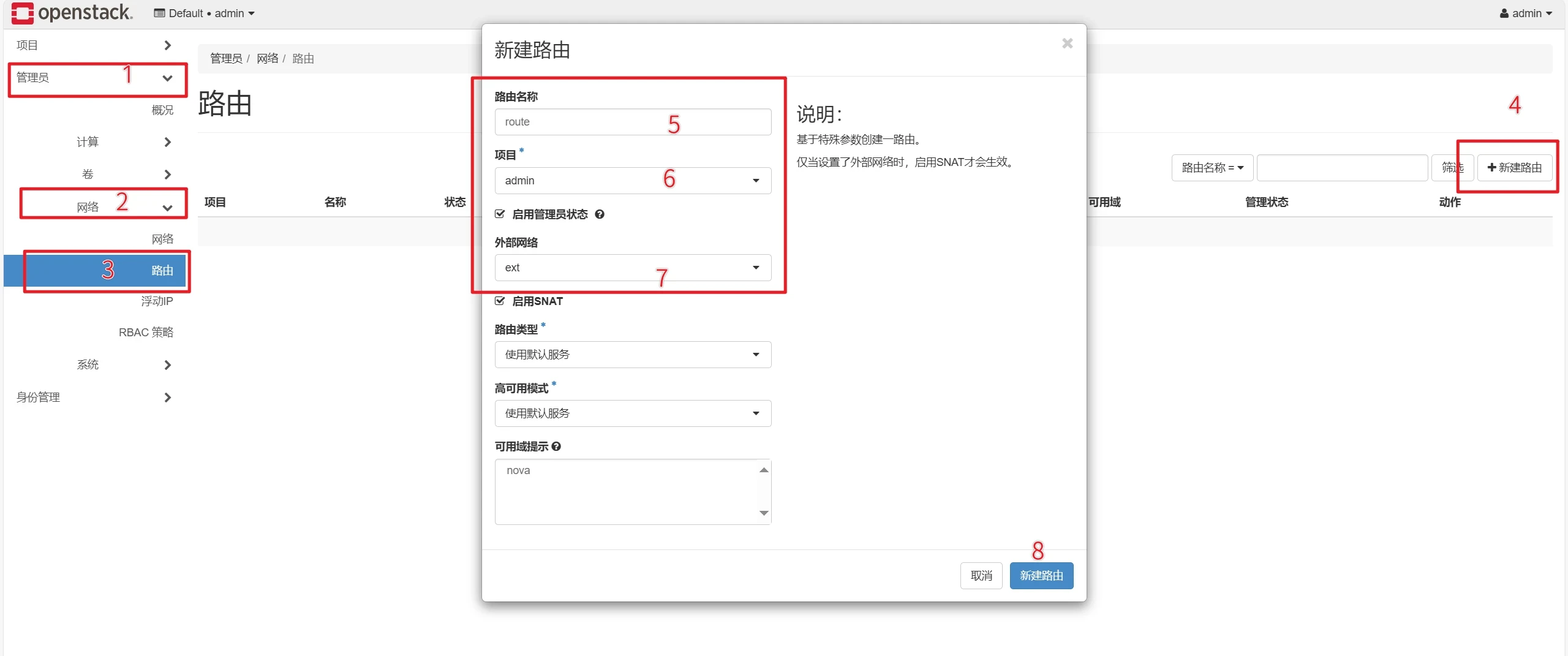

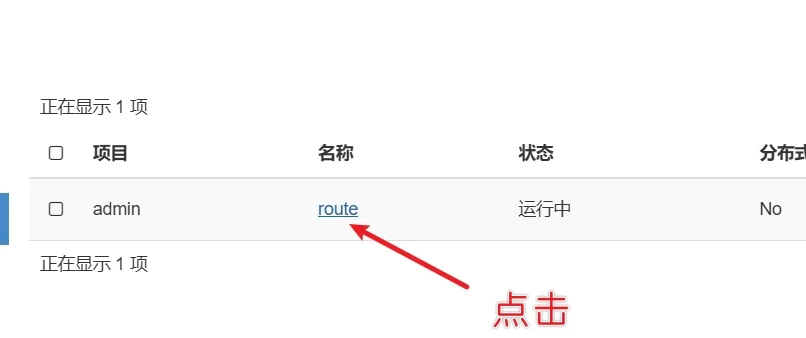

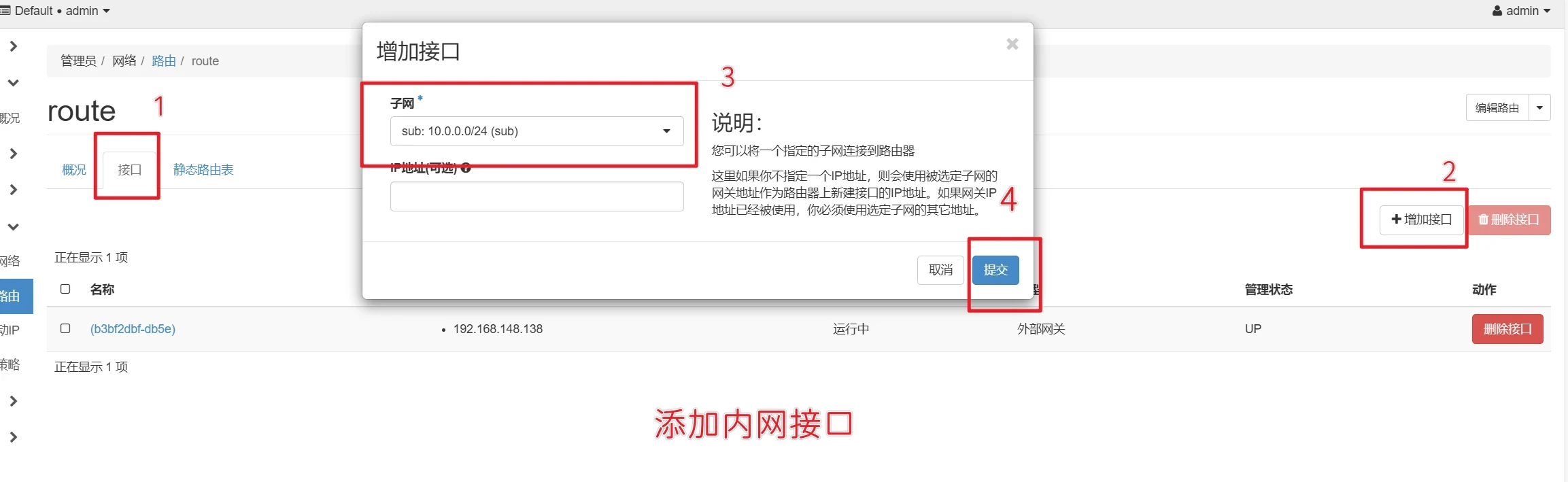

Create a router

In the left-hand menu select Admin, click Network, click Routers, then click Create Router on the right.

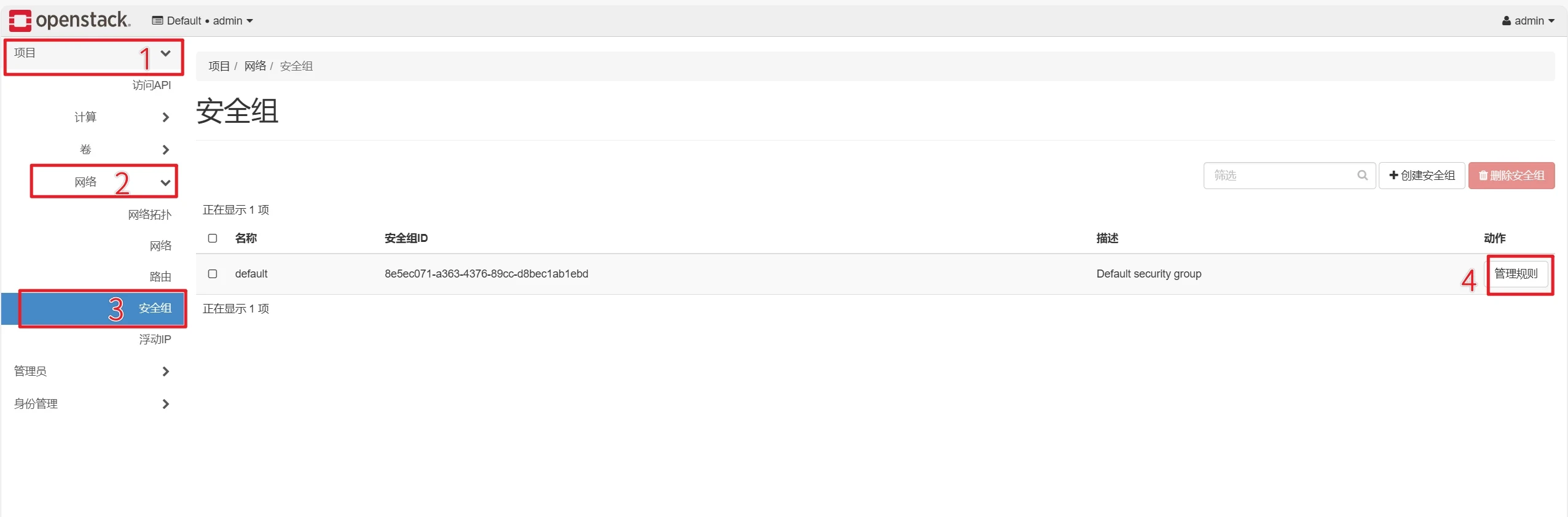

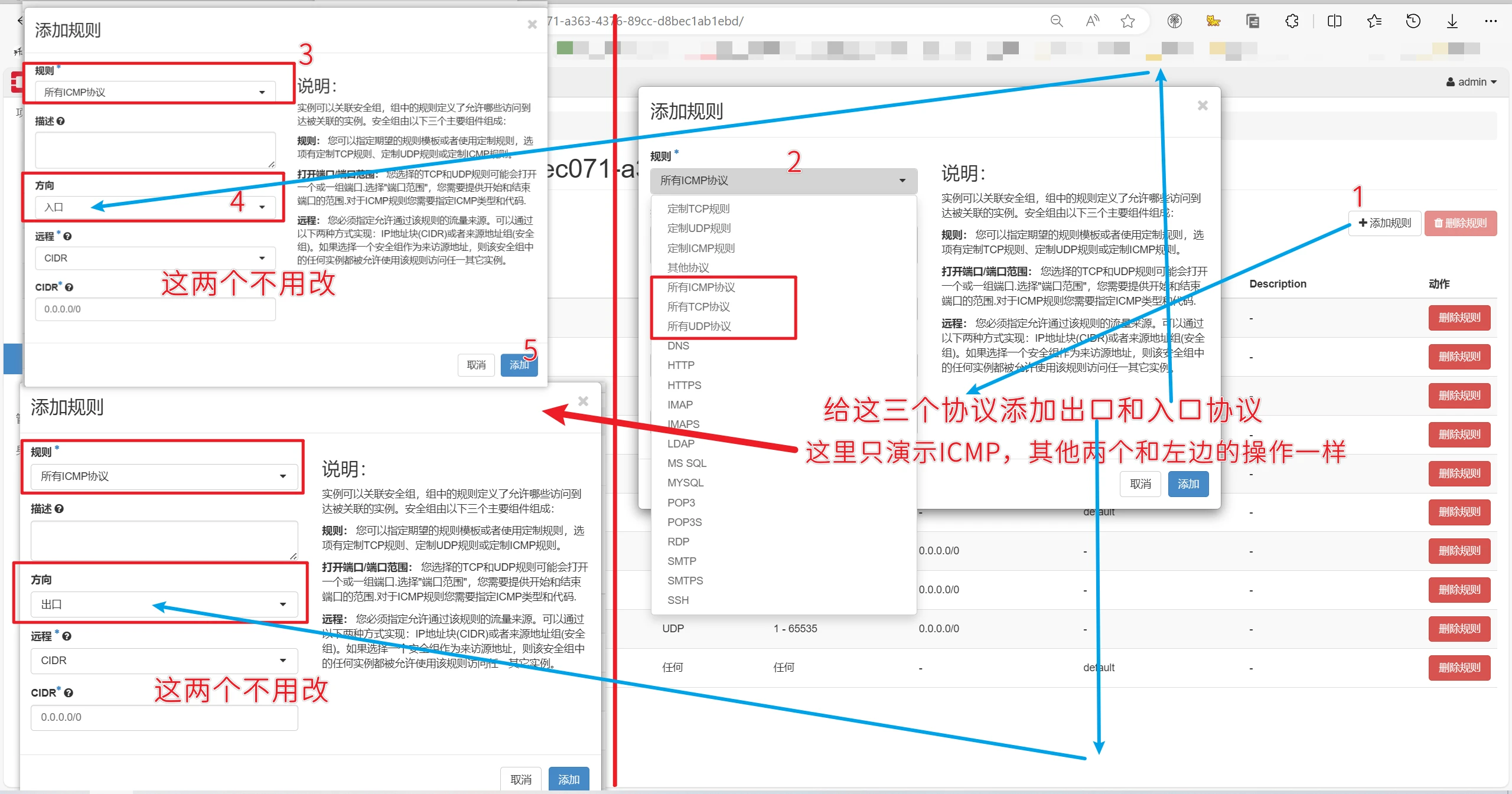

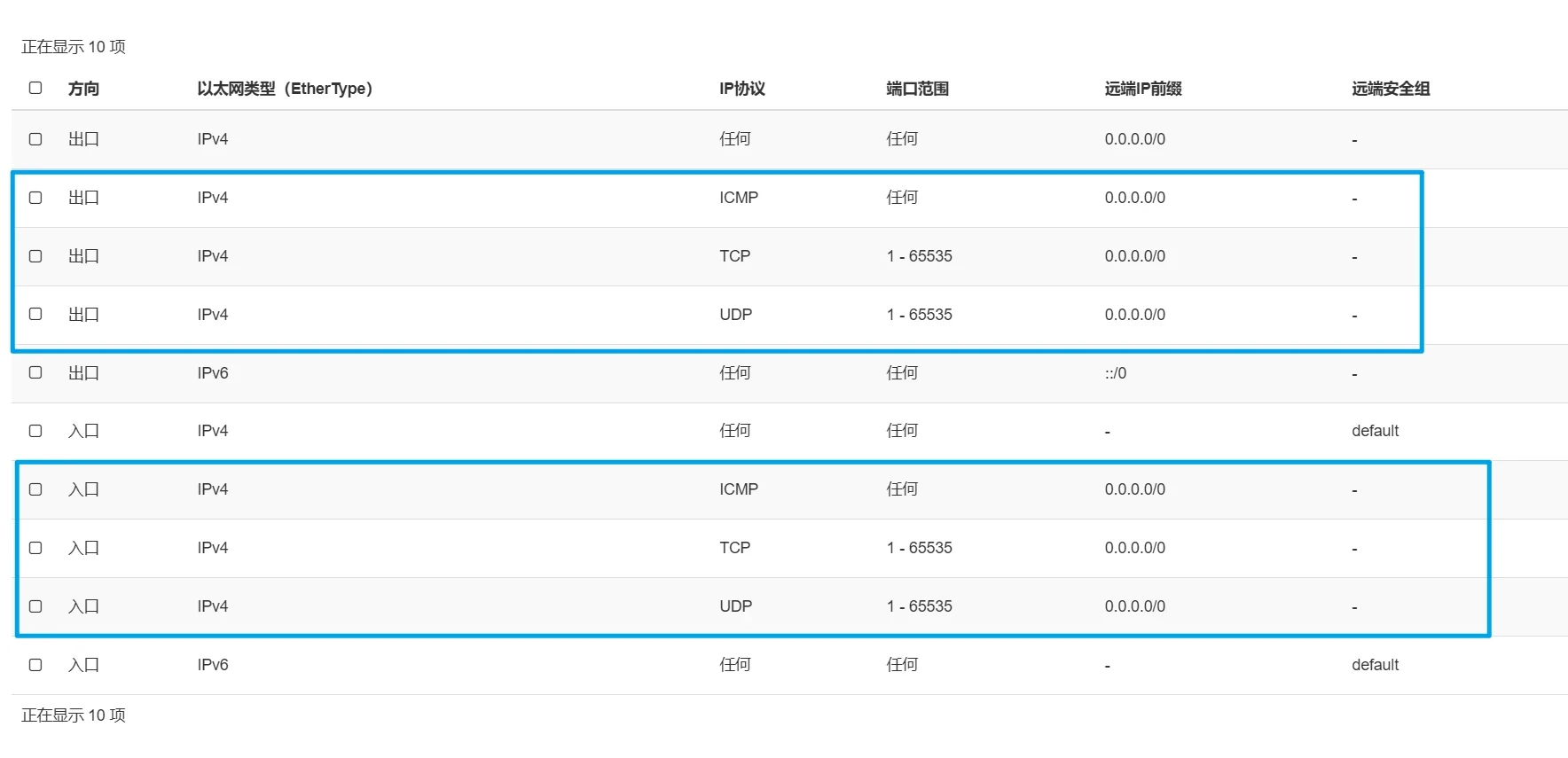

Add security group rules

The final result looks like this:

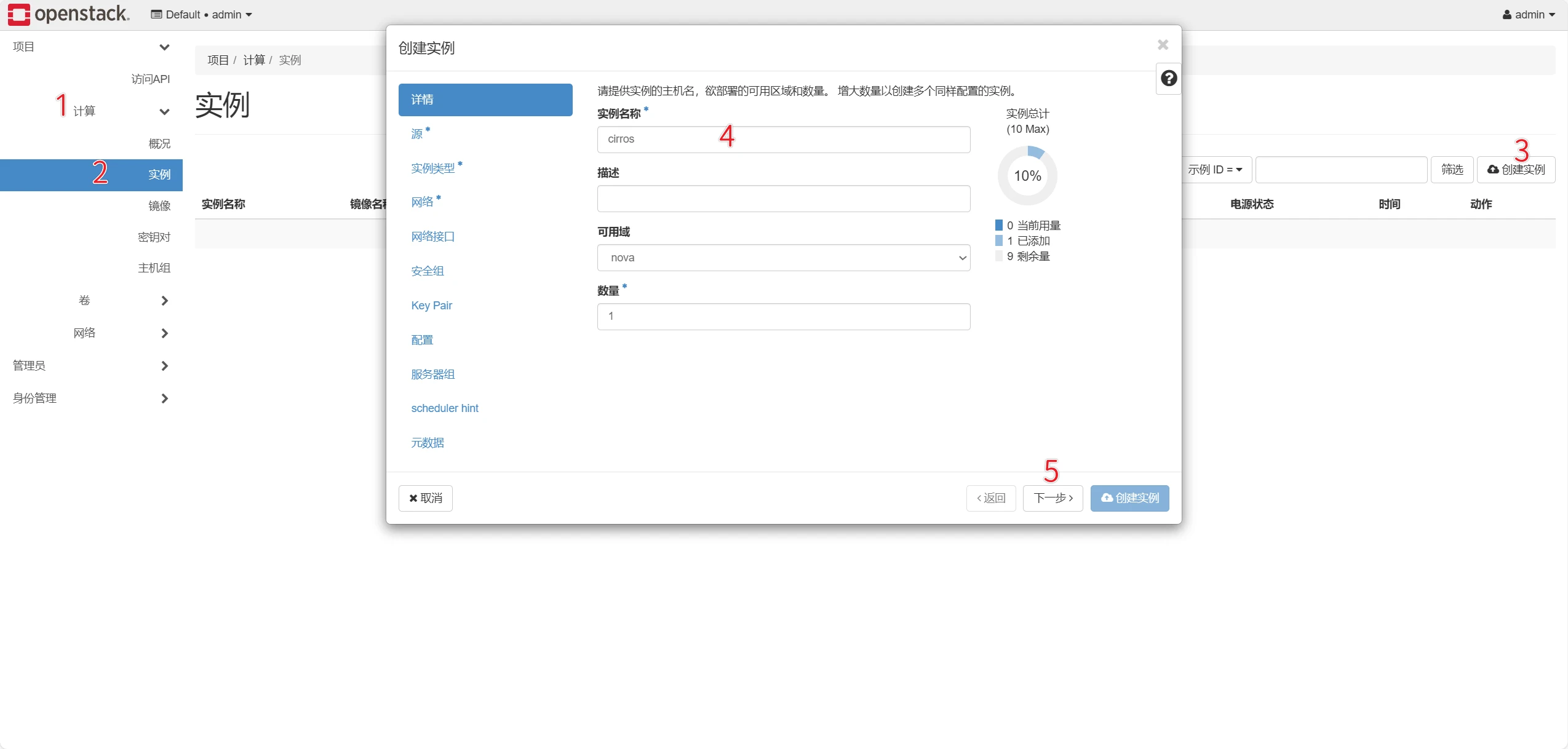

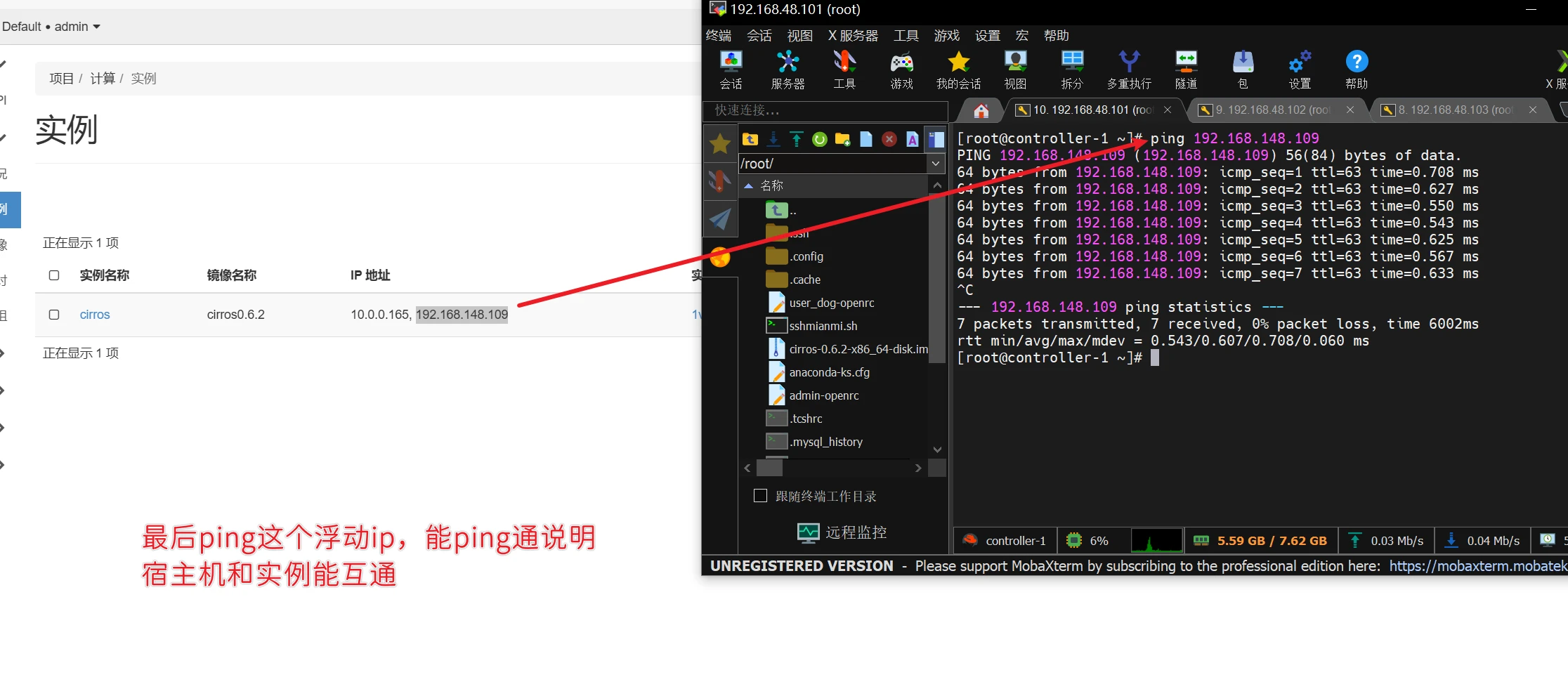

Launch the instance

Then click Launch Instance.

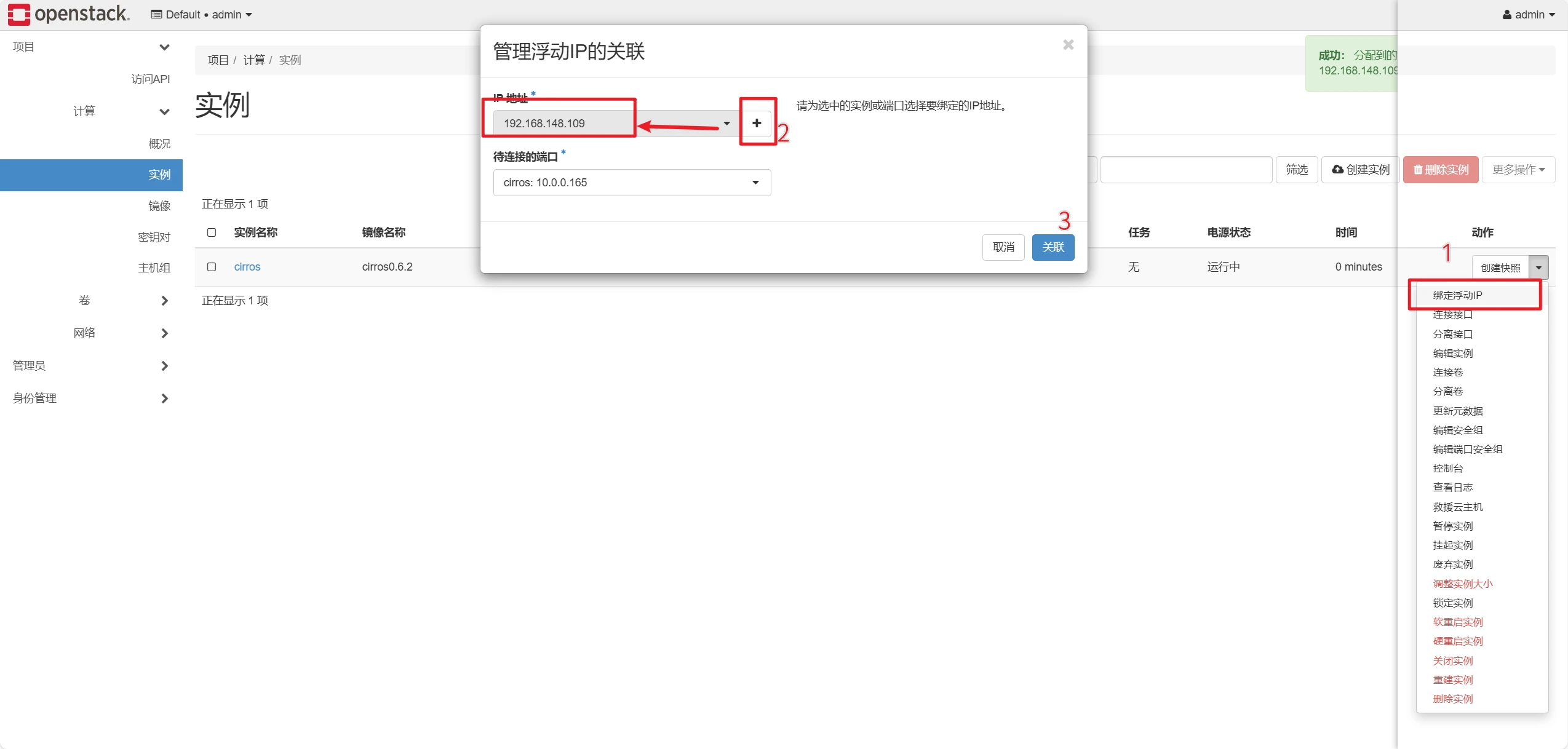

Allocate a floating IP

Result: the instance was created successfully.
