OpenEuler: Deploying a Highly Available K8S Cluster (Internal etcd)

Host topology

| Hostname | IP 1 (NAT) | OS | Disk | Memory |
| --- | --- | --- | --- | --- |
| master1 | 192.168.48.101 | OpenEuler-22.03-LTS | 100G | 4G |
| master2 | 192.168.48.102 | OpenEuler-22.03-LTS | 100G | 4G |
| master3 | 192.168.48.103 | OpenEuler-22.03-LTS | 100G | 4G |
| node01 | 192.168.48.104 | OpenEuler-22.03-LTS | 100G | 8G |

Image download: OpenEuler-22.03-LTS

Download the file named openEuler-22.03-LTS-SP4-x86_64-dvd.iso

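Basic configuration

Each OpenEuler host is installed separately, not cloned. After installation, configure the basic environment. The script below covers the following:

- Set the hostname
- Disable firewalld, dnsmasq and selinux
- Configure ens33
- Back up the existing repos and add the docker-ce and k8s repos
- Refresh the yum package cache
- Add hosts entries
- Disable the swap partition
- Install the chrony service and synchronize time
- Configure limits.conf
- Install required tools
- Reboot the system

Target hosts: [master1, master2, master3, node01]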

    # Paste the script below into this file
    vi k8s_system_init.sh

Script contents:

    #!/bin/bash
    if [ $# -eq 2 ]; then
      echo "Hostname will be set to: $1"
      echo "ens33 IP address will be set to: 192.168.48.$2"
    else
      echo "Usage: sh $0 <hostname> <host-octet>"
      exit 2
    fi

    echo "--------------------------------------"

    echo "1. Setting hostname: $1"
    hostnamectl set-hostname "$1"

    echo "2. Disabling firewalld, dnsmasq and selinux"
    systemctl disable firewalld &> /dev/null
    systemctl disable dnsmasq &> /dev/null
    systemctl stop firewalld
    systemctl stop dnsmasq
    sed -i "s#SELINUX=enforcing#SELINUX=disabled#g" /etc/selinux/config
    setenforce 0

    echo "3. Configuring ens33: 192.168.48.$2"
    cat > /etc/sysconfig/network-scripts/ifcfg-ens33 <<EOF
    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=static
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_FAILURE_FATAL=no
    NAME=ens33
    UUID=53b402ff-5865-47dd-a853-7afcd6521738
    DEVICE=ens33
    ONBOOT=yes
    IPADDR=192.168.48.$2
    GATEWAY=192.168.48.2
    PREFIX=24
    DNS1=192.168.48.2
    DNS2=114.114.114.114
    EOF
    nmcli c reload
    nmcli c up ens33

    echo "4. Adding the docker-ce and k8s repos"
    mkdir -p /etc/yum.repos.d/bak/
    cp /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/
    sleep 3
    cat > /etc/yum.repos.d/kubernetes.repo <<EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
    EOF
    # Switch to the Huawei Cloud mirror for faster downloads
    sed -i 's/\$basearch/x86_64/g' /etc/yum.repos.d/openEuler.repo
    sed -i 's/http\:\/\/repo.openeuler.org/https\:\/\/mirrors.huaweicloud.com\/openeuler/g' /etc/yum.repos.d/openEuler.repo
    curl -o /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    sed -i 's/\$releasever/7/g' /etc/yum.repos.d/docker-ce.repo

    echo "5. Refreshing the yum package cache"
    yum clean all && yum makecache

    echo "6. Adding hosts entries"
    cat > /etc/hosts <<EOF
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.48.101 master1
    192.168.48.102 master2
    192.168.48.103 master3
    192.168.48.104 node01
    192.168.48.105 node02
    EOF

    echo "7. Disabling the swap partition"
    swapoff -a && sysctl -w vm.swappiness=0 &> /dev/null
    sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

    echo "8. Installing chrony and synchronizing time"
    yum install chrony -y
    systemctl start chronyd
    systemctl enable chronyd
    chronyc sources

    echo "9. Configuring limits.conf"
    ulimit -SHn 65535
    cat >> /etc/security/limits.conf <<EOF
    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 65535
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited
    EOF

    echo "10. Installing required tools"
    yum install wget psmisc vim net-tools telnet device-mapper-persistent-data lvm2 git -y

    echo "11. Rebooting"
    reboot

Run the script on each host, passing the hostname and the last octet of its IP address:

    sh k8s_system_init.sh <hostname> <host-octet>

    # [master1]
    sh k8s_system_init.sh master1 101

    # [master2]
    sh k8s_system_init.sh master2 102

    # [master3]
    sh k8s_system_init.sh master3 103

    # [node01]
    sh k8s_system_init.sh node01 104
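The init script only checks that two arguments were passed, so a mistyped host octet would be written straight into the ens33 configuration. As a minimal sketch, a guard like the following could be added near the top of the script. The function name `valid_host_octet` is hypothetical and not part of the original script.

```shell
#!/bin/bash
# Hypothetical helper (not part of the original script): succeed only if
# the argument is an integer usable as the last octet of a host address.
valid_host_octet() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # empty, or contains a non-digit
    esac
    # At most three digits, and within 1..254 (0 and 255 are not host addresses in a /24).
    [ "${#1}" -le 3 ] && [ "$1" -ge 1 ] && [ "$1" -le 254 ]
}
```

Inside the script it could be used as `valid_host_octet "$2" || { echo "invalid host octet: $2" >&2; exit 2; }`.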

Configure passwordless SSH

Target node: [master1]

    yum install -y sshpass
    cat > sshmianmi.sh << "EOF"
    #!/bin/bash
    # List of target hosts
    hosts=("master1" "master2" "master3" "node01")
    # Root password of the target hosts (replace with your own)
    password="Lj201840."
    # Generate an SSH key pair
    ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa

    # Loop over the target hosts
    for host in "${hosts[@]}"
    do
        # Copy the public key to the target host
        sshpass -p "$password" ssh-copy-id -o StrictHostKeyChecking=no "$host"

        # Verify passwordless login
        sshpass -p "$password" ssh -o StrictHostKeyChecking=no "$host" "echo 'Passwordless login succeeded'"
    done
    EOF

    bash sshmianmi.sh

Kernel and ipvs module configuration

This step configures the ipvs modules and enables the kernel parameters that a k8s cluster requires. It covers the following:

- Change the kernel boot order
- Install ipvsadm
- Configure the ipvs modules
- Enable the kernel parameters required by the k8s cluster
- Reboot the server once the kernel is configured

Target hosts: [master1, master2, master3, node01]

    #!/bin/bash

    echo "1. Changing the kernel boot order"
    grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg
    grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

    echo "2. Installing ipvsadm"
    yum install ipvsadm ipset sysstat conntrack libseccomp -y &> /dev/null

    echo "3. Configuring the ipvs modules"
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack
    # br_netfilter provides the net.bridge.* sysctls set in step 4
    modprobe -- br_netfilter

    cat > /etc/modules-load.d/ipvs.conf <<EOF
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    nf_conntrack
    br_netfilter
    ip_tables
    ip_set
    xt_set
    ipt_set
    ipt_rpfilter
    ipt_REJECT
    ipip
    EOF

    systemctl enable --now systemd-modules-load.service &> /dev/null

    echo "4. Enabling the kernel parameters required by the k8s cluster"
    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.ipv4.ip_nonlocal_bind = 1
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    fs.may_detach_mounts = 1
    net.ipv4.conf.all.route_localnet = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.netfilter.nf_conntrack_max=2310720
    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.tcp_keepalive_intvl = 15
    net.ipv4.tcp_max_tw_buckets = 36000
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_max_orphans = 327680
    net.ipv4.tcp_orphan_retries = 3
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 16384
    net.ipv4.ip_conntrack_max = 65536
    net.ipv4.tcp_timestamps = 0
    net.core.somaxconn = 16384
    EOF
    sysctl --system

    echo "5. Kernel configured, rebooting the server!"
    reboot

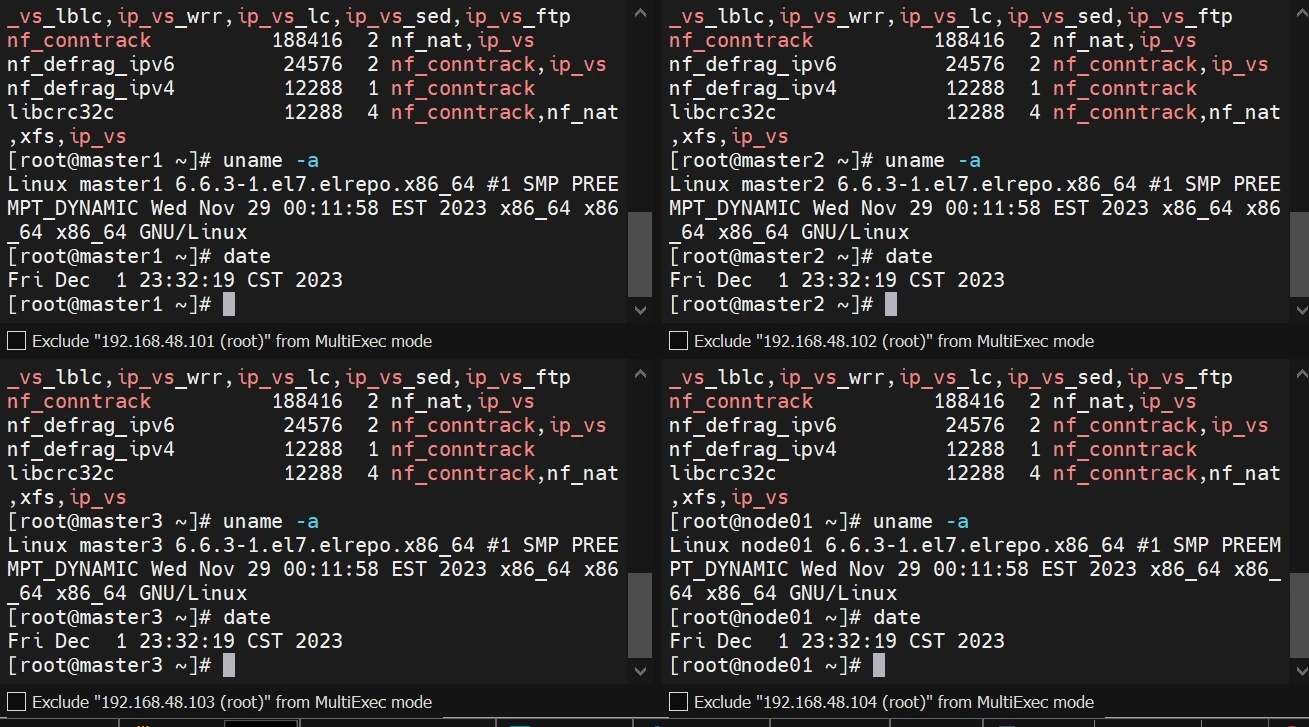

Verify that the ipvs modules are loaded and check the kernel version

    lsmod | grep --color=auto -e ip_vs -e nf_conntrack
    uname -a
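Reading the `lsmod` output by eye makes it easy to miss one module. The following is a small sketch of a check that prints only the modules that are not loaded. The function name `missing_modules` is hypothetical; it reads `lsmod` output on standard input so it can also be tried against saved output.

```shell
#!/bin/bash
# Hypothetical helper: read `lsmod` output on stdin and print every
# module named in the arguments that does not appear as loaded.
missing_modules() {
    local loaded mod
    # First column of every line except the header is the module name.
    loaded=$(awk 'NR > 1 { print $1 }')
    for mod in "$@"; do
        printf '%s\n' "$loaded" | grep -qxF -- "$mod" || printf '%s\n' "$mod"
    done
}
```

A possible invocation is `lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`; empty output means every listed module is present.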

High availability component installation

HAProxy configuration

Target nodes: [master1, master2, master3]

    yum install keepalived haproxy -y

Configure HAProxy on all master nodes. The HAProxy configuration is identical on every master.

    cat > /etc/haproxy/haproxy.cfg <<"EOF"
    global
      maxconn 2000
      ulimit-n 16384
      log 127.0.0.1 local0 err
      stats timeout 30s

    defaults
      log global
      mode http
      option httplog
      timeout connect 5000
      timeout client 50000
      timeout server 50000
      timeout http-request 15s
      timeout http-keep-alive 15s

    frontend monitor-in
      bind *:33305
      mode http
      option httplog
      monitor-uri /monitor

    frontend k8s-master
      bind 0.0.0.0:16443
      bind 127.0.0.1:16443
      mode tcp
      option tcplog
      tcp-request inspect-delay 5s
      default_backend k8s-master

    backend k8s-master
      mode tcp
      option tcplog
      option tcp-check
      balance roundrobin
      default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
      server master1 192.168.48.101:6443 check
      server master2 192.168.48.102:6443 check
      server master3 192.168.48.103:6443 check
    EOF

Keepalived configuration

Target nodes: [master1, master2, master3]

Configure Keepalived on all master nodes. The three configurations below differ per node: pay attention to the IP address (mcast_src_ip), the network interface, the state and the priority.

    # On master1
    cat > /etc/keepalived/keepalived.conf << "EOF"
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        mcast_src_ip 192.168.48.101
        virtual_router_id 51
        priority 201
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass 1111    # at most 8 characters
        }
        virtual_ipaddress {
            192.168.48.200
        }
        track_script {
            chk_apiserver
        }
    }
    EOF

    # On master2
    cat > /etc/keepalived/keepalived.conf << "EOF"
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.48.102
        virtual_router_id 51
        priority 150
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass 1111    # at most 8 characters
        }
        virtual_ipaddress {
            192.168.48.200
        }
        track_script {
            chk_apiserver
        }
    }
    EOF

    # On master3
    cat > /etc/keepalived/keepalived.conf << "EOF"
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.48.103
        virtual_router_id 51
        priority 99
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass 1111    # at most 8 characters
        }
        virtual_ipaddress {
            192.168.48.200
        }
        track_script {
            chk_apiserver
        }
    }
    EOF
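The three Keepalived files are identical apart from the state, the priority and mcast_src_ip. As a sketch of how those per-node values could be looked up in one place rather than edited by hand, consider the helper below. The function name `keepalived_params` is hypothetical; the values are the ones used in this article.

```shell
#!/bin/bash
# Hypothetical helper: print "state priority mcast_src_ip" for a master,
# using the per-node values from the three keepalived.conf files.
keepalived_params() {
    case "$1" in
        master1) echo "MASTER 201 192.168.48.101" ;;
        master2) echo "BACKUP 150 192.168.48.102" ;;
        master3) echo "BACKUP 99 192.168.48.103" ;;
        *) echo "unknown node: $1" >&2; return 1 ;;
    esac
}
```

For example, `keepalived_params "$(hostname)"` prints the three values for the current host, which can then be substituted into a single shared template.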

Configure the Keepalived health check script

Target nodes: [master1, master2, master3]

    # The heredoc delimiter is quoted, so $ is written without a backslash
    cat > /etc/keepalived/check_apiserver.sh << "EOF"
    #!/bin/bash

    err=0
    for k in $(seq 1 3)
    do
        check_code=$(pgrep haproxy)
        if [[ $check_code == "" ]]; then
            err=$(expr $err + 1)
            sleep 1
            continue
        else
            err=0
            break
        fi
    done

    if [[ $err != "0" ]]; then
        echo "Stopping keepalived due to haproxy failure."
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi
    EOF
    chmod +x /etc/keepalived/check_apiserver.sh

Start haproxy and keepalived

    # Target nodes: [master1, master2, master3]
    systemctl daemon-reload
    systemctl enable --now haproxy
    systemctl enable --now keepalived
    systemctl restart haproxy keepalived

测试集群负载均衡高可用

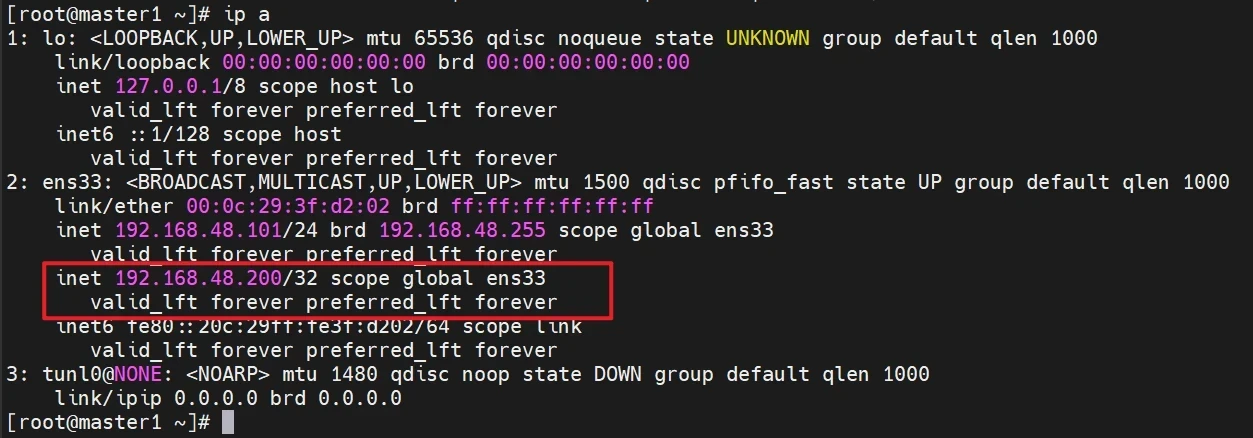

查看master1的vip

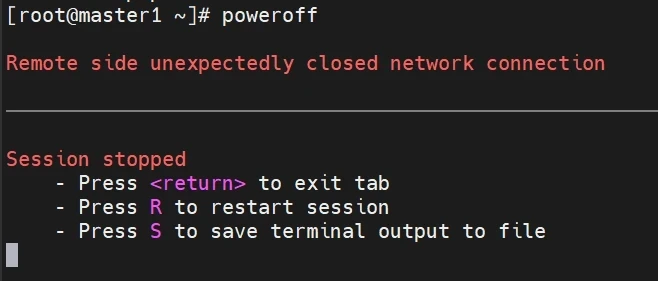

模拟master1的宕机测试,看看vip会不会漂移到master2去

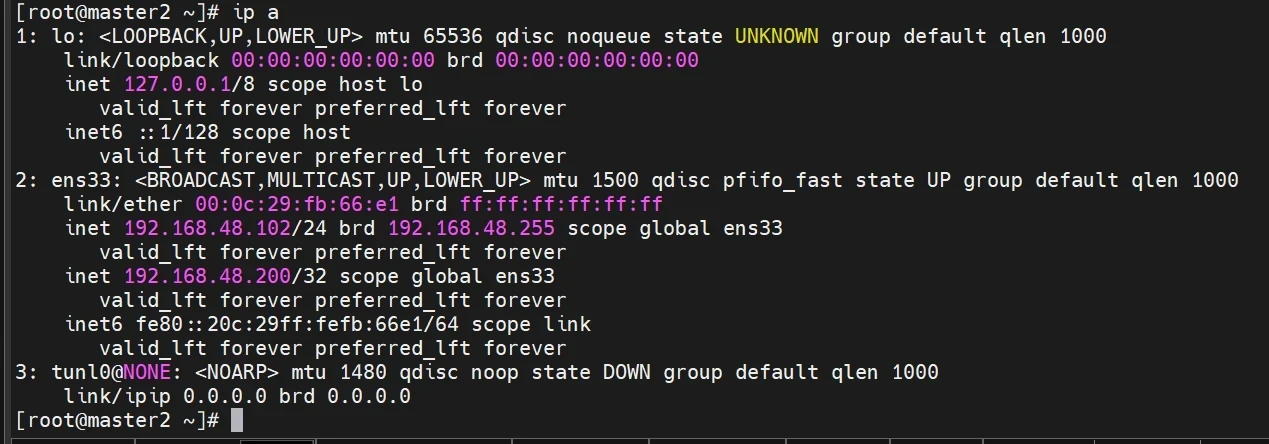

这时候查看master2的ip列表

结论:这时可以知道,负载均衡集群成功,当master1出现宕机情况,vip会从master1漂移到master2
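One way to make the VIP check repeatable, assuming the `ip` tool from iproute is used to list addresses, is to test whether 192.168.48.200 shows up in the node's IPv4 address list. This is a sketch only. The function name `holds_vip` is hypothetical; it reads `ip -4 addr show` output on standard input.

```shell
#!/bin/bash
# Hypothetical helper: read `ip -4 addr show` output on stdin and succeed
# if the given address is assigned to some interface.
holds_vip() {
    local vip=$1
    # Compare the address part of each "inet ADDR/PREFIX" line exactly,
    # so 192.168.48.20 does not match 192.168.48.200.
    awk -v vip="$vip" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }'
}
```

On a master it could be run as `ip -4 addr show ens33 | holds_vip 192.168.48.200 && echo "this node holds the VIP"`.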

Docker installation

Install docker

Target nodes: [master1, master2, master3, node01]

    wget https://download.docker.com/linux/static/stable/x86_64/docker-24.0.7.tgz
    tar xf docker-*.tgz
    cp -rf docker/* /usr/bin/

    # Create the containerd service file and start it
    cat >/etc/systemd/system/containerd.service <<EOF
    [Unit]
    Description=containerd container runtime
    Documentation=https://containerd.io
    After=network.target local-fs.target
    [Service]
    ExecStartPre=-/sbin/modprobe overlay
    ExecStart=/usr/bin/containerd
    Type=notify
    Delegate=yes
    KillMode=process
    Restart=always
    RestartSec=5
    LimitNPROC=infinity
    LimitCORE=infinity
    LimitNOFILE=1048576
    TasksMax=infinity
    OOMScoreAdjust=-999
    [Install]
    WantedBy=multi-user.target
    EOF
    systemctl enable --now containerd.service

    # Prepare the docker service file
    # (the quoted 'EOF' keeps $MAINPID literal instead of expanding it to an empty string)
    cat > /etc/systemd/system/docker.service <<'EOF'
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service containerd.service
    Wants=network-online.target
    Requires=docker.socket containerd.service
    [Service]
    Type=notify
    ExecStart=/usr/bin/dockerd --config-file=/etc/docker/daemon.json -H fd:// --containerd=/run/containerd/containerd.sock
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always
    StartLimitBurst=3
    StartLimitInterval=60s
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TasksMax=infinity
    Delegate=yes
    KillMode=process
    OOMScoreAdjust=-500
    [Install]
    WantedBy=multi-user.target
    EOF

    # Prepare the docker socket file
    cat > /etc/systemd/system/docker.socket <<EOF
    [Unit]
    Description=Docker Socket for the API
    [Socket]
    ListenStream=/var/run/docker.sock
    SocketMode=0660
    SocketUser=root
    SocketGroup=docker
    [Install]
    WantedBy=sockets.target
    EOF
    groupadd docker

    # Write daemon.json before the first start, because dockerd is
    # launched with an explicit --config-file
    mkdir -p /etc/docker
    tee /etc/docker/daemon.json > /dev/null <<'EOF'
    {
        "registry-mirrors": [
            "https://docker.xuanyuan.me",
            "https://docker.m.daocloud.io",
            "https://docker.1ms.run",
            "https://docker.1panel.live",
            "https://registry.cn-hangzhou.aliyuncs.com",
            "https://docker.qianyios.top"
        ],
        "max-concurrent-downloads": 10,
        "log-driver": "json-file",
        "log-level": "warn",
        "log-opts": {
            "max-size": "10m",
            "max-file": "3"
        },
        "data-root": "/var/lib/docker"
    }
    EOF

    systemctl daemon-reload
    systemctl enable --now docker.socket && systemctl enable --now docker.service

    # Verify
    docker info

Install cri-docker

Target nodes: [master1, master2, master3, node01]

    wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.12/cri-dockerd-0.3.12.amd64.tgz
    tar -zxvf cri-dockerd-0.3.*.amd64.tgz
    cp cri-dockerd/cri-dockerd /usr/bin/
    chmod +x /usr/bin/cri-dockerd

    # Write the service unit file
    # (the quoted 'EOF' keeps $MAINPID literal)
    cat > /usr/lib/systemd/system/cri-docker.service <<'EOF'
    [Unit]
    Description=CRI Interface for Docker Application Container Engine
    Documentation=https://docs.mirantis.com
    After=network-online.target firewalld.service docker.service
    Wants=network-online.target
    Requires=cri-docker.socket

    [Service]
    Type=notify
    ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always
    StartLimitBurst=3
    StartLimitInterval=60s
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TasksMax=infinity
    Delegate=yes
    KillMode=process

    [Install]
    WantedBy=multi-user.target
    EOF

    # Write the socket unit file
    cat > /usr/lib/systemd/system/cri-docker.socket <<'EOF'
    [Unit]
    Description=CRI Docker Socket for the API
    PartOf=cri-docker.service

    [Socket]
    ListenStream=%t/cri-dockerd.sock
    SocketMode=0660
    SocketUser=root
    SocketGroup=docker

    [Install]
    WantedBy=sockets.target
    EOF

    systemctl daemon-reload && systemctl enable cri-docker --now

K8S cluster installation

Install the tools required by k8s

    # Target nodes: [master1, master2, master3, node01]
    yum -y install kubeadm kubelet kubectl

    # Keep the cgroup driver used by docker consistent with the one used by kubelet:
    sed -i 's/^KUBELET_EXTRA_ARGS=/KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"/g' /etc/sysconfig/kubelet

    # Only enable kubelet at boot. No configuration file exists yet, so it
    # starts automatically after the cluster is initialized.
    systemctl enable kubelet.service
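Since kubelet is set to the systemd cgroup driver here, it may be worth confirming which driver Docker itself reports before initializing the cluster. `docker info` prints a `Cgroup Driver:` line; the sketch below extracts its value. The function name `cgroup_driver` is hypothetical, and it reads `docker info` output on standard input.

```shell
#!/bin/bash
# Hypothetical helper: read `docker info` output on stdin and print the
# value of its "Cgroup Driver:" line (for example systemd or cgroupfs).
cgroup_driver() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}
```

Usage would look like `docker info 2>/dev/null | cgroup_driver`. If the result is not `systemd`, the two drivers differ and the Docker or kubelet setting should be reviewed before `kubeadm init`.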

Cluster initialization

    # Target nodes: [master1, master2, master3]
    cat > kubeadm-config.yaml << EOF
    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.48.101
      bindPort: 6443
    nodeRegistration:
      criSocket: unix:///var/run/cri-dockerd.sock
      imagePullPolicy: IfNotPresent
      taints: null
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: 1.28.2
    networking:
      dnsDomain: cluster.local
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
    controlPlaneEndpoint: "192.168.48.200:16443"
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    bindAddressHardFail: false
    clientConnection:
      acceptContentTypes: ""
      burst: 0
      contentType: ""
      kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
      qps: 0
    clusterCIDR: ""
    configSyncPeriod: 0s
    conntrack:
      maxPerCore: null
      min: null
      tcpCloseWaitTimeout: null
      tcpEstablishedTimeout: null
    detectLocal:
      bridgeInterface: ""
      interfaceNamePrefix: ""
    detectLocalMode: ""
    enableProfiling: false
    healthzBindAddress: ""
    hostnameOverride: ""
    iptables:
      localhostNodePorts: null
      masqueradeAll: false
      masqueradeBit: null
      minSyncPeriod: 0s
      syncPeriod: 0s
    ipvs:
      excludeCIDRs: null
      minSyncPeriod: 0s
      scheduler: ""
      strictARP: false
      syncPeriod: 0s
      tcpFinTimeout: 0s
      tcpTimeout: 0s
      udpTimeout: 0s
    kind: KubeProxyConfiguration
    logging:
      flushFrequency: 0
      options:
        json:
          infoBufferSize: "0"
      verbosity: 0
    metricsBindAddress: ""
    mode: "ipvs"
    nodePortAddresses: null
    oomScoreAdj: null
    portRange: ""
    showHiddenMetricsForVersion: ""
    winkernel:
      enableDSR: false
      forwardHealthCheckVip: false
      networkName: ""
      rootHnsEndpointName: ""
      sourceVip: ""
    ---
    apiVersion: kubelet.config.k8s.io/v1beta1
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 0s
        enabled: true
      x509:
        clientCAFile: /etc/kubernetes/pki/ca.crt
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 0s
        cacheUnauthorizedTTL: 0s
    cgroupDriver: systemd
    clusterDNS:
    - 10.96.0.10
    clusterDomain: cluster.local
    containerRuntimeEndpoint: ""
    cpuManagerReconcilePeriod: 0s
    evictionPressureTransitionPeriod: 0s
    fileCheckFrequency: 0s
    healthzBindAddress: 127.0.0.1
    healthzPort: 10248
    httpCheckFrequency: 0s
    imageMinimumGCAge: 0s
    kind: KubeletConfiguration
    logging:
      flushFrequency: 0
      options:
        json:
          infoBufferSize: "0"
      verbosity: 0
    memorySwap: {}
    nodeStatusReportFrequency: 0s
    nodeStatusUpdateFrequency: 0s
    rotateCertificates: true
    runtimeRequestTimeout: 0s
    shutdownGracePeriod: 0s
    shutdownGracePeriodCriticalPods: 0s
    staticPodPath: /etc/kubernetes/manifests
    streamingConnectionIdleTimeout: 0s
    syncFrequency: 0s
    volumeStatsAggPeriod: 0s
    EOF

    kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

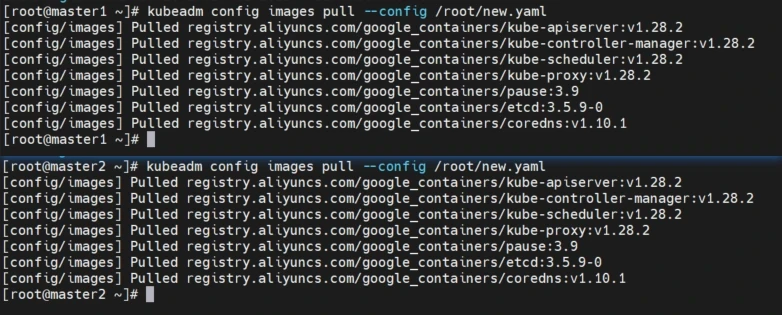

Pull the images required by k8s

    # Target nodes: [master1, master2, master3]
    kubeadm config images pull --config /root/new.yaml

Initialize the master1 node

Target node: [master1]

    kubeadm init --config /root/new.yaml --upload-certs

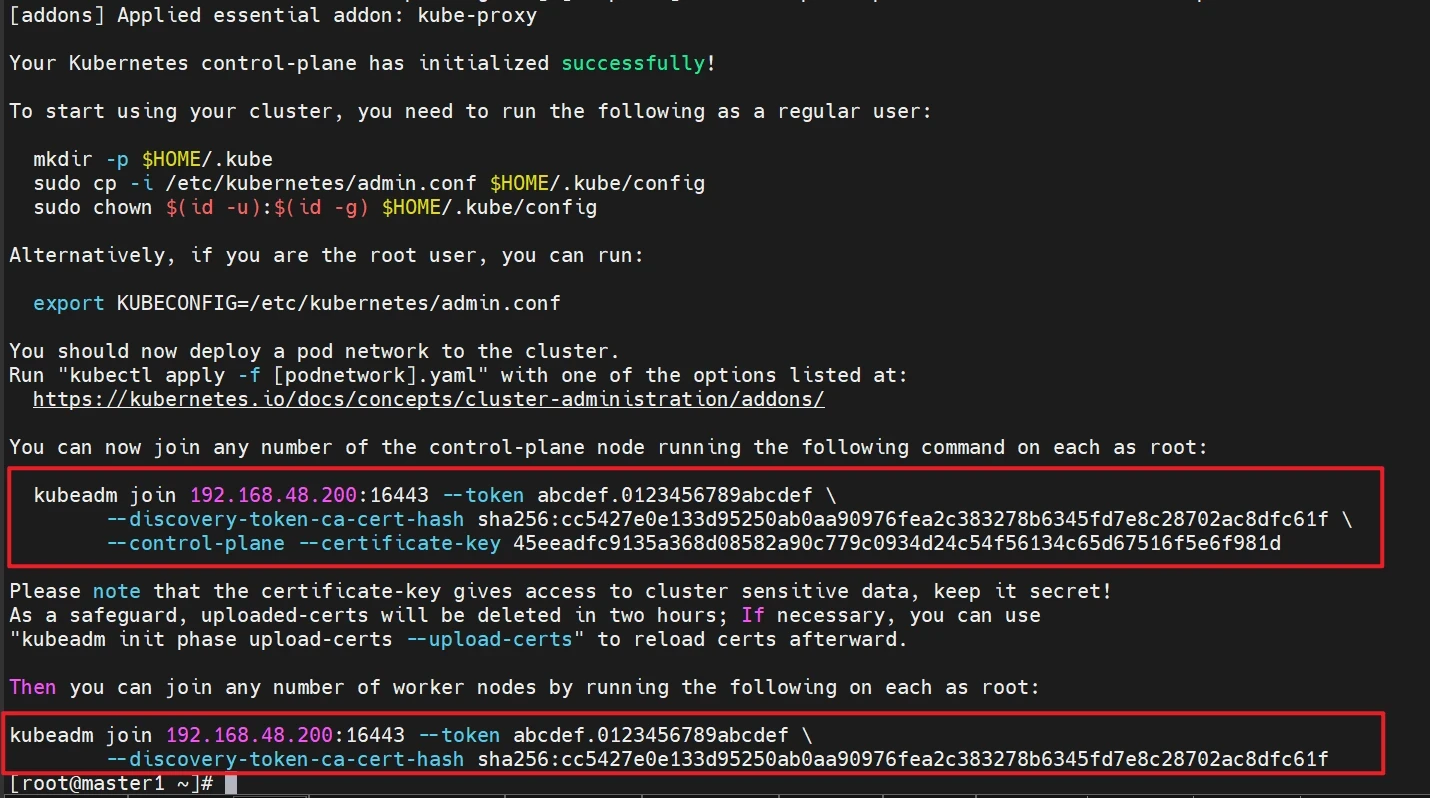

The command prints join information.

Record it; it is needed later.

After a successful initialization, a token is generated that other nodes use to join the cluster, so record the token printed by the init. It is valid for 24 hours; a new token can be generated later if needed.

Output on [master1]:

    kubeadm join 192.168.48.200:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:fd91b75286ce9d50c840f380117e25961ad743a8962f3a9f2c80d74c928e1497 \
        --control-plane --certificate-key 0dfa57d943f4a2b8b55840d17f67de731b137e11ca4720f7bf9bfd75d8247e22

    kubeadm join 192.168.48.200:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:fd91b75286ce9d50c840f380117e25961ad743a8962f3a9f2c80d74c928e1497

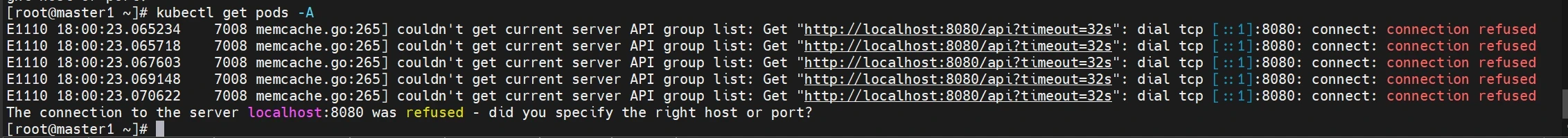

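kubectl reports an error:

At this point kubectl commands fail, because the root user has not yet been given the "key" to the cluster. /etc/kubernetes/admin.conf is the administrator configuration file of the Kubernetes (K8s) cluster; it holds the authentication and access information used to manage the cluster. Configure it as follows so that kubectl can reach the cluster:

Add the kubeconfig

Target node: [master1]

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config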

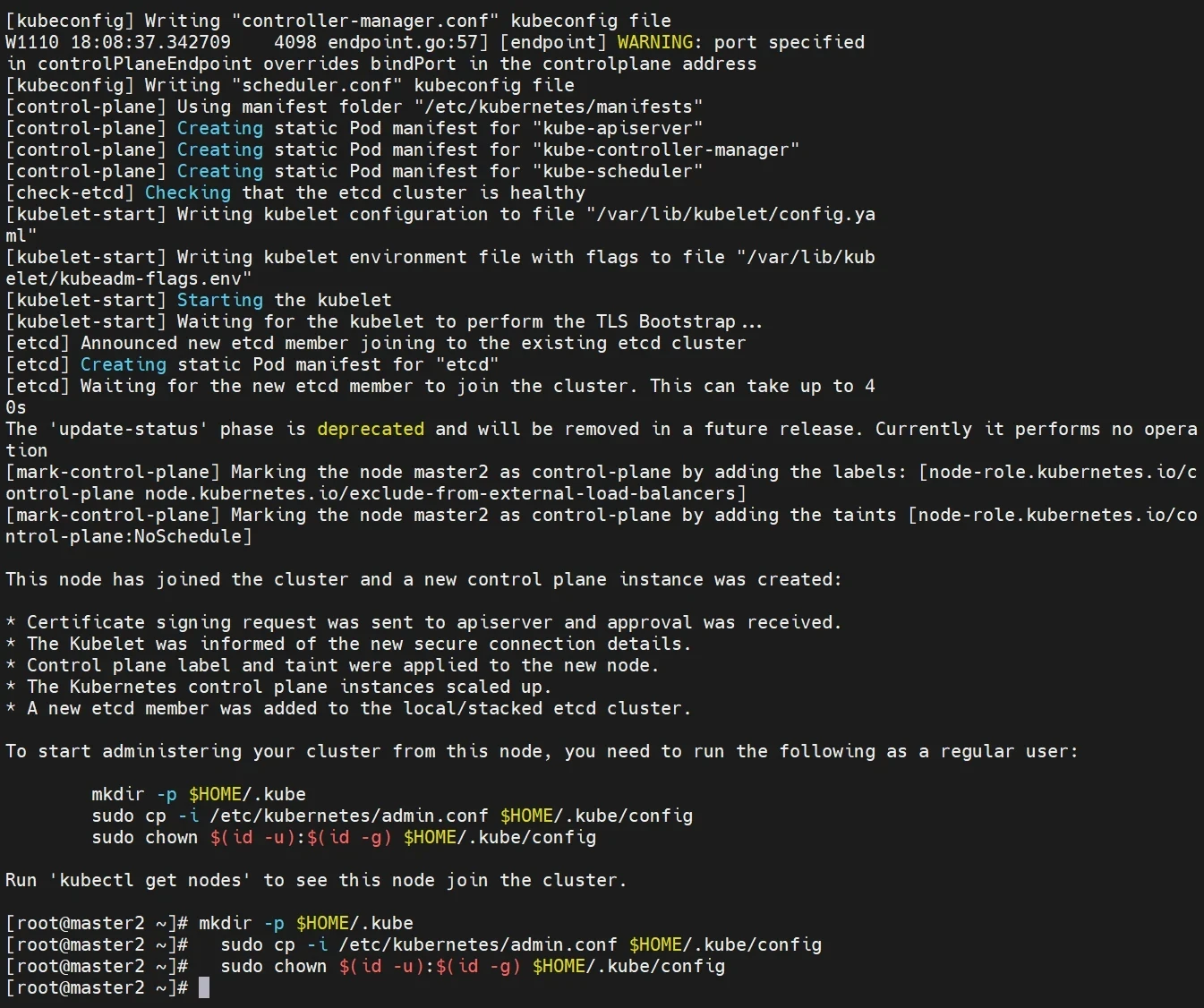

Add the other master nodes to the cluster

Target nodes: [master2, master3]

    kubeadm join 192.168.48.200:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:fd91b75286ce9d50c840f380117e25961ad743a8962f3a9f2c80d74c928e1497 \
        --control-plane --certificate-key 0dfa57d943f4a2b8b55840d17f67de731b137e11ca4720f7bf9bfd75d8247e22 \
        --cri-socket unix:///var/run/cri-dockerd.sock

Note: --cri-socket unix:///var/run/cri-dockerd.sock has been appended to the end of the command.

Then add the kubeconfig on master2 and master3

    # Target nodes: [master2, master3]
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

No screenshot is shown for master3, but the steps are the same.

Simulate an expired token: regenerate it and join a Node

Suppose the token for joining the cluster has expired and node01 can no longer join. This section simulates that situation.

After the token expires, generate a new one:

    kubeadm token create --print-join-command

Example output:

    [root@master1 ~]# kubeadm token create --print-join-command
    kubeadm join 192.168.48.200:16443 --token tn5q1b.7w1jj77ewup7k2in --discovery-token-ca-cert-hash sha256:cc5427e0e133d95250ab0aa90976fea2c383278b6345fd7e8c28702ac8dfc61f

Here 192.168.48.200:16443 is the address and port of the Kubernetes API server (the VIP and the HAProxy port), tn5q1b.7w1jj77ewup7k2in is the new token, and sha256:cc5427e0e133d95250ab0aa90976fea2c383278b6345fd7e8c28702ac8dfc61f is the hash of the cluster CA certificate's public key, which the joining node uses to verify the control plane.

Joining a master additionally requires generating a --certificate-key:

    kubeadm init phase upload-certs --upload-certs

Example output:

    [root@master1 ~]# kubeadm init phase upload-certs --upload-certs
    W1110 18:12:03.426245   10185 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://dl.k8s.io/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)
    W1110 18:12:03.426320   10185 version.go:105] falling back to the local client version: v1.28.2
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key:
    5d3706028d5e569324a4c456c81ae0f5551ece88b3132f03917668c6b0605128

Here 5d3706028d5e569324a4c456c81ae0f5551ece88b3132f03917668c6b0605128 is the certificate key.

生成新的Token用于集群添加新Node节点

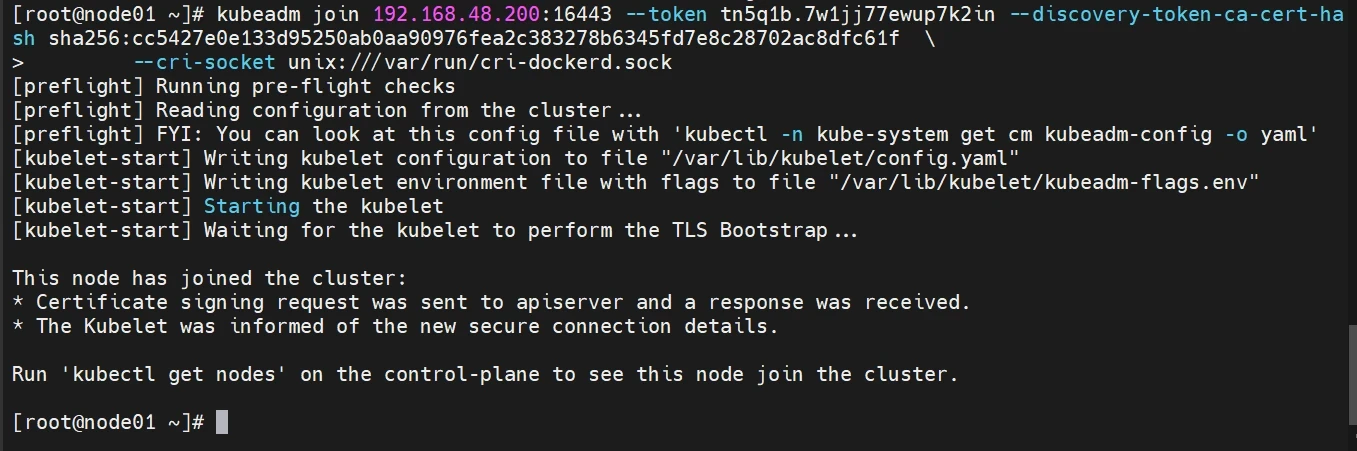

操作节点[node01]

1 2 kubeadm join 192.168.48.200:16443 --token tn5q1b.7w1jj77ewup7k2in --discovery-token-ca-cert-hash sha256:cc5427e0e133d95250ab0aa90976fea2c383278b6345fd7e8c28702ac8dfc61f \ --cri-socket unix:///var/run/cri-dockerd.sock

注意:这里末尾添加了--cri-socket unix:///var/run/cri-dockerd.sock

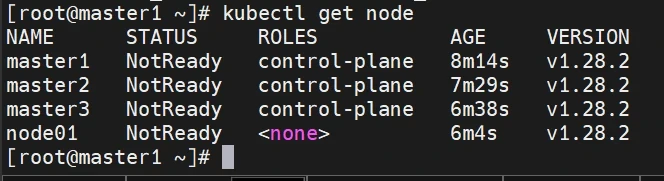

这时在master查看node状态(显示为notready不影响)

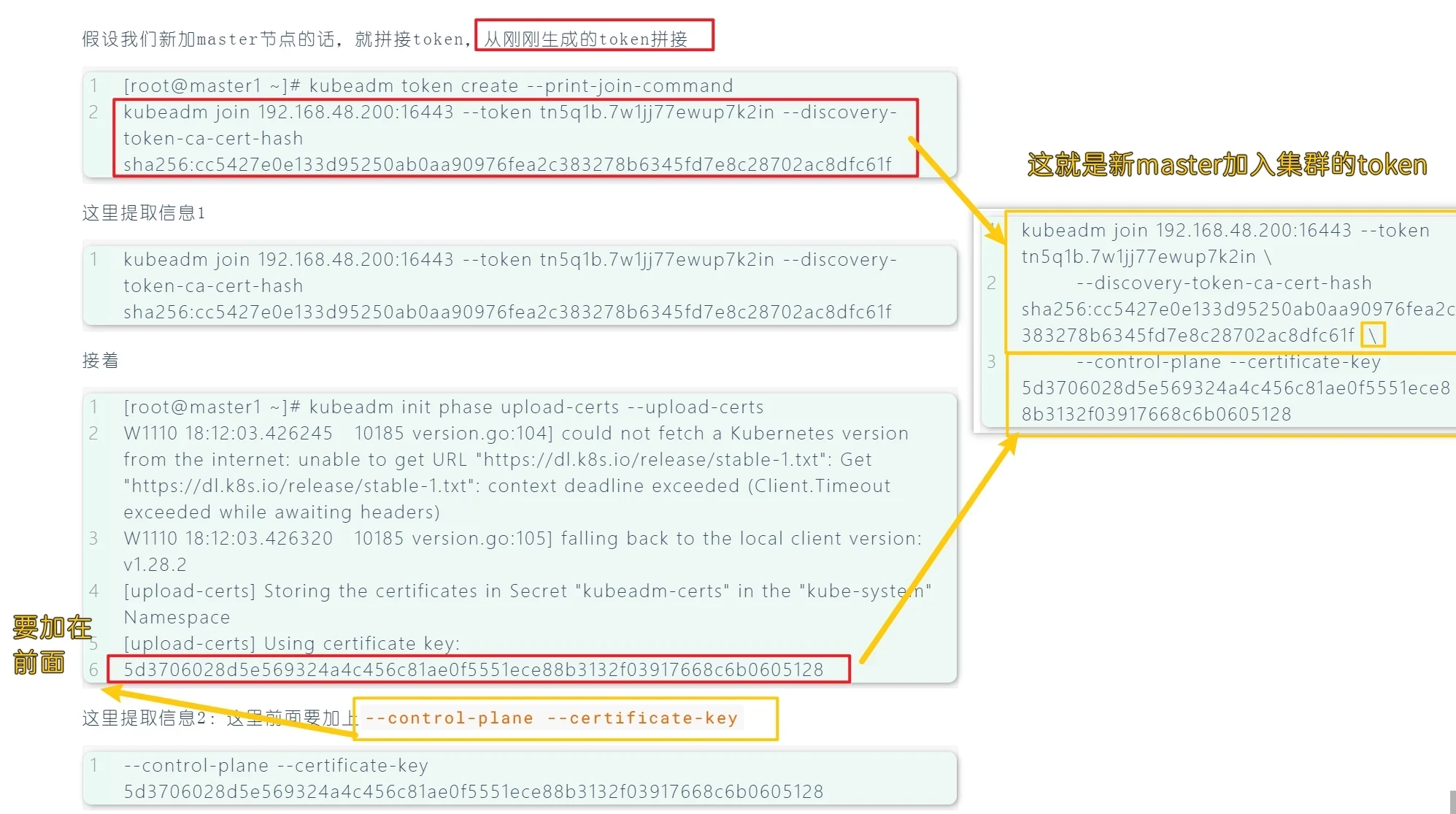

Simulate adding a new master node to the K8S cluster

To add a new master node, assemble the join command from the token output generated above.

    [root@master1 ~]# kubeadm token create --print-join-command
    kubeadm join 192.168.48.200:16443 --token tn5q1b.7w1jj77ewup7k2in --discovery-token-ca-cert-hash sha256:cc5427e0e133d95250ab0aa90976fea2c383278b6345fd7e8c28702ac8dfc61f

Extract part 1 from this output:

    kubeadm join 192.168.48.200:16443 --token tn5q1b.7w1jj77ewup7k2in --discovery-token-ca-cert-hash sha256:cc5427e0e133d95250ab0aa90976fea2c383278b6345fd7e8c28702ac8dfc61f

Next:

    [root@master1 ~]# kubeadm init phase upload-certs --upload-certs
    W1110 18:12:03.426245   10185 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://dl.k8s.io/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)
    W1110 18:12:03.426320   10185 version.go:105] falling back to the local client version: v1.28.2
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key:
    5d3706028d5e569324a4c456c81ae0f5551ece88b3132f03917668c6b0605128

Extract part 2 from this output, prefixing the key with --control-plane --certificate-key:

    --control-plane --certificate-key 5d3706028d5e569324a4c456c81ae0f5551ece88b3132f03917668c6b0605128

Combined:

    kubeadm join 192.168.48.200:16443 --token tn5q1b.7w1jj77ewup7k2in \
        --discovery-token-ca-cert-hash sha256:cc5427e0e133d95250ab0aa90976fea2c383278b6345fd7e8c28702ac8dfc61f \
        --control-plane --certificate-key 5d3706028d5e569324a4c456c81ae0f5551ece88b3132f03917668c6b0605128 \
        --cri-socket unix:///var/run/cri-dockerd.sock

    # The same command shape with another token and certificate key
    kubeadm join 192.168.48.200:16443 --token lnkno8.u4v1l8n9pahzf0kj \
        --discovery-token-ca-cert-hash sha256:cc5427e0e133d95250ab0aa90976fea2c383278b6345fd7e8c28702ac8dfc61f \
        --control-plane --certificate-key 41e441fe56cb4bdfcc2fc0291958e6b1da54d01f4649b6651471c07583f85cdf \
        --cri-socket unix:///var/run/cri-dockerd.sock

Note: --cri-socket unix:///var/run/cri-dockerd.sock has been appended to the end of the command.
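Assembling the control-plane join command by hand is error-prone, so the concatenation can be sketched as a small function. The name `control_plane_join` is hypothetical; it only joins strings and does not call kubeadm itself.

```shell
#!/bin/bash
# Hypothetical helper: turn a worker join command (part 1) and a
# certificate key (part 2) into a control-plane join command that also
# points kubeadm at the cri-dockerd socket.
control_plane_join() {
    local join_cmd=$1 cert_key=$2
    # Trim trailing whitespace left over from copy/paste.
    join_cmd=${join_cmd%"${join_cmd##*[![:space:]]}"}
    printf '%s --control-plane --certificate-key %s --cri-socket %s\n' \
        "$join_cmd" "$cert_key" "unix:///var/run/cri-dockerd.sock"
}
```

Called with the two values extracted above, for example `control_plane_join "kubeadm join 192.168.48.200:16443 --token ... --discovery-token-ca-cert-hash sha256:..." "<certificate key>"`, it prints a single-line equivalent of the combined command.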


Install the calico network plugin

Target node: [master1]

Add a hosts entry, otherwise raw.githubusercontent.com cannot be reached

    echo '185.199.108.133 raw.githubusercontent.com' >> /etc/hosts

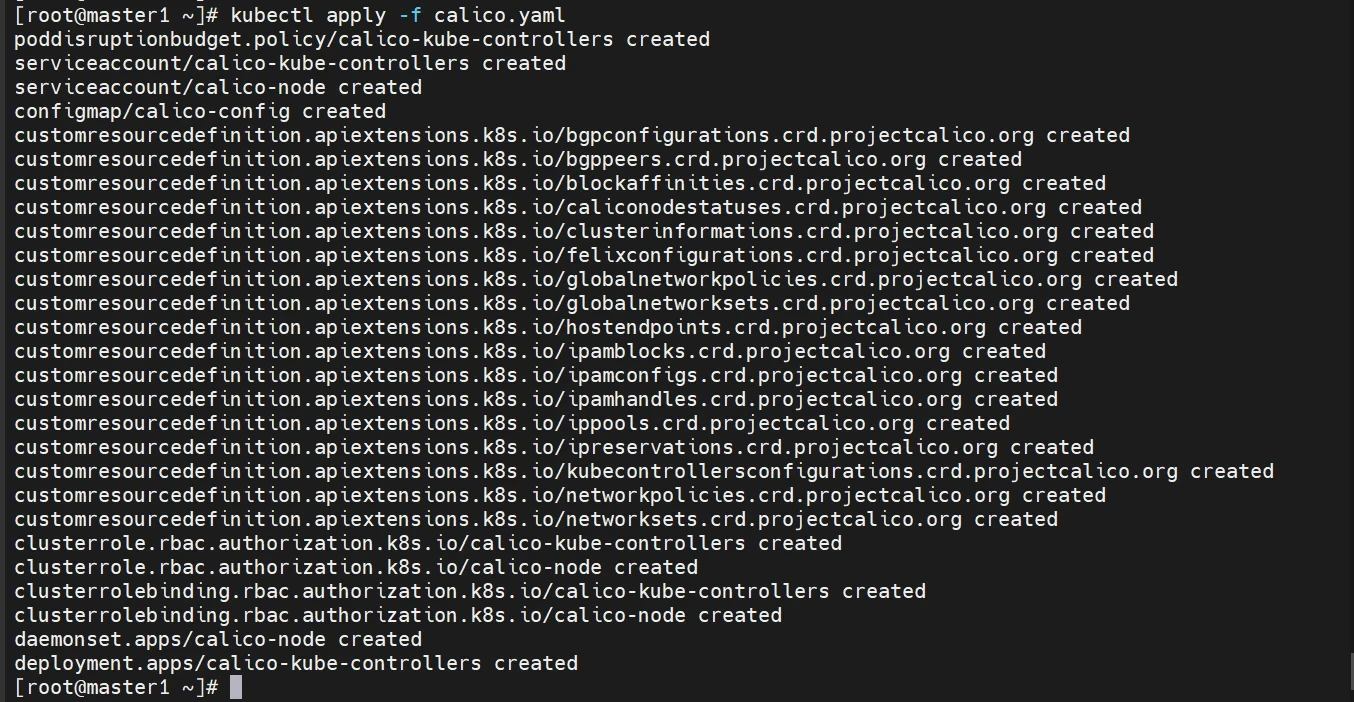

应用operator资源清单文件

网络组件有很多种,只需要部署其中一个即可,推荐Calico。

1 curl https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/calico.yaml -O

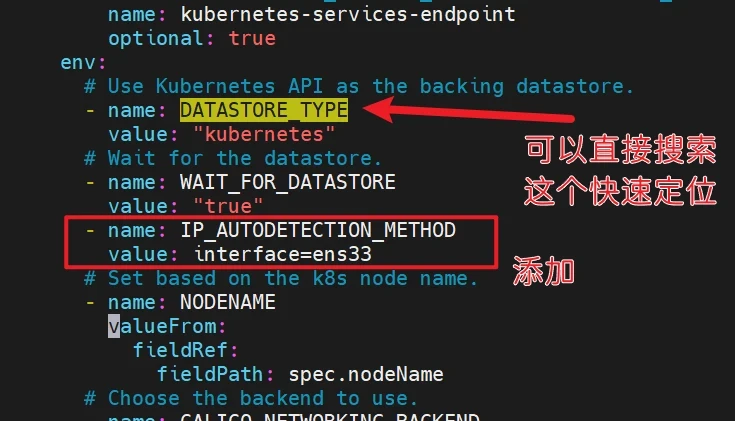

1 2 3 4 5 6 7 8 9 [root@master1 ~ ] - name: WAIT_FOR_DATASTORE value: "true" - name: IP_AUTODETECTION_METHOD value: interface=ens33

1 2 sed -i 's| docker.io/calico/| registry.cn-hangzhou.aliyuncs.com/qianyios/|' calico.yaml kubectl apply -f calico.yaml

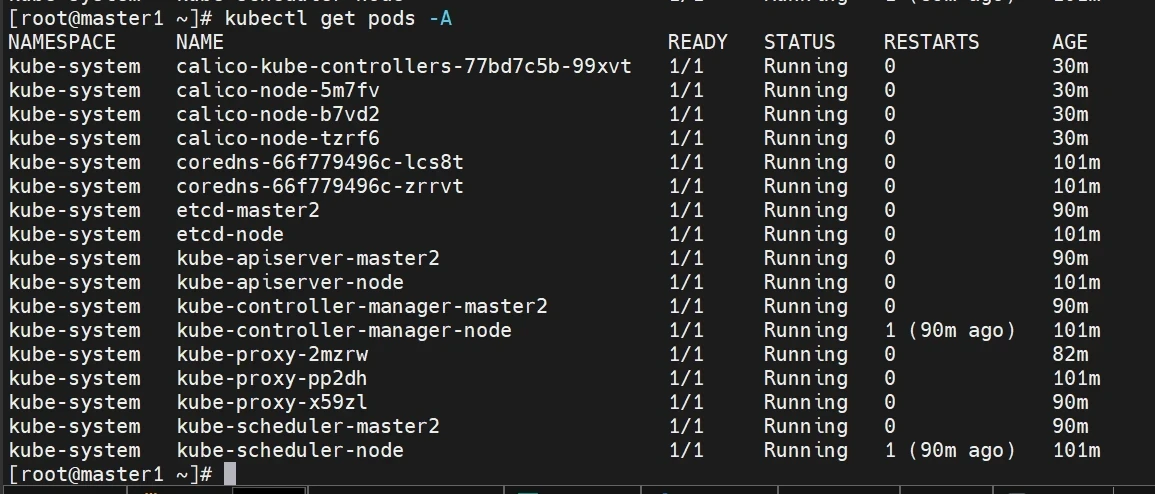

Watch the pods in the kube-system namespace

This can take roughly 20 minutes. Wait until every pod is Running.

    kubectl get pods -n kube-system
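While waiting, it can help to list only the pods that are not up yet. The following sketch filters the default table output of `kubectl get pods`, assuming the usual column order NAME, READY, STATUS, RESTARTS, AGE. The function name `not_ready_pods` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get pods` table output on stdin and
# print the names of pods whose STATUS is neither Running nor Completed.
not_ready_pods() {
    # NR > 1 skips the header row; column 3 is STATUS in the default output.
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}
```

For example, `kubectl get pods -n kube-system | not_ready_pods` prints nothing once all pods in the namespace are running.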

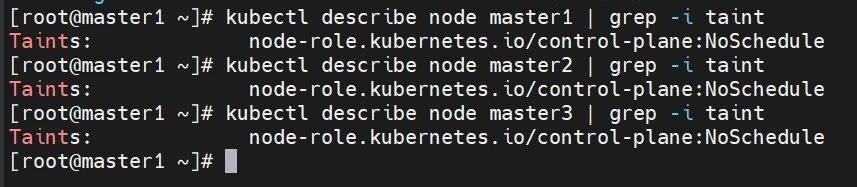

拿掉master节点的污点

节点 master1 和 master2 都有一个名为 node-role.kubernetes.io/control-plane:NoSchedule 的污点。这个污点的作用是阻止普通的 Pod 被调度到这些节点上,只允许特定的控制平面组件(如 kube-apiserver、kube-controller-manager 和 kube-scheduler)在这些节点上运行。

这种设置有助于确保控制平面节点专门用于运行 Kubernetes 的核心组件,而不会被普通的工作负载占用。通过将污点添加到节点上,可以确保只有被授权的控制平面组件才能在这些节点上运行。

1 2 3 kubectl describe node master1 | grep -i taint kubectl describe node master2 | grep -i taint kubectl describe node master3 | grep -i taint

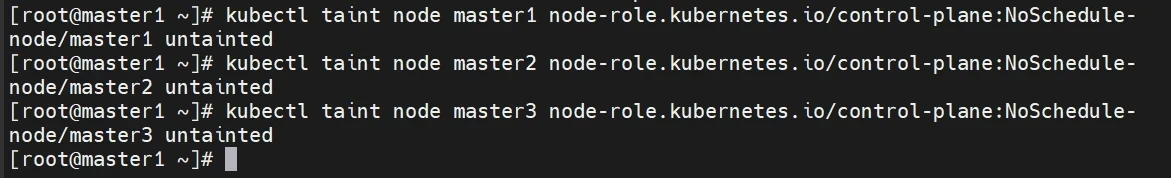

去除污点

1 2 3 kubectl taint node master1 node-role.kubernetes.io/control-plane:NoSchedule- kubectl taint node master2 node-role.kubernetes.io/control-plane:NoSchedule- kubectl taint node master3 node-role.kubernetes.io/control-plane:NoSchedule-

安装dashboard

操作节点[master1]

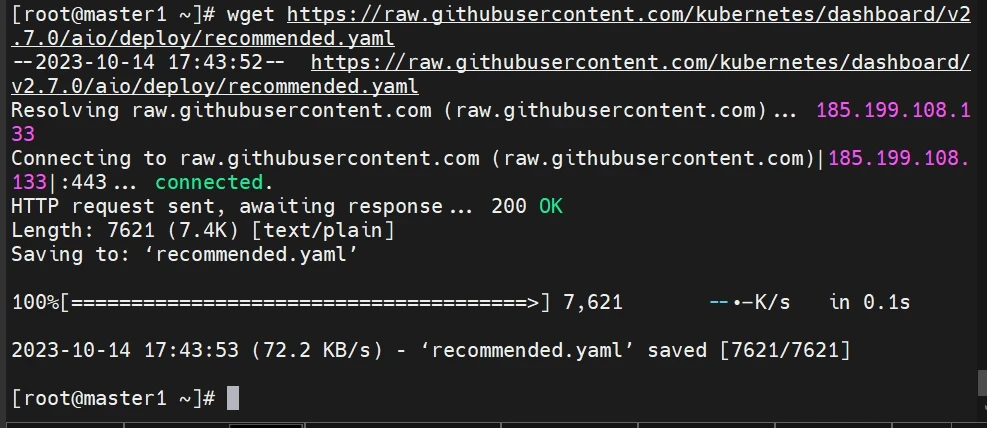

下载文件

https://github.com/kubernetes/dashboard/releases/tag/v2.7.0

目前最新版本v2.7.0

1 2 3 wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml sed -i 's/kubernetesui\/dashboard:v2.7.0/registry.cn-hangzhou.aliyuncs.com\/qianyios\/dashboard:v2.7.0/g' recommended.yaml sed -i 's/kubernetesui\/metrics-scraper:v1.0.8/registry.cn-hangzhou.aliyuncs.com\/qianyios\/metrics-scraper:v1.0.8/g' recommended.yaml

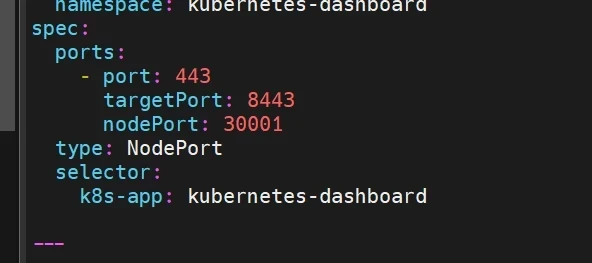

修改配置文件

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 vim recommended.yaml --- kind: Service apiVersion: v1 metadata: labels: app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: ports: - port: 443 targetPort: 8443 nodePort: 30001 type: NodePort selector: app: kubernetes-dashboard ---

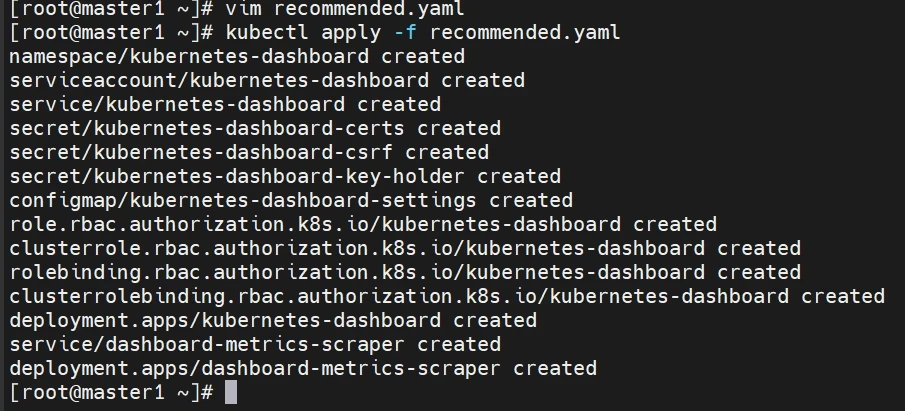

运行dashboard

1 kubectl apply -f recommended.yaml

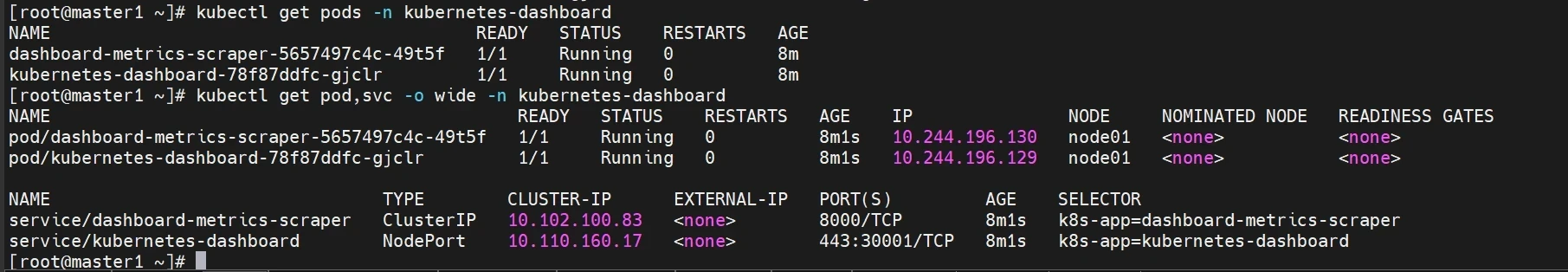

检查运行状态

1 2 kubectl get pods -n kubernetes-dashboard kubectl get pod,svc -o wide -n kubernetes-dashboard

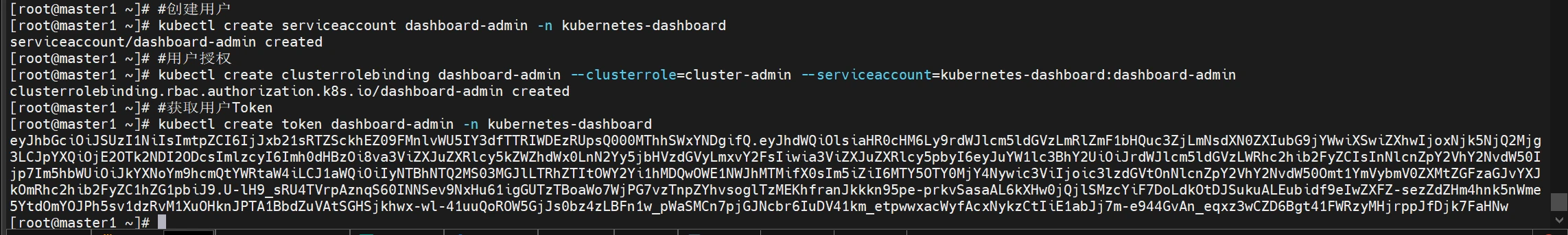

Create a cluster-admin user

Create a service account and bind it to the default cluster-admin cluster role:

    # Create the user
    kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard
    # Grant permissions to the user
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin
    # Get the user's token
    kubectl create token dashboard-admin -n kubernetes-dashboard

Record the token

    eyJhbGciOiJSUzI1NiIsImtpZCI6IjJxb21sRTZSckhEZ09FMnlvWU5IY3dfTTRIWDEzRUpsQ000MThhSWxYNDgifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjk5NjQ2Mjg3LCJpYXQiOjE2OTk2NDI2ODcsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJkYXNoYm9hcmQtYWRtaW4iLCJ1aWQiOiIyNTBhNTQ2MS03MGJlLTRhZTItOWY2Yi1hMDQwOWE1NWJhMTMifX0sIm5iZiI6MTY5OTY0MjY4Nywic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.U-lH9_sRU4TVrpAznqS60INNSev9NxHu61igGUTzTBoaWo7WjPG7vzTnpZYhvsoglTzMEKhfranJkkkn95pe-prkvSasaAL6kXHw0jQjlSMzcYiF7DoLdkOtDJSukuALEubidf9eIwZXFZ-sezZdZHm4hnk5nWme5YtdOmYOJPh5sv1dzRvM1XuOHknJPTA1BbdZuVAtSGHSjkhwx-wl-41uuQoROW5GjJs0bz4zLBFn1w_pWaSMCn7pjGJNcbr6IuDV41km_etpwwxacWyfAcxNykzCtIiE1abJj7m-e944GvAn_eqxz3wCZD6Bgt41FWRzyMHjrppJfDjk7FaHNw

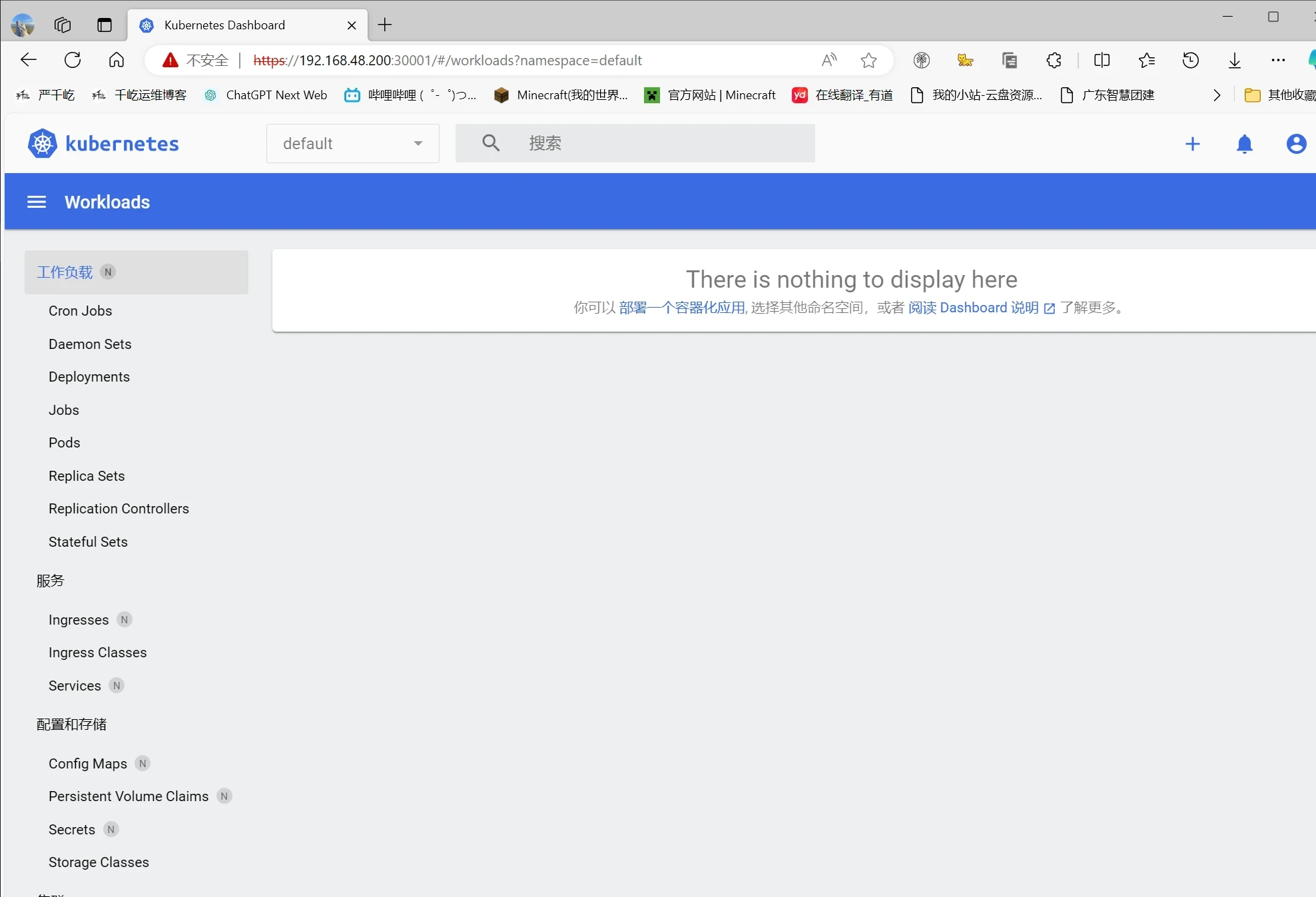

Log in from a browser

    https://192.168.48.200:30001

When prompted, enter the token recorded in the previous step.

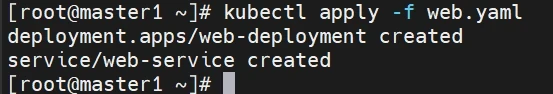

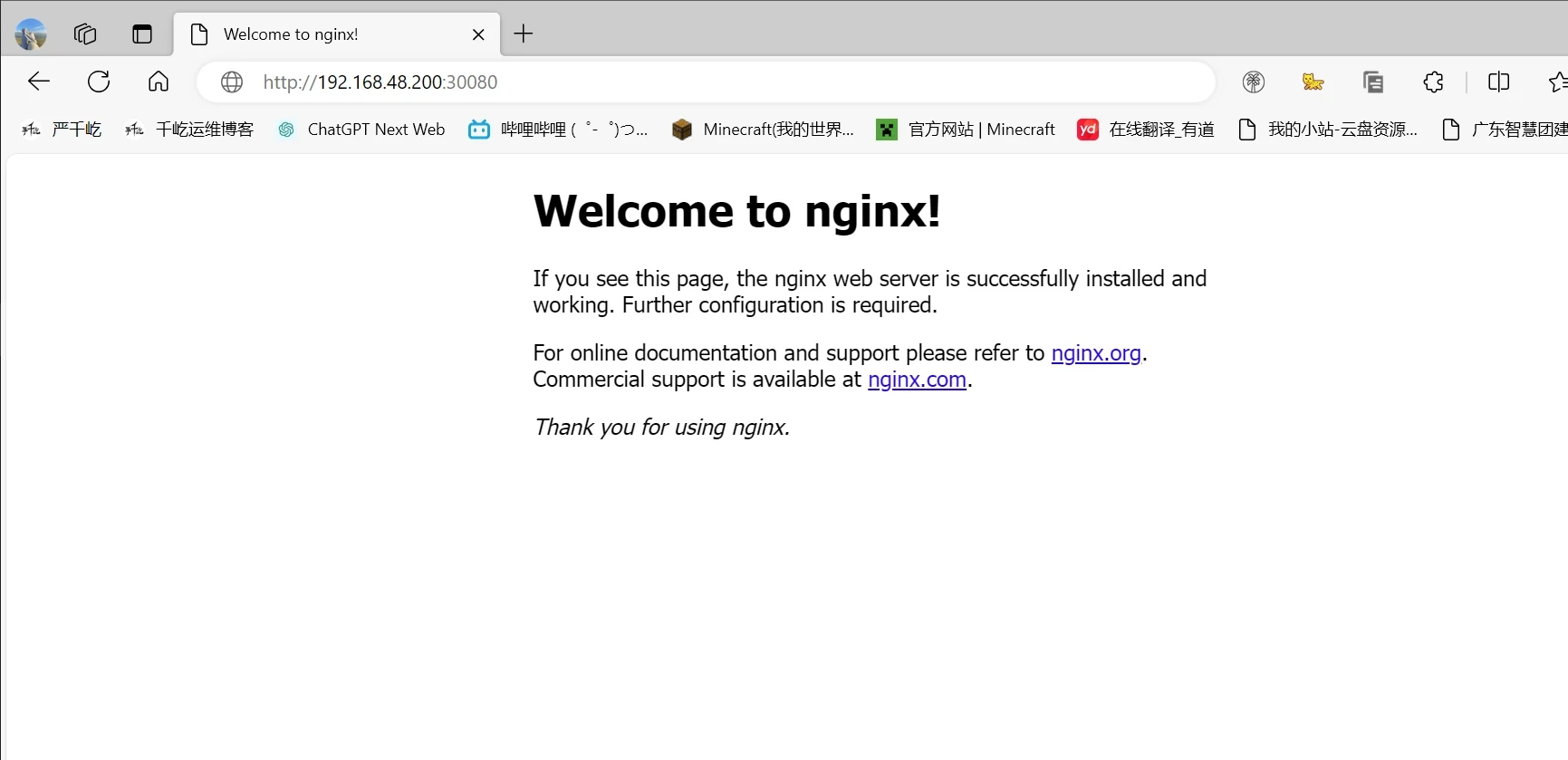

部署一个nginx测试

操作节点[master1]

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 vim web.yaml kind: Deployment apiVersion: apps/v1 metadata: labels: app: web-deployment-label name: web-deployment namespace: default spec: replicas: 3 selector: matchLabels: app: web-selector template: metadata: labels: app: web-selector spec: containers: - name: web-container image: nginx:latest imagePullPolicy: Always ports: - containerPort: 80 protocol: TCP name: http - containerPort: 443 protocol: TCP name: https --- kind: Service apiVersion: v1 metadata: labels: app: web-service-label name: web-service namespace: default spec: type: NodePort ports: - name: http port: 80 protocol: TCP targetPort: 80 nodePort: 30080 - name: https port: 443 protocol: TCP targetPort: 443 nodePort: 30443 selector: app: web-selector kubectl apply -f web.yaml

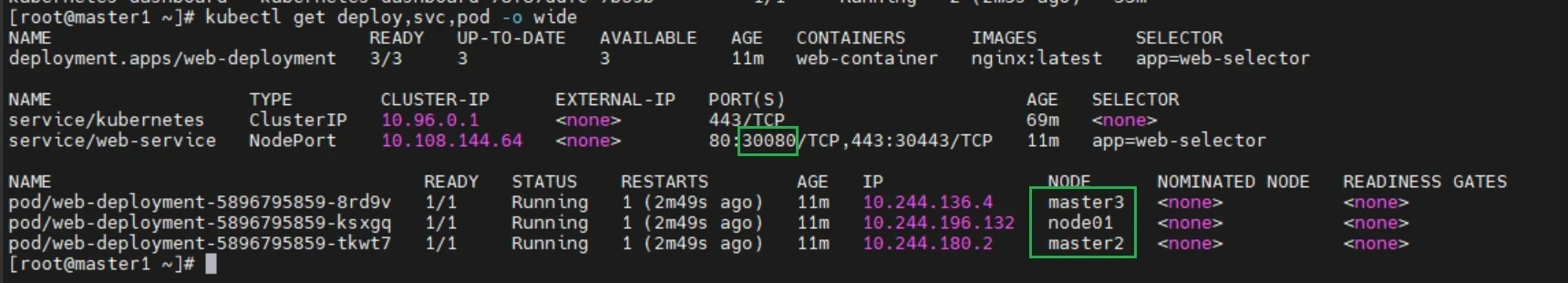

1 2 ### 查看nginx的pod 的详细信息 kubectl get deploy,svc,pod -o wide

访问nginx网站

1 http://192.168.48.200:30080
